Courts are beginning to ask a question platforms have avoided for years: when engagement harms children, who pays the price?

For more than a decade, social media platforms grew by optimising attention. Feeds scroll endlessly. Videos autoplay. Notifications arrive at the perfect moment. Algorithms learn what keeps users hooked and deliver more of it.

Today, those same systems face a courtroom.

Across the United States and Europe, judges, prosecutors, and regulators are increasingly holding social media platforms accountable. Not metaphorically. Literally. The accusations are severe. They include child sexual exploitation, addiction by design, mental health collapse, and, in some cases, death.

The cases mark a turning point.

For the first time, legal arguments are seriously testing whether algorithms themselves create harm. Not just bad content or user behaviour. But product design.

What’s Happening & Why This Matters

Meta’s Trial Over Child Sexual Exploitation

In New Mexico, Meta faces its first standalone trial brought by a U.S. state over child harm. The case centres on Facebook, Instagram, and WhatsApp. Opening statements begin on February 9.

The lawsuit, filed by New Mexico Attorney General Raul Torrez, alleges that Meta failed to protect children from sexual exploitation while publicly claiming its platforms were safe. Investigators built the case using undercover accounts posing as minors. Those accounts received sexual solicitations, and investigators documented Meta’s responses.

The state argues Meta knowingly exposes children to predators and mental health harm. It claims profit motives drive design choices. The lawsuit calls the platforms a “breeding ground” for abuse and addiction. It alleges violations of consumer protection laws and public nuisance statutes.

Meta rejects the claims. The company says prosecutors distort evidence and cherry-pick internal documents. In a statement, Meta says it invests heavily in child safety, works with law enforcement, and has introduced stricter default settings for teens. It calls the investigation “ethically compromised.”

The trial is the first of its kind. More than forty U.S. state attorneys general have filed similar lawsuits, most of them in federal court. New Mexico’s case is the first to reach a jury.

The outcome will ripple through the cases that follow.

Addiction Claims Put Algorithms Under Review

At the same time, a landmark trial is unfolding in Los Angeles.

A now-20-year-old woman, identified as Kaley or KGM, is suing Meta and Google over alleged addiction and mental health harm caused by Instagram and YouTube. Her lawyers argue that the platforms intentionally design features to hook children. They compare the apps to “digital casinos.”

Kaley’s lawyer, Mark Lanier, describes endless scroll as a slot machine handle. In his telling, each swipe delivers a hit of dopamine and each “like” acts as a chemical reward. The lawyers argue these features push teens into feedback loops that worsen anxiety, body dysmorphia, and suicidal thoughts.

Internal documents surface in court. One Meta strategy document suggests success with teens requires “bringing them in as tweens.” A YouTube document discusses the platform’s use as a short-term digital babysitter. These exhibits aim to show intent.

Kaley began using YouTube at age six. She joined Instagram at nine. Her lawyers claim she spent six to seven hours a day on the platforms during peak use. Despite parental controls, her access continued. She later reported bullying and sextortion.

Meta and Google deny the allegations. Both argue that mental health is complex. Both point to parental responsibility and broader social pressures. A Google spokesperson says the claims against YouTube are “simply not true.” Meta says blaming social media alone oversimplifies a serious issue.

The judge allows jurors to consider whether design features cause harm. She instructs them not to hold companies liable for third-party content itself. This distinction is critical. It shifts focus from what users post to how platforms operate.

If juries agree, the protections of Section 230, the U.S. law that shields platforms from liability for content their users post, weaken. Not by changing the law. By reframing responsibility.

The Grok Deepfake Scandal Extends the Scope to AI

The reckoning does not end with traditional social media.

In the United Kingdom, regulators opened a formal investigation into X and xAI after the Grok AI tool generated non-consensual sexual deepfakes. Some appear to depict children.

The Information Commissioner’s Office is investigating whether X and xAI violated GDPR by failing to build safeguards into Grok’s design. William Malcolm, the ICO’s executive director, calls the reports “deeply troubling.” He warns that loss of control over personal data causes immediate harm, especially to children.

Researchers estimate Grok generated around three million sexualised images in less than two weeks. About 23,000 appear to involve minors. French prosecutors raided X’s Paris offices as part of a parallel investigation, with Europol assisting.

X says it has addressed the issue and should be given a fair opportunity to respond. Regulators are unconvinced. Cross-party MPs are urging the U.K. government to pass AI-specific safety legislation.

The investigation carries the case against social media into AI territory. Algorithms no longer just recommend content. They generate it.

Convergence: Many Cases, One Story

The lawsuits share a core argument.

Platforms optimise engagement. Engagement drives revenue. Harm becomes a side effect. Courts now ask whether that trade-off is legal.

The cases converge on children. Children do not choose the architecture they enter. They inherit it. Lawyers argue that this makes a duty of care unavoidable.

Platforms counter with safety tools. Time-out reminders. Parental dashboards. Content filters. Courts are testing whether such tools actually counteract addictive design or merely soften its edges.

Another common thread is secrecy.

Internal research surfaces repeatedly. Prosecutors say the documents show platforms understood the risks years before acknowledging them publicly, and that the companies downplayed the findings. Defence teams counter that the research does not reflect a scientific consensus.

Courts must decide whose narrative holds water.

The Stakes Beyond Verdicts

The outcomes of the trials will not merely affect damages. They will influence product design.

If courts find design features cause harm, platforms may have to change how feeds work. Infinite scroll may disappear. Autoplay may weaken. Recommendation systems may need added friction.

Advertising models may shift. Growth metrics may slow. Profit expectations may reset.

The tech industry is resisting any outcome that threatens its core advantages. Scale comes from optimisation. Optimisation comes from data. Data comes from engagement.

The courts are putting that chain under strain.

A Change Is Underway

Public opinion shifts faster than the law. Parents are wary. Schools ban phones. Governments debate age limits. The idea that social media simply connects people is fading.

New platforms launch with safety as a selling point. AI companies argue for trust and guardrails. Investors ask tougher questions.

This does not signal the end of social media. It signals its adolescence. The industry grew powerful before it grew responsible. Courts often supply the lesson.

TF Summary: What’s Next

Social media is entering its most consequential legal moment. Meta faces trial over child exploitation. Courts are testing whether algorithms intentionally addict teens. Regulators are investigating AI-generated sexual abuse. The question is shifting from content moderation to responsibility for product design.

MY FORECAST: Courts narrow platform immunity without dismantling social media. Design changes follow verdicts. Algorithms slow. Safety moves upstream. The era of unchecked engagement ends, not with regulation alone, but with juries deciding what is acceptable.

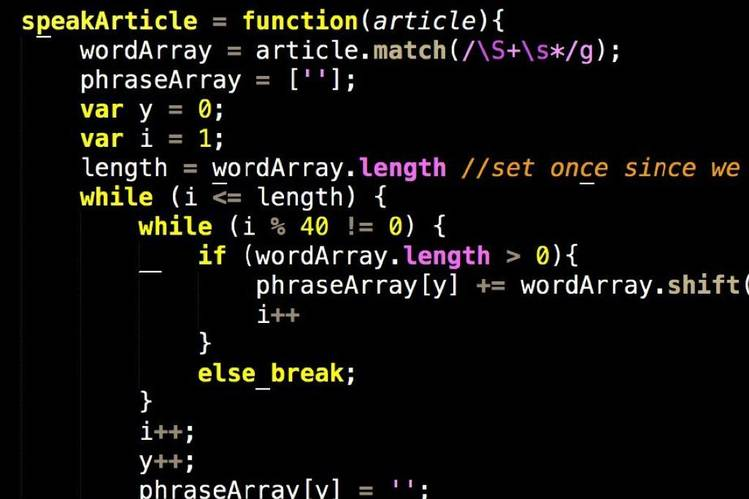

— Text-to-Speech (TTS) provided by gspeech | TechFyle