At Las Vegas’ CES 2026, Nvidia reframed the autonomous vehicle conversation. For years, self-driving progress centered on sensors, maps, and pattern recognition. This year, Nvidia shifted the focus toward machine reasoning. The company introduced a new class of AI models designed to think through rare, messy, real-world driving situations rather than simply react to past data.

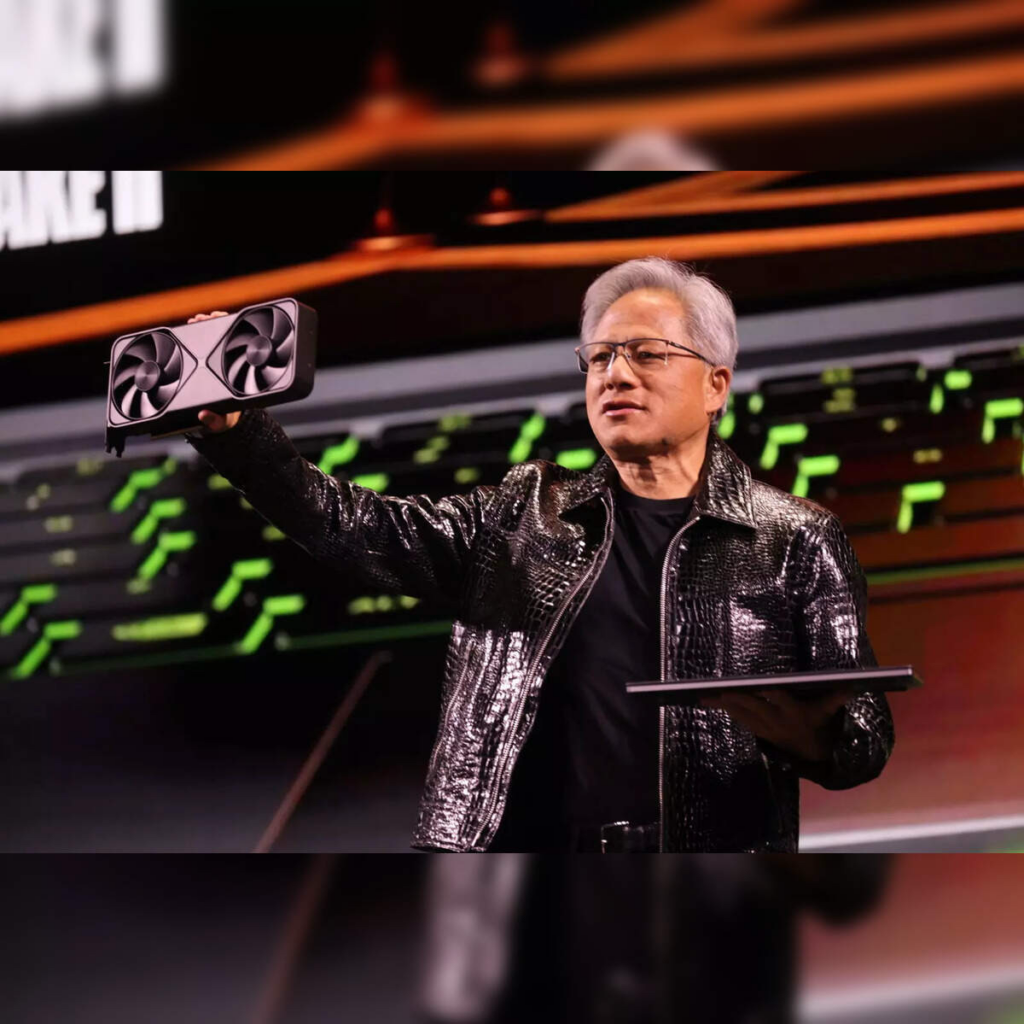

The announcement came directly from Jensen Huang, wearing his familiar black leather jacket, speaking to a packed CES audience. His message was clear. Autonomy does not fail during everyday driving. It fails during edge cases: road closures, human hesitation, unwritten rules. Nvidia now claims it addresses that gap.

What’s Happening & Why This Matters

Nvidia introduced Alpamayo, a self-driving AI platform that blends perception with language-style reasoning. Traditional autonomous systems rely on pattern learning. Alpamayo adds internal decision logic. The model reasons through uncertainty, explains its actions, and adapts its behavior when conditions defy expectations.

Huang described the technology as a turning point for physical AI. He framed Alpamayo as the moment machines move from pattern execution toward contextual understanding. “The ChatGPT moment for physical AI is here,” Huang said during his keynote, describing a system that reasons before it acts rather than reacting after mistakes occur.

Real-World Partners

During the presentation, Nvidia showed footage of a Mercedes-Benz CLA equipped with Alpamayo navigating dense San Francisco traffic. The vehicle handled construction zones, unexpected driver behavior, and ambiguous lane markings. A passenger sat behind the steering wheel with their hands resting in their lap. The system narrated its own decisions in real time.

Nvidia explains that Alpamayo combines vision models with structured reasoning layers. The car not only sees objects but interprets intent. It predicts how human drivers behave and adjusts proactively. This approach reduces reliance on massive datasets while improving performance across rare scenarios that typically confuse autonomous systems.

The company confirmed active collaboration with Mercedes‑Benz. Nvidia already powers the vehicle’s compute stack. Alpamayo now serves as its cognitive layer. Production vehicles using the system already exist, with initial deployment beginning in the United States before expansion across Europe and Asia.

New Chips, Architecture

Nvidia also revealed details about its Vera Rubin chip platform. The architecture increases inference efficiency while lowering energy cost. Huang demonstrated server pods containing more than 1,000 Rubin chips optimised for physical AI workloads. The platform processes sensor data, planning logic, and reasoning simultaneously without latency spikes.

Industry analysts at CES described the announcement as Nvidia’s metamorphosis from chip supplier to autonomy platform leader. Paolo Pescatore from PP Foresight said Nvidia is uniquely positioned as a systems company that also acts as a hardware vendor. That is a differentiating distinction. Carmakers won’t only purchase chips. They will also integrate and offer intelligence.

The move places Nvidia in direct competition with Tesla’s vertically integrated approach. Elon Musk responded publicly, stating that reaching near-perfect autonomy remains easy compared to solving the long tail of driving edge cases. Nvidia’s answer to that challenge now sits inside Alpamayo.

TF Summary: What’s Next

Nvidia treats autonomy as a reasoning problem rather than a perception problem. That reframing changes the entire self-driving roadmap. Cars are not dependent exclusively on massive historical datasets. They evaluate context in real time and understand intent. Nvidia’s models adapt when rules break down.

MY FORECAST: Reasoning-based autonomy becomes the baseline expectation across premium vehicles before the decade’s end. Carmakers that rely solely on pattern-learning stacks lose relevance. Nvidia’s platform strategy extends beyond self-driving cars to robotics, logistics, and industrial automation worldwide.

Title: CES 2026: Nvidia’s Reasoning AI Redefines Self-Driving