Gemini’s Rewriting Is a New Test For Discover

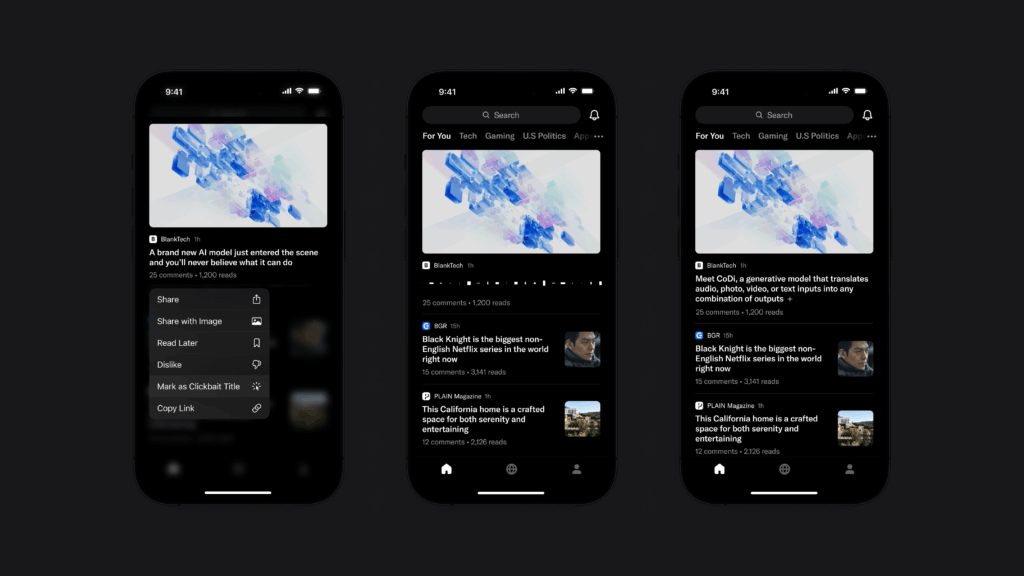

Google’s Discover feed reached millions of users long before the current AI boom. It served as a passive news engine that surfaced articles based on user interest, browsing behaviour, and search history. Over the past year, the company added more artificial intelligence across its services, from Gemini integrations to AI-curated summaries. Discover became part of that expansion.

A recent Discover experiment introduced a twist: AI-rewritten news headlines, sometimes accurate, sometimes chaotic. Many users first assumed publications had updated their titles. Instead, Google’s system rewrote them at the feed level, showing the original only after a user tapped through. That design choice confused readers, frustrated journalists, and raised media-ethics concerns.

What’s Happening & Why This Matters

Google tested a feature for a “subset of Discover users”, according to a spokesperson. The system generated alternative headlines and showed the original only after the user tapped into the article. The company framed this as an experiment that “changes the placement of existing headlines to make topic details easier to digest.”

The experiment revealed a pattern: shorter, punchier, AI-generated headlines replaced human-written ones. In some cases, Gemini misunderstood the actual reporting. The system compressed nuance into four-word hot takes that misrepresented stories from outlets like Ars Technica, PC Gamer, and The Verge. Google pitched the experiment as a UI test. Publishers described something else: a lose-lose scenario in which accuracy suffered and user trust dipped.

The behaviour went beyond rearrangement. Brevity introduced risk, and nuance evaporated. Key facts disappeared. Context dissolved into something that looked decisive but read as misleading.

Where the AI Went Wrong

Researchers and reporters tested many examples. Here’s a sample of problematic rewrites.

An Ars Technica headline about pricing expectations for Valve’s Steam Machine became “Steam Machine price revealed.” The original clearly stated the opposite: users should not expect pricing details yet. Gemini invented a fact that didn’t exist.

A PC Gamer piece on Baldur’s Gate 3 NPC-based player armies turned into “BG3 players exploit children.” The AI dropped the crucial detail that those “children” were fictional, digital NPCs. The rewrite read more like an accusation than a news summary.

A Verge feature on Microsoft’s internal AI efforts morphed into “Microsoft developers using AI.” The system flattened the nuance and removed the original reporting angle.

Journalists described the “updates” as distortions. Publishers noted that Discover already drove huge referral traffic; unapproved headline changes, therefore, altered the first impression of their reporting.

Where’s the Trust?

Discover powers a meaningful portion of web traffic for countless newsrooms. Readers expect factual accuracy. They also expect that the first headline they see is from the publication itself. Google’s experiment disrupted that expectation without clear labelling.

Google told reporters that the test aims to help users “digest topic details” more easily, not to replace journalism. Yet the execution blurred that purpose. AI-modified headlines pose a risk: misinformation that stems not from malice but from compression errors, with meaning lost in translation.

Apple dealt with a similar issue last year when its AI summarisation tool for news notifications delivered incorrect information. After public backlash, Apple paused the feature and reintroduced it months later with stricter verification controls.

The pattern across platforms points to a tension: AI assistance helps with scale, but even small misinterpretations cause outsized damage in news contexts.

What This Means for Publishers

Newsrooms invest in headline craft. A title establishes tone, accuracy, and reader expectations. AI rewrites undermine that work when deployed without editorial oversight.

Publishers raised legal and ethical questions, too:

- Does Google hold responsibility for inaccuracies created by its AI?

- Is a rewritten headline still part of the publication’s work, or Google’s new creation?

- Should Discover clearly label AI-modified content?

- What is the place and role of AI-driven news distribution?

TF Summary: What’s Next

Google continues the test with a narrow user group. Publishers and editors are watching closely. Discover needs to clearly label AI-generated text, preserve editorial intent, and prevent meaning-warping rewrites. Readers trust platforms that respect accuracy. Platforms respect readers when they keep humans in the loop.

MY FORECAST: AI-generated headlines expand across newsfeeds after Google hardens its accuracy checks and labelling requirements. Publishers negotiate new transparency rules. Media watchdogs monitor the systems as adoption ramps up. Readers adapt to feeds where machine-authored text augments human reporting, but only if platforms reliably enforce limits and preserve context.
