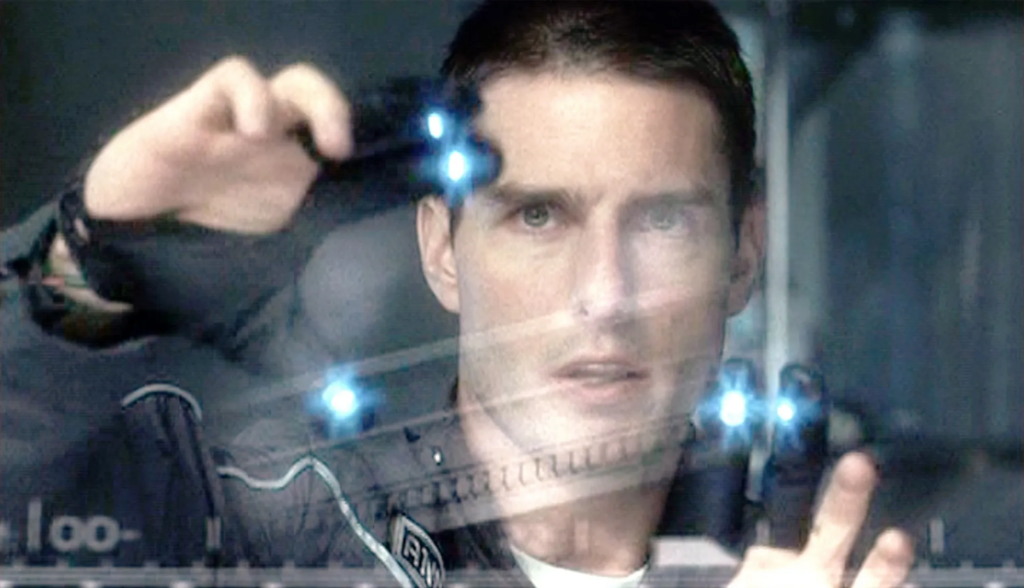

The UK government is exploring the development of a controversial algorithm designed to predict the likelihood of violent crimes, particularly murder. The project, which has generated significant debate, uses personal data to assess risk factors in individuals, both those with prior criminal records and those without criminal convictions. While the aim is to improve public safety by identifying high-risk individuals early, critics are raising concerns about the privacy implications, potential discrimination, and the accuracy of such predictions. The initiative invites comparisons to Minority Report, though it differs greatly: it relies on analysis of personal data rather than the film's futuristic approach to predicting crimes.

What’s Happening & Why This Matters

In the pursuit of smarter policing and better crime prevention, the UK Ministry of Justice (MoJ) is working on a new initiative to develop an algorithm capable of predicting violent crimes. The project, called the Homicide Prediction Project, aims to analyze a variety of data points, including criminal history, mental health factors, and other personal characteristics, to evaluate an individual’s likelihood of committing violent crimes, especially murder. It intends to use AI-powered algorithms to assess crime risk, enabling authorities to take preventative action.

However, the use of personal data for these predictions has raised the most concern. Initially, the project was framed as a research-only initiative focused on improving the accuracy of risk assessments for individuals on probation or those with prior convictions. Yet leaked documents suggest that the algorithm may use data from individuals who have never been convicted of a crime, including information about mental health, self-harm, domestic violence cases, and other sensitive matters. Some of this information comes from interactions with the police that may be unrelated to criminal activity, such as seeking help for personal struggles.

The government’s stance is that using this data will help improve risk assessments, especially for individuals at higher risk of committing violent offenses in the future. The project is part of a broader effort to enhance public safety by identifying these high-risk individuals early and implementing targeted interventions. Despite these intentions, the project has sparked backlash from privacy advocates and civil liberties groups, who argue that the algorithm could lead to unjust profiling and violate personal privacy. These groups are especially concerned about how the data is used, who has access to it, and whether there are adequate safeguards against discrimination.

Another key concern is the bias inherent in some of the data sources. For example, police data has long been criticized for over-representing minority communities and low-income groups. By relying on this data, the algorithm might disproportionately target individuals from these communities, perpetuating existing inequalities, reinforcing racial profiling, and further entrenching social disparities.

The government has defended the project, stating that it is committed to privacy protection and to ensuring that the data used in the algorithm is closely monitored. The Ministry of Justice emphasizes that this initiative is not about violating privacy but about making better use of data to prevent serious crimes. Furthermore, it has assured the public that it will continue to refine the model, making it more accurate and less likely to misidentify individuals.

TF Summary: What’s Next

The pre-crime algorithm under development by the UK government marks a significant step in the application of AI to public safety and criminal justice. As the project moves forward, the government will need to address the growing concerns surrounding data privacy, algorithmic bias, and the potential for unjust profiling. While the initiative promises to improve the accuracy of risk assessments, it raises ethical questions about the future of AI-driven policing and the impact of such technologies on civil rights.

As the Homicide Prediction Project progresses, it will likely face increased scrutiny from lawmakers, human rights organizations, and the public. The government will need to find a balance between leveraging AI to enhance public safety and ensuring that the rights of individuals are protected, especially those who are vulnerable to discrimination or unwarranted surveillance. How this balance is struck will have far-reaching implications for the future of AI in law enforcement and its role in society.
