Self-driving cars promise fewer crashes, smoother traffic, and a future where your vehicle doubles as a chauffeur. Yet reality rarely follows marketing copy. In Austin, Texas, Tesla’s robotaxi rollout delivers both technical progress and a few uncomfortable reminders that physics still wins every argument.

Since Tesla launched its autonomous ride service, its robotaxis have racked up a series of incidents that raise questions about readiness, safety claims, and regulatory oversight. None of the crashes resulted in serious injuries. However, the frequency of mishaps draws scrutiny because these vehicles operate without human drivers.

The broader story reaches far beyond Austin. It touches on public trust, liability law, insurance models, and the long-standing philosophical question of whether machines can truly outperform human judgment in messy, real-world environments.

What’s Happening & Why This Matters

Early Deployment Shows Real-World Friction

Tesla launches its robotaxi program using modified Model Y vehicles equipped with its Full Self-Driving software. At first, safety drivers sit behind the wheel. Later, the company begins fully driverless rides, a bold step that turns a controlled experiment into a public transportation service.

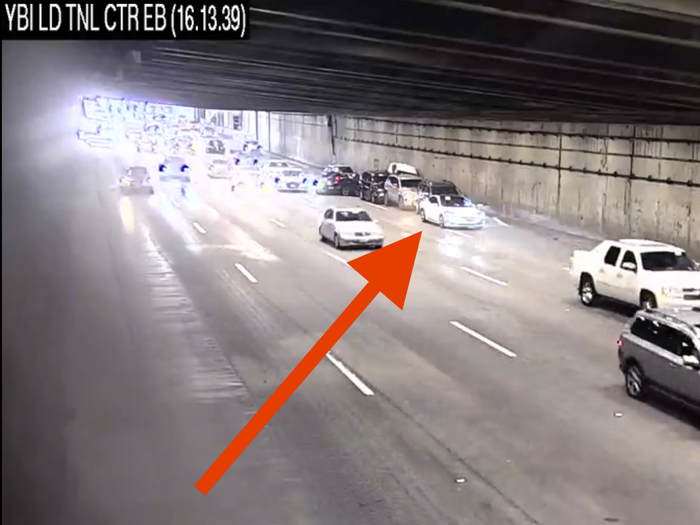

According to filings with the National Highway Traffic Safety Administration (NHTSA), the fleet has recorded 14 crashes since launch. None involves fatalities. Most cause property damage only. Still, autonomous vehicles are held to a higher standard because they promise superior safety.

Reported incidents include collisions with fixed objects, a heavy truck, a stationary bus, and obstacles encountered while reversing. Speeds range from walking pace to about 17 mph. These are not cinematic disasters. They resemble the everyday fender-benders humans cause constantly. Yet machines do not get the same social forgiveness.

One incident initially reported as minor is later upgraded to include a hospitalization, intensifying attention from regulators and critics. Even small changes in classification ripple through safety statistics and public perception.

Crash Rate Raises Comparative Questions

Tesla often emphasizes data. Here, the data creates tension. An average U.S. driver experiences a minor crash roughly once every 229,000 miles. Robotaxis, by contrast, log 14 crashes across about 800,000 miles of operation, or one crash roughly every 57,000 miles.

That gap, roughly four times the human crash frequency per mile, suggests human drivers currently outperform these vehicles in real-world conditions. It does not prove autonomous systems are unsafe overall, but it undermines claims of immediate superiority.
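The arithmetic behind that comparison is easy to check. The short Python sketch below uses only the figures quoted above (14 crashes, about 800,000 miles, and a human baseline of one minor crash per 229,000 miles). The function and variable names are illustrative, not taken from any Tesla or NHTSA tool, and the mileage figure is treated as exact even though the source gives it as approximate.

```python
# Figures quoted in the article; all names here are illustrative assumptions.
HUMAN_MILES_PER_CRASH = 229_000   # average U.S. driver, minor crashes
ROBOTAXI_CRASHES = 14             # crashes reported since launch
ROBOTAXI_MILES = 800_000          # "about 800,000 miles" (approximate)


def miles_per_crash(miles: float, crashes: int) -> float:
    """Return the average distance driven between crashes."""
    if crashes <= 0:
        raise ValueError("need at least one crash to compute a rate")
    return miles / crashes


robotaxi_miles_per_crash = miles_per_crash(ROBOTAXI_MILES, ROBOTAXI_CRASHES)

# How many times more often the robotaxis crash per mile than the human baseline.
rate_ratio = HUMAN_MILES_PER_CRASH / robotaxi_miles_per_crash

print(f"Robotaxi: one crash every {robotaxi_miles_per_crash:,.0f} miles")
print(f"Crash frequency vs. human baseline: {rate_ratio:.1f}x")
```

The result is about 57,000 miles per crash and a ratio of about 4. This is a plain per-mile average. It does not adjust for road type or differences in how crashes are reported, and with only 14 events the estimate is rough.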

Autonomous driving is a probabilistic problem. Sensors interpret noisy inputs. Software predicts intent. Roads contain an effectively endless supply of edge cases: construction zones, erratic pedestrians, glare from the sun, unpredictable human behavior. A single unusual scenario can confuse even sophisticated models.

The paradox of automation appears here. Humans make mistakes frequently, but machines must approach near-perfection before society accepts them. One robotic error feels like a system failure. One human error feels like Tuesday.

Removal of Safety Drivers Signals Confidence — and Risk

Following the early incidents, Tesla CEO Elon Musk announces that robotaxis are beginning to operate without safety drivers. This decision suggests confidence in software improvements and remote monitoring capabilities.

From an engineering perspective, removing the human fallback marks a phase change. The system moves from supervised autonomy to operational autonomy. Philosophically, it shifts responsibility entirely onto algorithms.

Critics argue this move prioritizes speed over caution. Supporters counter that real progress requires real-world exposure. Both positions contain truth. Aviation achieved extraordinary safety only after decades of accidents, investigations, and iterative improvement.

Autonomous driving appears to be walking a similar evolutionary path, though public patience for trial-and-error remains thin.

Competition Reveals Industry-Wide Challenges

Tesla is not alone. Waymo, owned by Alphabet, also faces incidents despite extensive testing. Authorities investigate cases involving collisions with pedestrians and failures to respond properly to school buses.

Waymo states its system performs better than human drivers at avoiding injury-causing crashes. That claim may be statistically accurate within certain contexts. However, edge cases dominate headlines because they feel unsettling. When a machine makes a mistake, people wonder whether the error reveals a deeper flaw.

Autonomous vehicles must solve not only engineering problems but also the problem of psychological acceptance. People tend to distrust opaque systems that control life-and-death outcomes.

Liability, Regulation, and Insurance Implications

Every robotaxi crash triggers complex legal questions. Who bears responsibility — the manufacturer, software developer, fleet operator, or passenger? Traditional traffic law assumes a human driver. Autonomous vehicles dismantle that assumption.

Regulators monitor deployments closely. Agencies like the NHTSA collect incident reports and may impose restrictions if safety thresholds are not met. Meanwhile, insurance companies rethink risk models. Premiums tied to driver behavior become meaningless when software drives.

Cities also face new policy decisions. Autonomous fleets influence traffic flow, public transit economics, and urban planning. Austin becomes a real-world laboratory where policymakers observe how robotaxis integrate into daily life.

Public Trust Remains the Ultimate Gatekeeper

Technology adoption depends less on technical feasibility than on social acceptance. Airplanes became routine only after decades of safety improvements and transparent investigations. Elevators gained trust once automatic controls replaced human operators — but only after safety brakes proved reliable.

Robotaxis confront the same journey. Each incident chips away at confidence, even if overall safety improves over time. Conversely, long stretches without problems gradually normalize the technology.

Trust operates like a fragile ecosystem. It builds slowly and collapses instantly.

TF Summary: What’s Next

Autonomous taxis represent one of the most transformative transportation technologies since the internal combustion engine. Austin’s experience shows both promise and turbulence. The vehicles function. They complete rides. Yet they still struggle with unpredictable real-world conditions that humans navigate intuitively.

MY FORECAST: Expect regulators to demand more transparency, more testing data, and stricter safety benchmarks before large-scale expansion. At the same time, competition among companies will accelerate improvements. Machine learning systems improve fastest when exposed to diverse scenarios, though that exposure inevitably includes mistakes.

The likely future is not a sudden robot takeover of roads. It is a gradual blending of human and autonomous driving, with machines dominating controlled environments first and chaotic settings later. The end state may indeed be safer transportation — but the path there looks less like a clean software rollout and more like biological evolution: messy, iterative, and stubbornly real.
