The AI Boom Is Burning Energy Like Never Before

OpenAI has struck a massive 10-gigawatt deal with Broadcom to design and build custom AI chips and network systems. The deal is the largest energy allocation ever for a single tech company. That capacity, enough to power a large city or 8 million U.S. households, underscores a growing concern: AI’s enormous appetite for electricity.

As OpenAI’s reach expands through tools like ChatGPT and Sora 2, the company’s infrastructure needs are skyrocketing. While this new partnership secures future computing power, it also raises questions about sustainability, energy policy, and the future of data centres in an AI-dominated era.

What’s Happening & Why This Matters

10 Gigawatts for AI Ambition

OpenAI’s collaboration with Broadcom is a turning point in the race to sustain AI’s growth. Following deals with Nvidia and AMD, this latest partnership ensures OpenAI can develop custom AI accelerators. These are chips specifically optimised for next-generation models like Sora 2 and ChatGPT.

Sam Altman, OpenAI’s CEO, called the collaboration “a critical step in building the infrastructure needed to unlock AI’s potential and deliver real benefits for people and businesses.” According to reports, deployment of these new systems begins in 2026. This signals OpenAI’s intent to vertically integrate its hardware to match its software demands.

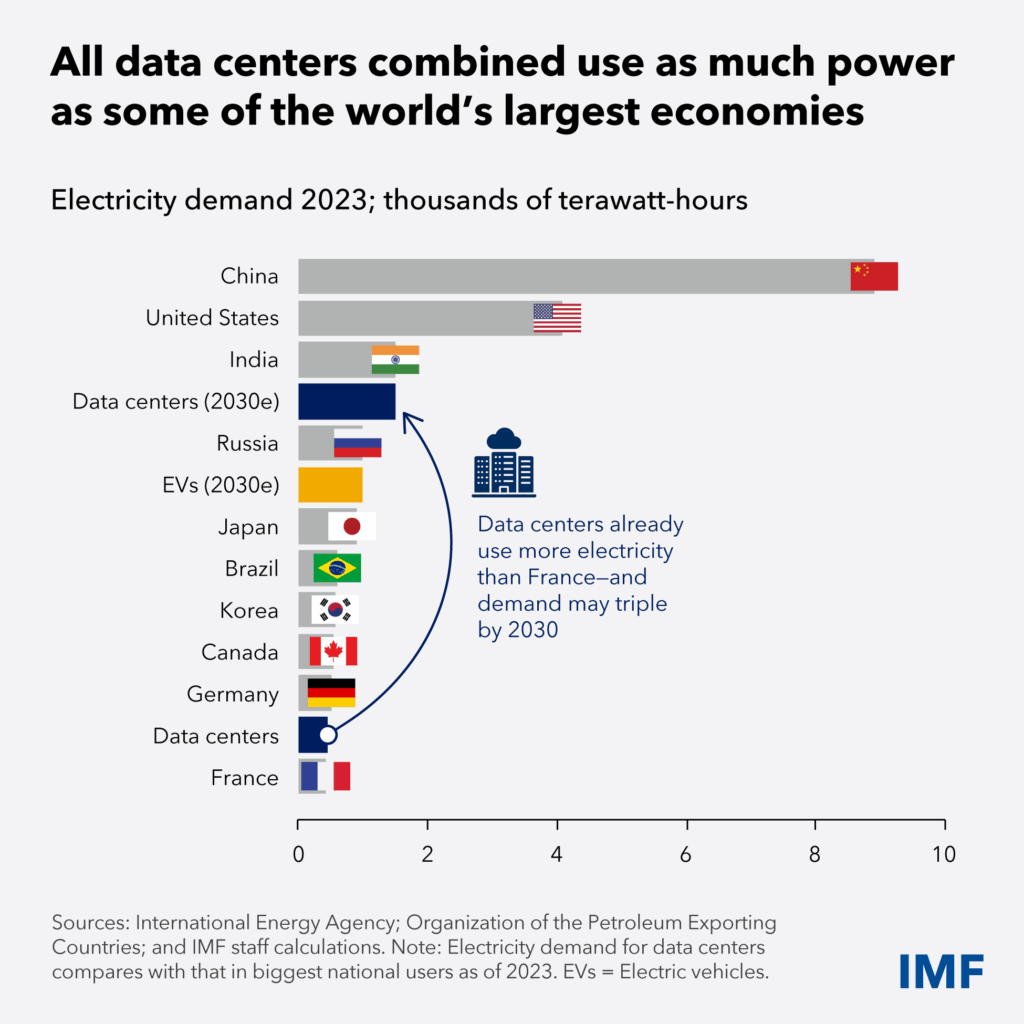

This investment — measured in gigawatts — reflects AI’s soaring energy footprint. The U.S. Department of Energy estimates that data centres could consume up to 12% of America’s electricity by 2028. This is nearly triple their 2023 share of 4.4%.
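For a rough sense of scale, the figures quoted so far can be sanity-checked with a few lines of Python. This is a back-of-the-envelope sketch rather than anything from the original reporting: the function names are illustrative, and it assumes the 10 gigawatts is drawn continuously and spread evenly across the 8 million households.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def implied_household_draw_kw(capacity_gw: float, households: int) -> float:
    """Average continuous draw per household (kW) implied by the comparison."""
    return capacity_gw * 1_000_000 / households  # 1 GW = 1,000,000 kW


def growth_multiple(projected_share: float, baseline_share: float) -> float:
    """How many times larger the projected share is than the baseline share."""
    return projected_share / baseline_share


if __name__ == "__main__":
    kw = implied_household_draw_kw(10, 8_000_000)
    print(f"{kw:.2f} kW per household")                # 1.25 kW per household
    print(f"{kw * HOURS_PER_YEAR:,.0f} kWh per year")  # 10,950 kWh per year
    print(f"{growth_multiple(12, 4.4):.2f}x")          # 2.73x
```

Under those assumptions, the household comparison works out to about 1.25 kW per home around the clock, and the projected 12% share is roughly 2.7 times the 2023 figure, consistent with "nearly triple."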

That increase stems from the rapid rise of generative AI workloads. Altman once admitted that “each ChatGPT query consumes as much energy as a light bulb running for several minutes.” Generating realistic video through Sora 2, however, demands vastly greater computational intensity.

The Power Problem Behind AI Progress

The Broadcom partnership enables OpenAI to be more self-reliant, embedding its AI learnings directly into silicon. Custom accelerators make training large models faster and more efficient. However, they won’t reduce power demand — at least not yet.

Broadcom’s stock jumped 12% following the announcement, reflecting investor enthusiasm for AI hardware expansion. CEO Hock Tan confirmed during an earnings call that Broadcom “secured a $10 billion customer,” widely believed to be OpenAI.

However, the deal’s scale has triggered debate about AI’s environmental cost. Energy analysts warn that powering massive data clusters for AI strains electrical grids and accelerates carbon emissions. Meeting that demand will require finding and integrating new renewable sources.

Some industry experts argue that OpenAI’s next play involves co-locating data centres near nuclear or hydroelectric facilities to combine sustainability with speed. This would mirror strategies by Google, Microsoft, and Amazon, which are racing to develop green AI infrastructure to offset their expanding energy consumption.

TF Summary: What’s Next

OpenAI’s 10-gigawatt Broadcom deal signals the next era of AI, a phase where power becomes as crucial as data. Expect future innovation in both model design and energy-efficient computing. AI companies are competing to minimise the cost of intelligence.

MY FORECAST: Sora 2’s rise proves that the AI future is visual, interactive, and resource-heavy. OpenAI’s hurdle now is to sustain its meteoric growth without overloading the planet. The next breakthroughs will come from larger models that are smarter and run on greener hardware, keeping the lights on while powering AI’s creative explosion.
