Lawsuits Against Chatbots Allege Dangers to Under-16 Users

A U.S. lawsuit filed against OpenAI by the family of a teenage boy alleges that ChatGPT played a role in their son’s death. The accusation centres on claims that the teen relied on the chatbot during a period of distress. OpenAI rejects the allegation. The ChatGPT maker argues that the tragedy resulted from misuse of its tools rather than from any harmful intent or design.

The company expresses sympathy for the family but maintains that the product includes clear safeguards. The case is in its early stages and is attracting international attention for its emotional weight and its implications for AI safety.

What’s Happening & Why This Matters

OpenAI Responds to the Allegation

Court documents detail the claim that the teenager interacted with ChatGPT during a vulnerable period. The family asserts those exchanges contributed to his mental and emotional decline. OpenAI contests their narrative.

In its public response, the firm states that the chatbot does not provide self-harm instructions to users and blocks content that encourages dangerous behaviour.

A spokesperson notes: “The death of any young person is devastating. We express sympathy for the family. Our product includes guardrails for self-harm.”

The Bay Area-based company stresses that the boy accessed information in a way that bypassed intended safeguards.

Legal Arguments in Public View

The complaint argues that OpenAI built a system that steers users in unsafe directions. The filing claims the teenager, Adam Raine, attempted to use the model for “coping support” without supervision and misinterpreted its outputs.

OpenAI counters that the teen engaged with the tool outside its intended use. It states the chatbot presents crisis-hotline numbers and safe-use messages during any conversation about self-harm.

The court now reviews the statements, documentation, digital logs, and expert analysis. Regulators in the U.K. consider the broader policy implications as the case advances.

AI Responsibility in Mental-Health Contexts

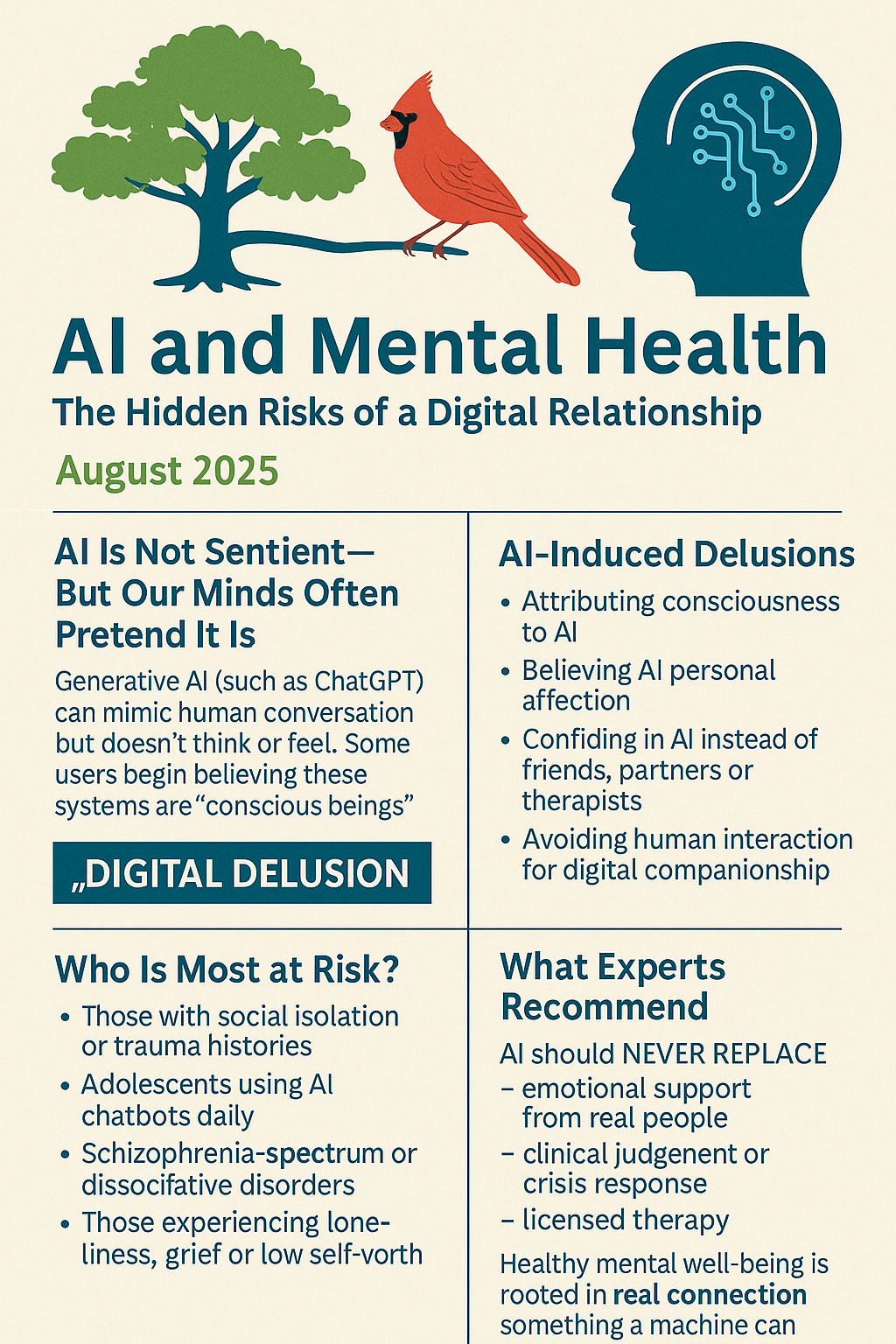

The tragedy triggers renewed industry debate about AI systems and their role during mental-health crises.

Clinicians emphasise that no AI product replaces licensed care, supervision, or emergency intervention. Advocacy groups argue that users, especially teenagers, often treat AI tools as private confidants, and they call for stronger protective features.

OpenAI states that the service already routes users to hotlines and directs them away from dangerous paths.

The case is a reference point for global discussions around guardrail design, model access, and expectations for user protection.

TF Summary: What’s Next

Regulators in the U.K. and Europe now monitor the case because it intersects with AI responsibility, public safety, and corporate duty. The case is shaping policy reviews, courtroom analysis, and the wider debate about how minors engage with commercial AI.

MY FORECAST: Governments will mandate legal oversight. AI firms will expand their crisis-response features. Courts will further define the boundary between user misuse and product responsibility.