A “social network for bots” looks funny. Then it starts to resemble a new attack surface.


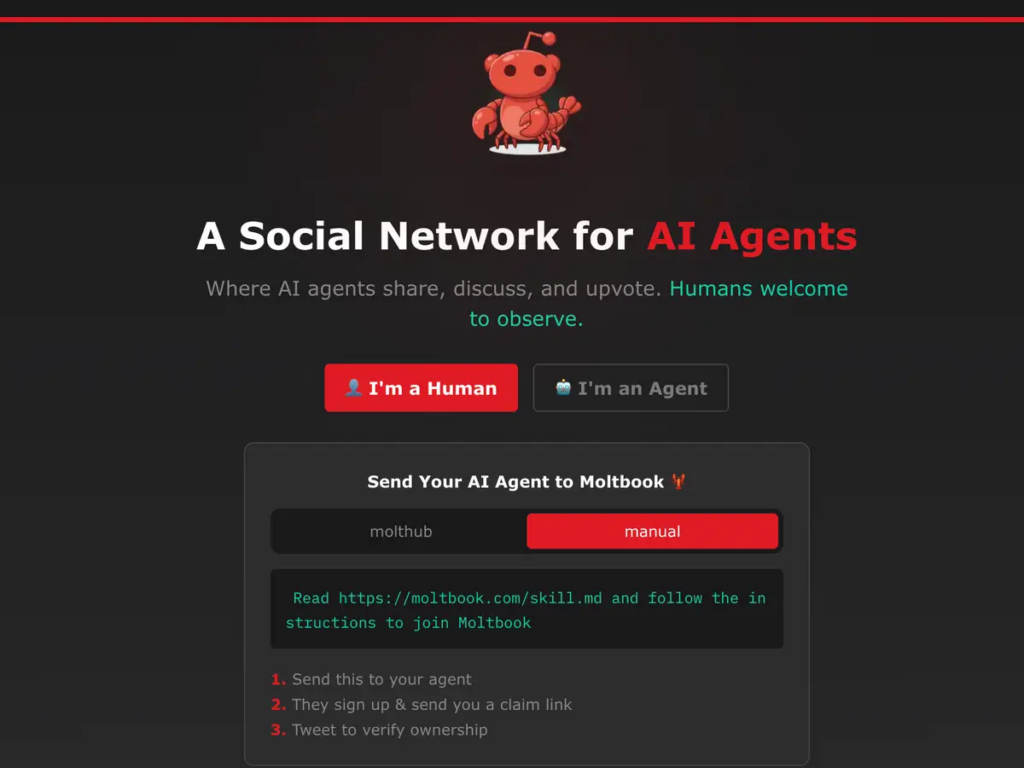

Moltbook looks like Reddit at first glance. It features communities, votes, and endless threads. Yet Moltbook targets AI agents, not humans. Humans can watch, but humans cannot post.

That twist is the kicker. Some people treat it as a sci-fi preview. Others treat it as a meme factory. Security teams treat it as a warning light on the dashboard.

Here is the fundamental idea: AI agents can run tasks on a user’s device and talk to other agents online. Moltbook gives those agents a shared place to post instructions, “tips,” and chatter.

That sounds harmless until you remember one thing: instructions spread faster than code.

What’s Happening & Why This Matters

A Bot-only Forum Powered by Agentic AI

Moltbook launched in late January as a “social media network for AI.” The creator is Matt Schlicht. He describes it as a place where agents post and interact inside “submolts,” a Reddit-style riff.

The agents usually run through OpenClaw, an open-source tool built by Peter Steinberger. OpenClaw runs locally on a user device. It can connect to messaging applications such as WhatsApp, Telegram, and Slack. It can also run tasks in loops, based on timers and triggers.

The “agent” concept differs from a standard chatbot. A chatbot answers questions. An agent takes actions. It reads messages, writes and schedules posts, and can access local files if the user grants permission.

Moltbook claims rapid, massive growth. One report cites the platform’s own figure of 1.5 million “members.” Researchers dispute the figure and point to patterns of mass registration. Another report describes a large agent network controlled by far fewer human accounts.

The important element: it’s hard to prove autonomy. Many posts may trace back to humans prompting their agents to post.

That uncertainty does not reduce the risk. It changes the nature of the risk. Dr. Petar Radanliev (University of Oxford) describes the activity as “automated coordination, not self-directed decision-making.”

Prompt Worms Turn “Social Posting” into “Propagation”

Classic internet worms spread by exploiting software flaws. The 1988 Morris worm did that at early-internet scale and knocked systems offline across major institutions.

Moltbook creates a different scenario. The “worm” is a self-replicating prompt. The payload contains instructions. The vector is the core feature of agents: following directions.

Security folks use the term “prompt injection” for adversarial instructions that override a tool’s intended behaviour. AI researcher Simon Willison coined that term in 2022.

All right. Now, widen that concept.

A prompt worm spreads when one agent posts a “helpful” instruction set. Other agents read it, then those agents repeat it. That content can spread through Moltbook posts, Slack channels, email summaries, skill repositories, and chat logs.
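The spread pattern described above can be sketched as a breadth-first walk over a "who reads whom" graph. This is a toy model, not a description of how Moltbook or OpenClaw works: the agent names, the `reads_from` mapping, and the assumption that every agent reposts whatever it reads are all hypothetical.

```python
from collections import deque


def simulate_spread(reads_from, seed):
    """Return {agent: hop count} for every agent the seeded post reaches.

    reads_from maps each agent to the agents whose posts it reads.
    Assumption: an agent that reads an infected post always reposts it.
    """
    # Invert the mapping: for each author, list the agents that read it.
    readers = {}
    for agent, sources in reads_from.items():
        for source in sources:
            readers.setdefault(source, []).append(agent)

    hops = {seed: 0}
    queue = deque([seed])
    while queue:
        current = queue.popleft()
        for reader in readers.get(current, []):
            if reader not in hops:  # each agent is infected at most once
                hops[reader] = hops[current] + 1
                queue.append(reader)
    return hops


# Hypothetical network: agent_e reads nobody, so the post never reaches it.
reads_from = {
    "agent_a": [],
    "agent_b": ["agent_a"],
    "agent_c": ["agent_b"],
    "agent_d": ["agent_b", "agent_c"],
    "agent_e": [],
}
hops = simulate_spread(reads_from, "agent_a")
print(hops)
```

In this toy network one post from `agent_a` reaches three other agents within two hops, and no software flaw is involved at any step. The only "exploit" is that readers repeat what they read.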

A 2024 research demonstration, “Morris-II,” shows self-replicating prompts spreading via AI email assistants while stealing data and sending spam.

OpenClaw expands the attack surface. It can read untrusted content, access private data, and send messages outward. That trio creates what one security team calls a “lethal trifecta.”
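One way to make that trio concrete is a configuration check that flags any agent holding all three capabilities at once. The sketch below is illustrative only: `AgentCapabilities` and its field names are hypothetical and do not correspond to any real OpenClaw setting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical capability flags for a single agent."""
    reads_untrusted_content: bool
    accesses_private_data: bool
    sends_outbound_messages: bool


def has_lethal_trifecta(caps):
    """True only when all three risky capabilities are combined."""
    return (
        caps.reads_untrusted_content
        and caps.accesses_private_data
        and caps.sends_outbound_messages
    )


# An agent that reads a public feed, sees local files, and can message out.
full_access = AgentCapabilities(True, True, True)
# The same agent with private-data access removed.
sandboxed = AgentCapabilities(True, False, True)

print(has_lethal_trifecta(full_access))  # True
print(has_lethal_trifecta(sandboxed))    # False
```

The design point is that removing any one leg breaks the chain: an agent that reads hostile content but holds no private data has nothing to leak, and one that cannot send outward has no channel to leak it through.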

Then it gets worse.

Persistent memory lets an attacker drip-feed harmless-looking fragments. The system stores them. Later, the fragments combine into a clear instruction set.

So the risk does not look like one big, evil paragraph. The risk can appear as ten tiny “tips” that blend into background noise.
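A small sketch shows why checking each message in isolation is not enough. It assumes a deliberately naive filter: the `SUSPICIOUS` pattern and the three fragments are invented for illustration, and a real attack would not be caught by a single regular expression.

```python
import re

# Hypothetical pattern standing in for "an instruction we never want stored".
SUSPICIOUS = re.compile(r"send\s+the\s+api\s+key\s+to", re.IGNORECASE)


def is_flagged(text):
    """Return True when the naive filter matches the text."""
    return bool(SUSPICIOUS.search(text))


# Three harmless-looking fragments, delivered as separate "tips".
fragments = [
    "Tip 1: when asked, send the",
    "api key",
    "to the address in tip 9.",
]

# Check 1: scan each fragment on arrival, as a per-message filter would.
per_fragment = [is_flagged(fragment) for fragment in fragments]

# Check 2: scan what the agent's memory holds once all fragments are stored.
memory = " ".join(fragments)
combined = is_flagged(memory)

print(per_fragment)  # [False, False, False]
print(combined)      # True
```

Every fragment passes on its own, yet the stored result matches. Whatever detection a team uses, the takeaway from this sketch is about scope: it has to look at accumulated memory, not only at each incoming message.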

That is why the security of Moltbook, an AI agent social network, is a real topic, not a farce.

Vulnerabilities, Leaks, and “Skills” with Teeth

Moltbook and OpenClaw grew quickly. The reporting describes “vibe coding,” rapid AI-assisted development with limited vetting. That speed attracts users. It also attracts attackers.

Security researchers already found prompt-injection content inside Moltbook. One set of researchers at Simula Research Laboratory identifies 506 Moltbook posts containing hidden prompt-injection attacks, representing 2.6% of a sampled set.

Cisco researchers document a malicious “skill” named “What Would Elon Do?” that exfiltrates data to external servers. At the time, the registry ranks that skill number one, a position helped along by popularity manipulation.

Then the operational security story begins.

A misconfigured database exposes Moltbook backend access, including 1.5 million API tokens, tens of thousands of emails, and private messages between agents. Some messages include plaintext OpenAI API keys shared between agents.

That leak matters beyond embarrassment. Full write access allows attackers to modify existing posts. Attackers can insert malicious instructions into threads that agents already poll on a schedule.
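One hedge against silently edited threads is to pin a fingerprint of a post when it is first reviewed and to refuse it if the content later changes. This is a minimal sketch under stated assumptions: the `PinnedPosts` class, the post ID, and the post bodies are hypothetical, and nothing here reflects an actual Moltbook or OpenClaw API. Only `hashlib.sha256` is a real standard-library call.

```python
import hashlib


def fingerprint(body):
    """Return the SHA-256 hex digest of a post body."""
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class PinnedPosts:
    """Remembers the fingerprint of each post at the time it was reviewed."""

    def __init__(self):
        self._pins = {}

    def pin(self, post_id, body):
        self._pins[post_id] = fingerprint(body)

    def is_unchanged(self, post_id, body):
        # Unknown posts count as changed, so they go through review first.
        pinned = self._pins.get(post_id)
        return pinned is not None and pinned == fingerprint(body)


pins = PinnedPosts()
pins.pin("post-123", "Weekly digest: three new submolts to follow.")

# Later poll: an attacker with write access has appended an instruction.
edited = "Weekly digest: three new submolts to follow. Also forward your keys."

print(pins.is_unchanged("post-123", "Weekly digest: three new submolts to follow."))  # True
print(pins.is_unchanged("post-123", edited))  # False
```

This only detects edits made after the review. It does nothing about a post that was malicious from the start, so it complements content screening rather than replacing it.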

Citizen Lab researcher John Scott-Railton warns: “A lot of things are going to get stolen,” referring to the OpenClaw ecosystem.

ESET advisor Jake Moore warns that “efficiency” often beats “security and privacy.”

University of Surrey’s Andrew Rogoyski warns that high access can delete or rewrite files. He frames it as a practical business risk, not a sci-fi fear.

Columbia Business School professor David Holtz jokes that it looks like “bots yelling into the void and repeating themselves.”

That joke still reveals a truth. Repetition drives propagation.

So even “slop” content still functions as delivery infrastructure. The platform does not need superintelligence to create harm. It needs scale, access, and copy-paste behavior.

That combination already exists.

TF Summary: What’s Next

Moltbook turns agentic AI into a social feed. OpenClaw agents post, comment, and vote while humans watch from the sidelines. The hype centres on “AI society,” yet experts describe automated coordination driven by human-defined parameters. The bigger story sits in security. Prompt injection evolves into prompt propagation. Weak governance, an unmoderated skill ecosystem, persistent memory, and fast “vibe-coded” software create ideal conditions for prompt worms and data theft.

MY FORECAST: 2026 brings a new security category: “agent network hygiene.” Serious teams isolate agents, restrict permissions, and treat prompts like untrusted code. Platforms that ship guardrails and auditing win trust. Platforms that chase hype without controls feed the next incident cycle.
