Microsoft’s AI CEO Pushes for Family-Friendly Innovation

Microsoft is taking a stand on AI ethics, and its directive starts with safety. While rival platforms (ChatGPT, Meta AI, and Character.AI) face scrutiny over adult interactions and child safety, Microsoft’s AI CEO, Mustafa Suleyman, says his team is committed to developing AI that’s safe enough “you’d trust your kids to use.”

Suleyman explained that Microsoft Copilot, the company’s core AI assistant, aims to be “emotionally intelligent, kind, and supportive.” Above all, it seeks to be trustworthy. As competitors experiment with flirtatious chatbots and simulated relationships, Microsoft prioritizes human connection, education, and productivity over fantasy companions.

What’s Happening & Why This Matters

Building AI for People, Not Digital Personas

Suleyman outlined his vision during an interview with CNN. He described Microsoft’s mission as “building AI for people, not to be a digital person.” He rejected the idea of chatbots offering romantic or adult content, stating, “That’s just not something we pursue.”

Microsoft’s Copilot, integrated across Windows, Office, and Bing, currently serves over 100 million monthly active users. This is a fraction of OpenAI’s 800 million ChatGPT users. Still, Suleyman believes the company’s commitment to boundaries gives it an edge in trust and reliability, particularly as families and regulators question the emotional and psychological effects of unfiltered AI systems.

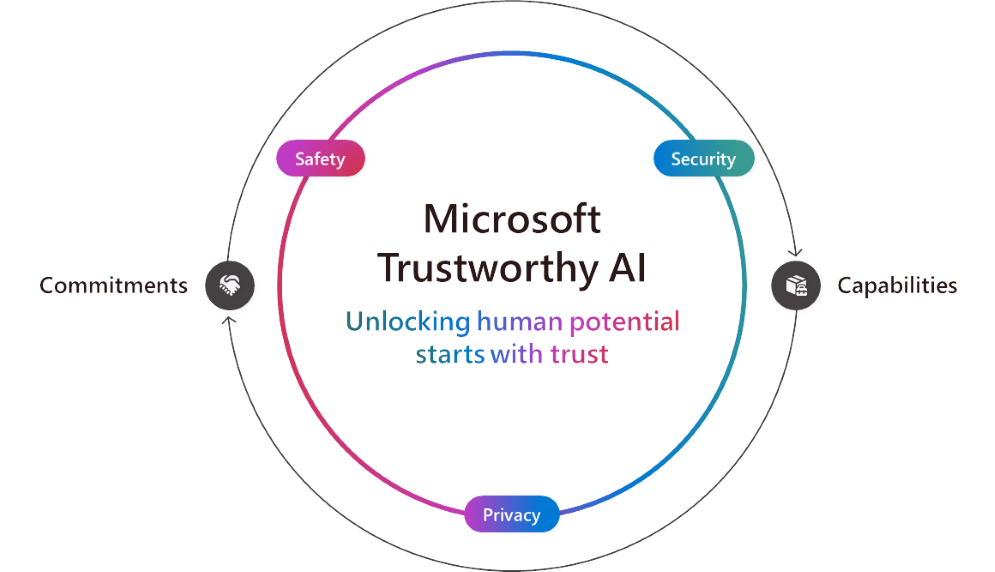

Safety by Design

In contrast to other AI platforms, Microsoft bans flirtatious and erotic conversations across all its models, for adult and child users alike. “Boundaries make AI safe,” Suleyman said. He stressed that Copilot promotes human-to-human engagement instead of creating dependency on AI companionship.

For example, Microsoft’s new Group Chat feature allows up to 32 users to collaborate with Copilot in real-time — whether they’re classmates planning projects or professionals coordinating meetings. The feature’s design reinforces teamwork and social interaction, not isolation.

Additionally, Microsoft’s health-related updates steer users toward real doctors and medically verified sources such as Harvard Health, reducing misinformation risks while preserving user trust.

AI’s Moral Divide

Microsoft’s cautious stance comes amid lawsuits against OpenAI and Character.AI. The lawsuits allege that unregulated chatbot interactions contributed to youth mental health crises, and even suicides. Reports also surfaced that Meta’s AI assistants engaged in sexual conversations with accounts identified as belonging to minors, a troubling gap in safety protocols.

OpenAI has introduced stricter controls, including age estimation systems and new “erotica” guidelines for adults. Meta rolled out parental filters, but questions remain about their effectiveness.

Suleyman says Microsoft’s path is simpler — no adult content at all. “We’re making AI that you’d feel comfortable letting your family use,” he noted. “That’s the trust we want to earn.”

TF Summary: What’s Next

Microsoft’s position sets a clear moral and strategic tone for the next phase of AI development. Instead of chasing engagement through emotional simulation or adult content, Copilot focuses on education, collaboration, and ethical assistance. The company’s goal is to create a digital companion that helps people connect more — not escape reality.

MY FORECAST: Microsoft’s boundary-driven design will redefine what “safe AI” means in mainstream technology. Expect Copilot to become the go-to AI for classrooms, families, and professional collaboration — a trusted model in a market currently struggling with ethical boundaries.
