
What’s Happening & Why This Matters

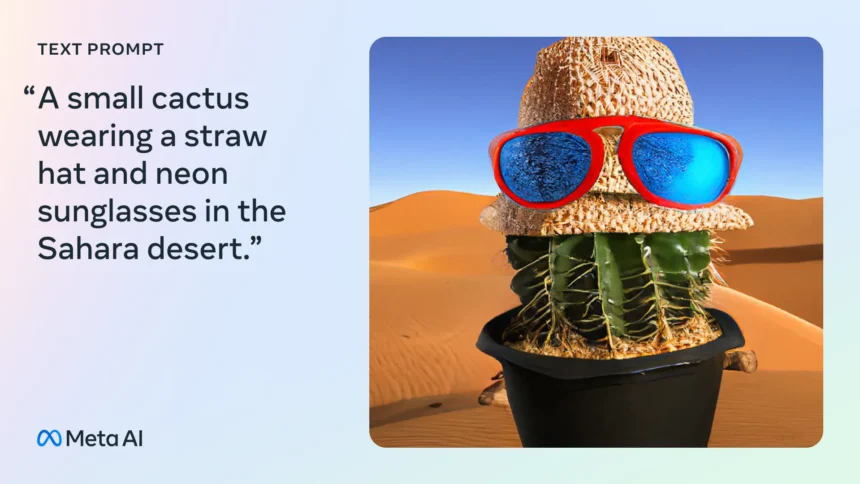

AI researchers at Meta have previewed Chameleon, a new family of models built for multimodal generative AI. Meta has not yet released the models, but the team’s initial experiments report state-of-the-art performance on tasks such as image captioning and visual question answering, while maintaining strong performance on text-only tasks.

Chameleon’s architecture opens up new possibilities in AI, especially for tasks that require a deep understanding of both visual and written content. Until now, most multimodal foundation models have been built by training separate models for different modalities and then piecing them together, an approach known as “late fusion.” While effective, late fusion limits how well a model can integrate information across modalities and makes it difficult to generate interleaved sequences of images and text.

Chameleon introduces an “early-fusion token-based mixed-modal” architecture, designed to learn from an interleaved mix of images, text, and other modalities from the ground up. This design helps the model generate new mixed-modal sequences while keeping performance efficient. Early fusion makes the model harder to train and scale, but the team has applied a number of architectural modifications and training techniques to overcome these challenges.
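To make the “early-fusion token-based” idea more concrete, here is a minimal Python sketch of what it could mean to place text and images in one shared token sequence. This is not Meta’s implementation: the vocabulary sizes, the `BOI`/`EOI` marker tokens, and both toy tokenizers are assumptions invented for illustration, and a real system would presumably use a learned image tokenizer rather than raw pixel values.

```python
from typing import List, Tuple, Union

# Hypothetical sizes, chosen only for illustration.
TEXT_VOCAB_SIZE = 1000
IMAGE_CODEBOOK_SIZE = 256

# Hypothetical marker tokens placed after the text and image id ranges.
BOI = TEXT_VOCAB_SIZE + IMAGE_CODEBOOK_SIZE  # begin-of-image
EOI = BOI + 1                                # end-of-image

Segment = Tuple[str, Union[str, List[int]]]


def tokenize_text(text: str) -> List[int]:
    """Toy text tokenizer: one deterministic id per whitespace-separated word."""
    return [sum(word.encode("utf-8")) % TEXT_VOCAB_SIZE for word in text.split()]


def tokenize_image(pixels: List[int]) -> List[int]:
    """Toy image tokenizer: each pixel value becomes one id, offset past the text ids."""
    return [TEXT_VOCAB_SIZE + (p % IMAGE_CODEBOOK_SIZE) for p in pixels]


def early_fusion_sequence(segments: List[Segment]) -> List[int]:
    """Flatten interleaved text and image segments into a single token sequence."""
    sequence: List[int] = []
    for kind, payload in segments:
        if kind == "text":
            sequence.extend(tokenize_text(payload))
        elif kind == "image":
            sequence.append(BOI)
            sequence.extend(tokenize_image(payload))
            sequence.append(EOI)
        else:
            raise ValueError(f"unknown segment kind: {kind}")
    return sequence


# An interleaved document: text, then an image, then more text.
document: List[Segment] = [
    ("text", "a photo of"),
    ("image", [0, 128, 255]),
    ("text", "a chameleon"),
]
tokens = early_fusion_sequence(document)
print(tokens)
```

Because every modality ends up as ids in one shared vocabulary, a single model can be trained on the whole sequence, in contrast to the late-fusion setup where separately trained components are joined afterward.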

TF Summary: What’s Next

Meta’s new models are on the way, and they handle diverse tasks in both text-only and multimodal settings. Many details about Chameleon are still unknown, but its release could mark the start of a new era in AI. Keep an eye out for further developments.