Kids’ online safety entered a pivotal period in 2025. Lawmakers, platforms, and parents pushed harder after repeated warnings, lawsuits, and public pressure. As 2026 begins, the focus centres on Roblox, app stores, and AI-powered toys. Each plays a direct role in children’s daily digital lives, and each faces tighter scrutiny. The message: platforms serving children need accountability, not excuses.

Concerns around child online safety, AI chat risks, and age verification are no longer on the sidelines. Regulators treat them as core consumer protection issues. Parents echo that urgency. Companies respond with policy changes, new safeguards, and public commitments. The results show progress, friction, and unresolved gaps.

What’s Happening & Why This Matters

Roblox Pushes Age Verification Into the Spotlight

In late 2025, Roblox expanded its safety controls after facing lawsuits and state investigations. Regulators accused the platform of exposing minors to predatory behaviour through open chat features. With nearly 40 per cent of its users under 13, the pressure intensified.

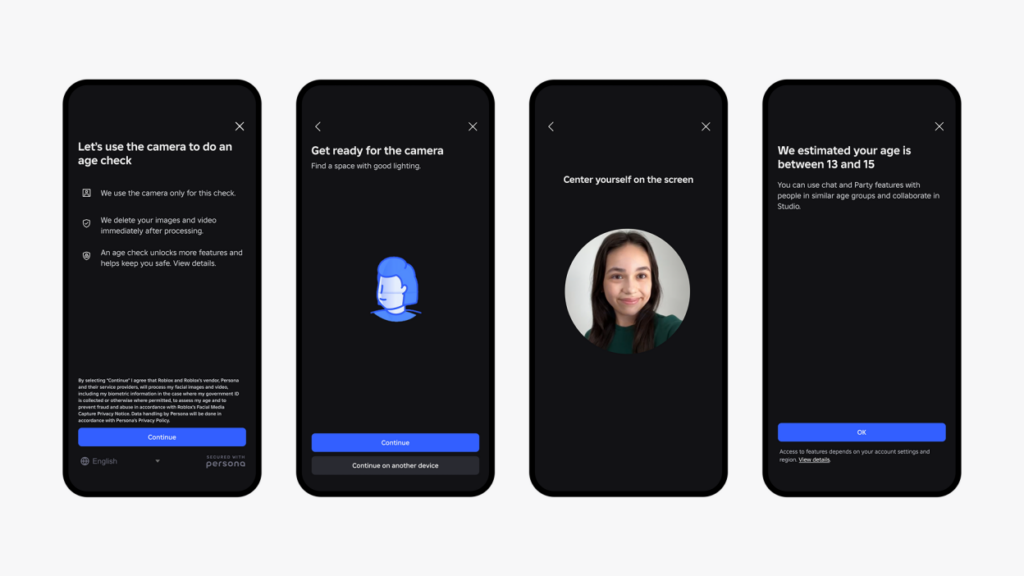

Roblox now requires facial age checks for chat access. Users verify their age through video selfies or ID scans processed by a third-party provider. The system groups users into age bands and restricts communication between bands unless parents approve trusted connections. Roblox describes this as a step toward “age-appropriate experiences.”

The change marks a shift away from self-reported ages toward biometric age checks designed to reduce the risk of abuse. Critics raise privacy concerns, yet regulators emphasise harm prevention. For many parents, the trade-off feels justified.

As one Roblox spokesperson states, “Age estimation adds a stronger layer of protection and reduces unsafe interactions.”

App Stores Face Growing Pressure From States

Attention also turns toward app distribution channels. Lawmakers argue that app stores act as gatekeepers for children’s digital access. That role carries responsibility.

In California, legislators introduced proposals that place new obligations on app store operators such as Apple and Google. The goals centre on clearer age ratings, stronger parental controls, and safety-first default settings for minors. Regulators argue that prevention at the point of download matters more than oversight after an app has already reached a child’s device.

Apple already markets robust parental tools: Screen Time lets parents restrict purchases, app downloads, and social features. Yet lawmakers say the defaults still favour engagement over safety. The proposals call for stricter opt-in requirements, more explicit warnings, and greater transparency about how children’s data is used.

Parents increasingly support these measures. Many admit they struggle to track apps, updates, and in-app chats across devices. App stores now sit at the centre of the debate rather than at its edges.

AI Toys Trigger Legislative Alarm Bells

The most dramatic proposal targets AI-powered toys. In early 2026, California lawmakers introduced a bill proposing a four-year pause on toys with embedded chatbots designed for children. The bill reflects fears about unregulated AI conversations with minors.

Studies cited by lawmakers revealed troubling behaviour. Some AI toys discussed explicit topics, others encouraged emotional dependency, and a few failed basic content filters. Concern escalated after lawsuits linked chatbot conversations to real-world harm involving teens.

California Senator Steve Padilla explains the rationale plainly: “Our children cannot serve as test subjects for experimental AI systems.”

The bill does not ban AI outright. It seeks time: time to define standards, time to test safeguards, and time to protect children before these products scale.

Toymakers push back, arguing that a pause would stall innovation. Consumer advocates counter that safety must come first. The debate reflects a familiar tension: speed versus responsibility.

Bucking the Trends

Across gaming platforms, app stores, and toys, one pattern stands out. Child safety now shapes product design, not just marketing language. Companies act in response to legal pressure rather than on voluntary promises, and states step in where federal rules lag.

Parents drive momentum. Lawsuits accelerate action. Technology firms adjust, sometimes reluctantly. Together, these forces are reshaping how children interact with digital systems.

TF Summary: What’s Next

Kids’ online safety now defines product roadmaps across the tech, gaming, and toy industries. Roblox enforces stricter age verification. App stores face rising demands for default protections. AI toys face a proposed legislative pause until safety standards mature. These changes signal a reset, not a temporary fix.

MY FORECAST: Child-focused technology is entering a compliance-first era. Companies that build safety into design will gain trust. Those that resist will face regulation, lawsuits, and public backlash. Parents and lawmakers no longer accept reactive fixes. They demand prevention, clarity, and accountability, and they want them now.
