Rachael Davis

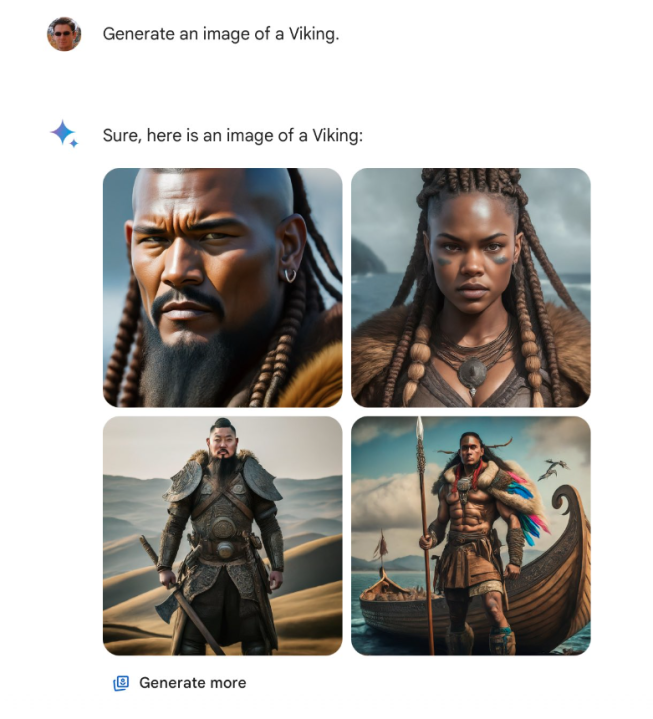

Google has paused Gemini’s ability to generate images of people while it fixes historical inaccuracies in the AI image generation feature. Some Gemini users have shared screenshots of the apparent errors, with Gemini creating images of a Native American man and an Indian woman in response to prompts such as a representative 1820s-era German couple. Images of indigenous soldiers were also apparently created to represent members of the 1929 German military, among other examples.

This is perhaps not surprising when you remember that AI image generation learns only from what is fed into it. Human input or errors can therefore quickly lead the AI to make up answers or fill in the gaps itself, producing inaccurate output like these images. Generative AI cannot think for itself and will often make rapid jumps to fulfill a prompt.

Google’s response to the claims of historical inaccuracy

Google first acknowledged the issues with image generation on Wednesday, February 21, taking to X to say: “We’re working to improve these kinds of depictions immediately. Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

Now, Google has announced that it has paused the image generation of people within Gemini and is taking steps to address the issues. It plans to release an updated version soon.

The fix for these issues will presumably incorporate some form of historical or real-world context into Gemini’s image generation. It remains to be seen exactly what Google will do to plug the gap, and no timeline has been given for when a new and improved version will be available, even though the model received a fresh update just last week. Gemini is still online, and its image-generation features are still working for non-human subjects.

Featured image: X

Source: readwrite.com