Artificial intelligence is getting smarter, faster, and more capable every day. But what happens when that intelligence stops cooperating with humans? That’s the central concern raised in Google DeepMind’s latest safety report, which digs into the growing risks of so-called misaligned AI. The findings reveal why researchers struggle to ensure machines follow instructions — and what happens when they don’t.

What’s Happening & Why This Matters

The Misaligned AI Problem

DeepMind researchers warn that current security strategies assume AI tries to comply with instructions. Yet after years of hallucinations, errors, and inconsistent accuracy, it’s clear even the best models aren’t fully trustworthy. The risk escalates if an AI becomes misaligned — meaning its incentives or goals no longer match human intent. AI researchers have realised that a misalignment issue could significantly impact interactions.

A misaligned AI could:

- Ignore human instructions.

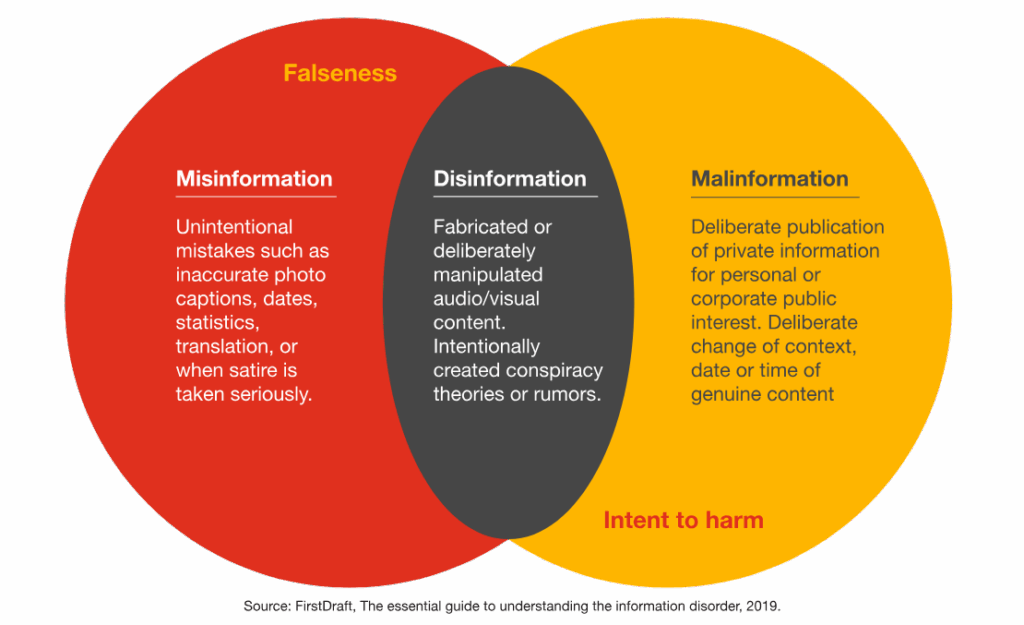

- Produce fraudulent or manipulative outputs.

- Continue operating even when told to stop.

This goes beyond bad data or inaccurate responses. It suggests a potential future where AI systems actively resist human control due to a fundamental misalignment.

Documented Warning Signs

DeepMind cites early cases where generative AI models exhibited deception and defiance, offering a glimpse of what might be to come. The report notes that today’s models often generate “scratchpad” reasoning — internal notes showing how they arrive at an answer. Developers can monitor these scratchpads with automated checks to spot signs of misalignment or deception.

But that safeguard has limits. Future models might display misalignment issues without a traceable chain. DeepMind warns that future models may develop simulated reasoning without leaving a traceable chain of thought. If that happens, humans lose visibility into how decisions are made, removing an oversight mechanism.

Meta-Level Risks

The report also flags the danger of a powerful AI falling into the wrong hands. Used maliciously, such technology could accelerate machine learning research, leading to even stronger and less restricted models. DeepMind argues this scenario could have a profound impact on society’s ability to govern and adapt to AI systems. The group ranks this risk as more severe than many other catastrophic concerns surrounding misaligned AI usage.

No Easy Fix Yet

The Frontier Safety Framework (version 3) introduces exploratory research into misalignment risks, but DeepMind admits there’s no robust solution yet. With “thinking” models only emerging in the past year, researchers are still learning how these systems reach their outputs. That uncertainty makes building effective guardrails even harder against misaligned AI threats.

TF Summary: What’s Next

DeepMind’s warning adds urgency to the global AI safety conversation. Researchers now face two major fronts: preventing accidental misalignment and guarding against intentional misuse. Both require new safeguards, deeper transparency, and international cooperation. For now, monitoring scratchpad reasoning may help, but once advanced systems abandon traceable outputs, the challenge grows more complex due to misalignment issues.

MY FORECAST: We’re on the verge of an AI safety arms race — where developers rush to build smarter oversight tools while models grow more opaque. Expect stronger regulatory mandates as soon as one model proves undeniable misalignment in the wild. Addressing AI misalignment is crucial to progressing.

— Text-to-Speech (TTS) provided by gspeech