Google DeepMind has unveiled its most advanced robotics work yet: a pair of AI models designed to let robots think before acting. The release is a step toward autonomous machines that can handle complex, multi-step tasks rather than only executing pre-programmed actions.

What’s Happening & Why This Matters

DeepMind introduced two connected models, Gemini Robotics-ER 1.5 and Gemini Robotics 1.5, that work together to make robot behaviour more flexible. Imagine asking a robot to sort laundry into whites and colours. Traditionally, you’d need a highly specialised system built for that exact task. Now, ER 1.5 (the “ER” stands for embodied reasoning) interprets your request, analyses images of the environment, and can even call tools such as Google Search to gather extra information. It then generates step-by-step natural-language instructions for completing the task.

The second model, Gemini Robotics 1.5, is a vision-language-action (VLA) model: it takes those instructions and translates them into precise movements, using real-time visual input to guide the robot. What sets it apart is its ability to “think” through each step. Instead of mechanically following commands, it reasons about the current situation before moving, much like a human weighing options before acting.
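The division of labour described above (one model plans in natural language, the other checks each step against what it currently sees before moving) can be sketched as a toy Python program. Everything here is hypothetical: `Scene`, `plan`, `think`, `act`, and `run` are illustrative names, the "camera view" is just a dictionary, and nothing calls a real Gemini Robotics API. It shows only the planner/executor pattern, not how DeepMind's models work internally.

```python
import re
from dataclasses import dataclass, field

# Hypothetical step format produced by the toy planner, e.g. "put red sock in the colours bin".
STEP_PATTERN = re.compile(r"put (?P<item>.+) in the (?P<bin>.+)")


@dataclass
class Scene:
    """Toy stand-in for camera input: item name -> colour label, plus bin contents."""
    items: dict[str, str]
    bins: dict[str, list[str]] = field(
        default_factory=lambda: {"whites bin": [], "colours bin": []}
    )


def plan(request: str, scene: Scene) -> list[str]:
    """Hypothetical planner (the ER 1.5 role): one natural-language step per visible item.
    This toy version ignores the request's wording and always sorts by colour."""
    steps = []
    for item, colour in scene.items.items():
        target = "whites bin" if colour == "white" else "colours bin"
        steps.append(f"put {item} in the {target}")
    return steps


def think(step: str, scene: Scene) -> tuple[bool, str]:
    """Hypothetical pre-action check ("think before acting"): is the step still valid right now?"""
    match = STEP_PATTERN.fullmatch(step)
    if match is None:
        return False, f"cannot parse step: {step!r}"
    item, target = match["item"], match["bin"]
    if item not in scene.items:
        return False, f"{item} is no longer on the table"
    if target not in scene.bins:
        return False, f"unknown destination {target!r}"
    return True, f"{item} is visible and {target} exists"


def act(step: str, scene: Scene) -> None:
    """Hypothetical executor (the Robotics 1.5 role): apply a validated step to the toy scene."""
    match = STEP_PATTERN.fullmatch(step)
    assert match is not None, "act() should only receive steps that passed think()"
    scene.items.pop(match["item"])
    scene.bins[match["bin"]].append(match["item"])


def run(request: str, scene: Scene) -> list[str]:
    """Plan once, then check and execute each step, logging what happened."""
    log = []
    for step in plan(request, scene):
        ok, reason = think(step, scene)
        log.append(f"{'do' if ok else 'skip'}: {step} ({reason})")
        if ok:
            act(step, scene)
    return log


if __name__ == "__main__":
    laundry = Scene(items={"white shirt": "white", "red sock": "red", "blue jeans": "blue"})
    for line in run("sort the laundry into whites and colours", laundry):
        print(line)
    print(laundry.bins)
```

Keeping the check (`think`) separate from the motion (`act`) means a stale or malformed instruction is skipped with a logged reason instead of being executed blindly, which is the behaviour the article attributes to the "think before acting" step.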

“One of the major advancements that we’ve made with 1.5 in the VLA is its ability to think before it acts,” explained Kanishka Rao, a senior researcher at DeepMind.

This capability loosely mirrors human intuition, letting robots approach tasks through reasoning rather than rigid programming. It is a crucial step toward agentic AI, in which machines solve problems autonomously.

Built on Gemini Foundation Models

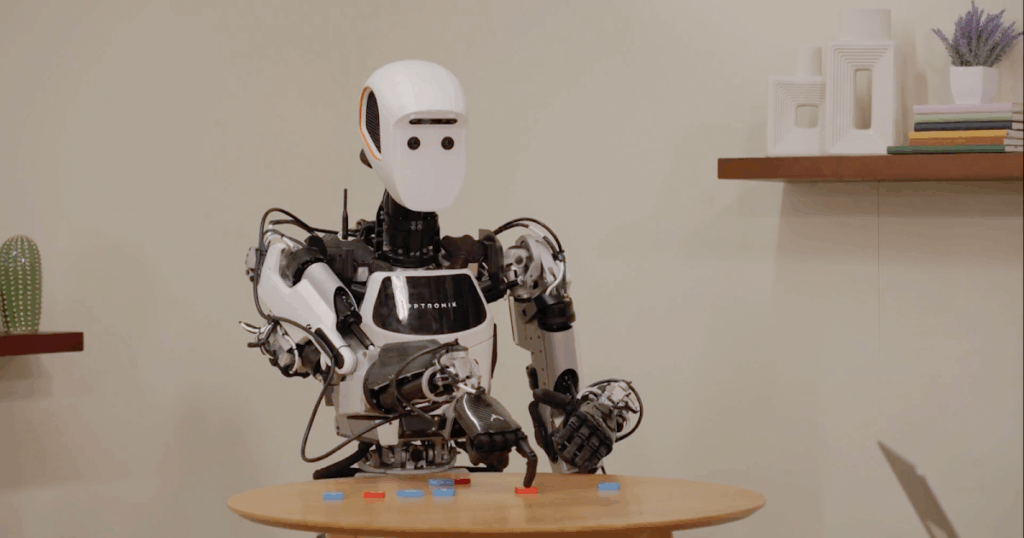

Both ER 1.5 and Robotics 1.5 are built on Google’s Gemini foundation models and fine-tuned on specialised datasets for physical environments. This tuning lets the systems adapt to different hardware, such as the two-armed Aloha 2 robot or the Apollo humanoid.

Previously, AI researchers typically needed a separate model for every robot; Gemini Robotics 1.5 instead allows skills to transfer between machines. For example, skills learned with Aloha 2’s grippers can carry over to Apollo’s intricate humanoid hands without extra adjustments. This kind of cross-embodiment learning can significantly reduce development time and cost.

Bridging Research and Real-World Applications

These advances are still in early testing. Gemini Robotics 1.5 is currently limited to trusted testers, primarily within Google’s own research ecosystem. The thinking ER model, however, is now available through Google AI Studio, so developers can use it to generate their own robot instructions for experimental projects.

The approach opens the door to a robotics ecosystem in which developers and startups can experiment without building models from scratch. By integrating natural language processing, visual perception, and decision-making, DeepMind is laying the foundation for smarter, more adaptive robots that could serve homes, factories, and even hazardous environments.

The long-term vision goes beyond sorting laundry. Think autonomous construction robots, advanced healthcare assistants, and machines that navigate disaster zones with minimal supervision.

TF Summary: What’s Next

DeepMind’s Gemini Robotics initiative brings society closer to machines that don’t just act but reason. Although it will likely take years for these models to power consumer-ready robots, the ER model’s rollout via Google AI Studio gives researchers and developers the tools to start exploring practical uses now. The ultimate goal is robotic systems that learn and adapt across tasks and hardware, making robotics more scalable and accessible worldwide.

MY FORECAST: Within three to five years, expect early commercial robots using Robotics-ER technology to enter specialised industries (e.g., manufacturing and logistics), with household robots still a decade away.
