The rise of AI-generated deepfakes is reshaping the world of digital fraud, making scams more sophisticated, convincing, and financially devastating. Fraudsters are leveraging advanced artificial intelligence to create realistic fake celebrity endorsements, phishing campaigns, and fraudulent investment schemes, stealing millions from unsuspecting victims. As Hollywood celebrities, lawmakers, and cybersecurity experts scramble to combat this growing threat, tech platforms struggle to keep deepfake-driven fraud from spiraling out of control.

What’s Happening & Why This Matters

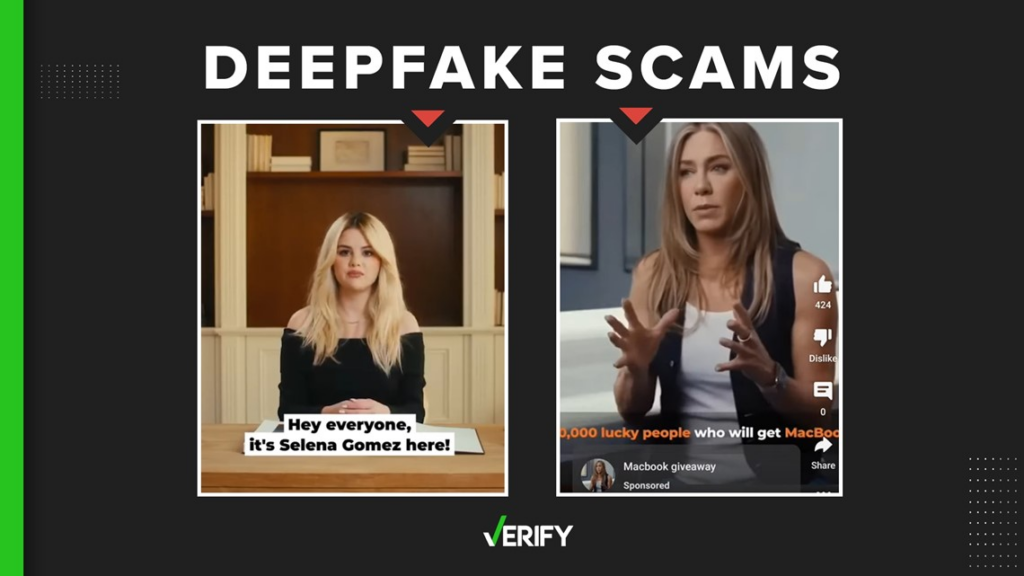

AI-generated fraud is spreading rapidly. Scammers now use deepfake videos, cloned voices, and manipulated images to fabricate celebrity endorsements, tricking users into making bogus investments or handing over personal information. High-profile figures, including Steve Harvey, Scarlett Johansson, and Brad Pitt, have been impersonated in fraudulent AI-generated ads pushing deceptive crypto investment schemes and get-rich-quick scams. In one of the most alarming cases, a woman in France lost $850,000 after falling for an AI-generated Brad Pitt impersonation.

Fraudsters run these scams on major online platforms, including Facebook, YouTube, and Google, where AI-generated voiceovers and fake testimonials make their schemes seem more credible. Investigators uncovered a call center operation in the country of Georgia that stole more than $35 million from victims across Europe, the UK, and Canada by promoting deepfake ads featuring well-known public figures.

The impact of deepfake-driven fraud extends beyond fake investment scams. AI-assisted phishing has become a growing cybersecurity problem, with attackers using fabricated videos and voice clones to impersonate CEOs, government officials, and trusted institutions. A recent attack used an AI deepfake of YouTube CEO Neal Mohan to trick content creators into clicking malicious links disguised as YouTube monetization updates. Several victims lost access to their accounts, emails, and financial data, showing that deepfake fraud has moved beyond social media scams and now threatens corporate and institutional security.

The response from governments and Hollywood celebrities has been swift. The No Fakes Act, a bipartisan bill, seeks to impose strict regulations on the use of AI-generated deepfake content, holding tech platforms and content creators accountable. The bill proposes $5,000 fines per violation, which could quickly add up to millions in penalties for platforms that fail to remove fraudulent deepfake content; at that rate, 1,000 violations would total $5 million. Celebrities including Steve Harvey and Scarlett Johansson have publicly condemned the rise of deepfake abuse, urging lawmakers to act quickly before scammers perfect their methods.

Despite these efforts, experts warn that regulation alone will not stop deepfake fraud. AI-powered scams evolve faster than governments can legislate, so stopping them will also require a more aggressive approach to AI detection and digital security. Companies such as Vermillio AI are developing tools like TraceID, which uses AI fingerprinting technology to track and remove manipulated content. While promising, these tools are not yet widely available, leaving millions of users vulnerable to AI-generated fraud.

With the No Fakes Act gaining momentum and cybersecurity firms racing to counteract deepfake-driven fraud, the fight against AI-powered scams is only beginning. Social media giants must implement stronger detection measures, while governments and tech developers must collaborate to outpace fraudsters who continue refining their AI-driven deception techniques.

TF Summary: What’s Next

AI-driven fraud is evolving faster than regulators can respond, making deepfake scams one of the fastest-growing cyber threats. Tech companies are struggling to deploy effective AI detection, and governments are pushing for stricter accountability measures, while scammers continue perfecting AI-generated deception. Expect an ongoing battle between digital fraudsters and cybersecurity experts, one that will shape the future of AI regulation, online safety, and fraud prevention.
