When Battlefield Pragmatism Collides With Silicon Valley Ethics

Artificial intelligence now sits inside the war room. Not as science fiction. Not as a distant research project. As a working tool. Reports that the U.S. military used Claude AI, developed by Anthropic, during an operation targeting Venezuela have ignited a fierce debate about how democratic nations should wield machine intelligence in combat.

At the center lies a philosophical conflict. The Pentagon prioritizes victory, speed, and operational advantage. AI companies emphasize guardrails, ethics, and long-term safety. When those priorities diverge, friction sparks. That friction now threatens contracts, alliances, and perhaps the shape of military AI adoption itself.

The controversy goes beyond one operation. It raises a deeper question: Can tools designed to protect humanity also help conduct war? Or does using them in conflict inevitably reshape their purpose? The answer matters because modern militaries increasingly depend on software as much as soldiers.

What’s Happening & Why This Matters

Claude Reportedly Used In A Venezuela Operation

According to published reports, the U.S. military used Anthropic’s Claude AI model during a classified operation involving Venezuela and President Nicolás Maduro. The operation allegedly included strikes in Caracas and resulted in significant casualties, though details remain disputed.

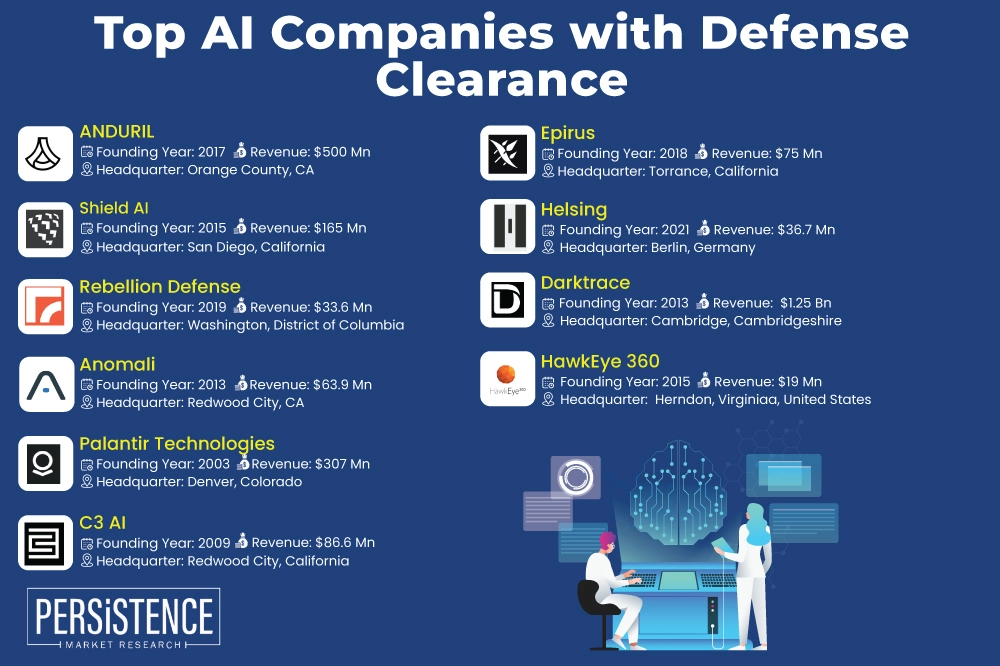

Officials have not publicly explained how the AI was deployed. Claude’s capabilities range from analyzing documents to processing data and potentially supporting autonomous systems. Sources suggest the system entered the mission through a partnership with Palantir Technologies, a long-time defense contractor specializing in intelligence platforms.

Anthropic declines to confirm operational use. The U.S. Department of Defense offers no public comment. In classified environments, silence often functions as a statement of its own.

What is clear is that militaries worldwide increasingly integrate AI into targeting, logistics, surveillance, and decision support. The U.S. joins other nations experimenting with algorithmic warfare, from autonomous drones to predictive intelligence systems.

AI Companies’ Ethical Lines

Anthropic’s policies prohibit using Claude for violent activity, weapons development, or surveillance abuses. The company positions itself as a safety-focused alternative to more permissive models. Its leadership frequently calls for regulation and safeguards around powerful AI systems.

CEO Dario Amodei has warned about risks associated with autonomous weapons and unchecked surveillance. That caution appears to clash directly with defense requirements. From the military’s perspective, a tool that refuses to participate in combat scenarios is of limited operational use.

The tension reflects an industry divide. Some technology firms embrace defense partnerships. Others adopt restrictive policies to avoid contributing to lethal operations. The debate echoes earlier controversies over nuclear research, surveillance programs, and cybersecurity tools.

Pentagon Pushback Intensifies

Reports indicate the Pentagon may reconsider its relationship with Anthropic due to these limitations. Defense officials insist partners must support “all lawful purposes” necessary for national security.

A spokesperson stresses that warfighters need tools capable of winning conflicts, not software that declines mission-critical tasks. From a military perspective, reliability matters more than philosophical nuance.

The Department of Defense is already exploring alternatives. It works with multiple AI providers, including Google, OpenAI, and xAI, and some of these systems operate on classified networks tailored to defense needs.

Switching vendors is possible. However, replacing entrenched systems requires time, testing, and integration work. Defense procurement resembles turning a battleship, not a speedboat.

How AI Actually Helps Modern Militaries

Despite dystopian imagery, most military AI applications involve analysis rather than autonomous killing. Systems sort intelligence data, identify patterns, track logistics, and support planning. Humans typically retain decision authority.

Claude reportedly assists with tasks such as document processing, trend identification, and complex analysis. These functions can accelerate operations dramatically. Information that once took analysts weeks to digest can now be summarized in minutes.

Speed matters in warfare. Decisions delayed often equal opportunities lost. AI enables the processing of vast data streams in real time, potentially improving effectiveness and safety by reducing uncertainty.

Critics counter that faster decisions can also mean faster mistakes. Automated recommendations may amplify bias or flawed assumptions. When lives hang in the balance, errors carry irreversible consequences.

The Rise Of Algorithmic Warfare

The Venezuela episode illustrates a broader transformation. Wars increasingly depend on software ecosystems. Drones rely on computer vision. Cyber operations run on automated scripts. Intelligence analysis uses machine learning.

Other countries pursue similar capabilities. Israel deploys AI to expand targeting databases. Russia experiments with autonomous systems. China invests heavily in military AI research. The technological arms race accelerates quietly.

Unlike nuclear weapons, AI spreads through code rather than uranium. That makes control difficult. Once developed, models can proliferate through partnerships, leaks, or independent replication.

Some analysts argue that refusing to develop military AI does not prevent others from doing so. That logic drives nations toward adoption even when ethical concerns remain unresolved.

Democratic Values Under Pressure

Supporters of AI restrictions argue that ethical limits distinguish democratic societies from authoritarian regimes. If Western companies remove safeguards, they risk enabling surveillance states or indiscriminate warfare.

Opponents argue that excessive constraints could handicap democracies against rivals willing to use any available tool. The tension resembles a classic moral dilemma: adherence to principles versus survival in a competitive world.

History offers precedents. Debates over chemical weapons, nuclear deterrence, and cyber espionage all revolve around similar questions. Each technology forces societies to decide how much power they are willing to wield — and under what conditions.

The Civilian Spillover Effect

Military use of AI influences civilian technology. Defense funding often accelerates innovation that later reaches commercial markets. GPS, the internet, and many computing breakthroughs originated in defense programs.

If AI development is tightly coupled with military priorities, future consumer tools may reflect those origins. Conversely, strict separation between civilian and military AI could slow progress or fragment ecosystems.

Public perception also matters. Citizens may distrust technologies associated with warfare or surveillance. Transparency is crucial to maintaining legitimacy.

Contracts And Consequences

Anthropic reportedly holds a contract worth hundreds of millions of dollars with the Pentagon. That agreement is now uncertain, as officials weigh whether the company’s limitations make it unsuitable for long-term defense use.

Replacing a partner involves more than signing paperwork. Systems must integrate securely, comply with classified standards, and operate reliably under stress. New vendors must prove their tools can handle real-world missions.

Meanwhile, AI companies face reputational risks. Too much military involvement may alienate customers. Too little may forfeit lucrative contracts and strategic influence.

The situation resembles a delicate negotiation among ethics, economics, and national security.

TF Summary: What’s Next

The reported use of Claude AI in the Venezuela operation exposes the growing friction between military necessity and corporate ethics. Defense agencies want flexible tools that can support combat operations. AI companies want safeguards that prevent misuse. Neither side can fully satisfy the other without compromise.

MY FORECAST: Expect continued negotiations rather than abrupt separation. The Pentagon will diversify suppliers while pushing partners to loosen restrictions. AI firms will refine policies to balance responsibility with relevance. Over time, a new framework for military AI collaboration will likely emerge — one shaped not only by technology, but by the values societies choose to defend.
