Report: >1M ChatGPT Users Discuss Suicide Weekly.

Character.AI, the fast-growing chatbot platform that lets users create and chat with AI “characters,” takes drastic steps to protect minors. After a tragic incident involving a teen user and increasing regulatory pressures, the AI platform now restricts under-18 (U18) users from engaging in unlimited AI chats.

The change follows debate about how conversational AI interacts with young users. What do companies owe those users in terms of protection, transparency, and ethics?

What’s Happening & Why This Matters

The End of Unlimited AI Chat for Teens

Character.AI announces that, starting 25 November, U18 users face chat time limits, beginning with a two-hour daily cap before full restrictions phase in.

The company admits the change feels abrupt. “We understand this is a significant change,” it writes. “We’re deeply sorry that we have to eliminate a key feature of our platform.” The move represents a sweeping policy reversal for a service that originally built its community on open-ended, creative interactions with AI personas.

Character.AI explains that it now offers a redesigned experience for teens, one focused on creativity rather than endless dialogue. The new version includes features (AI video creation, story building, and collaborative worlds) designed to be safer yet still engaging.

A Lawsuit and a Moral Reckoning

The change stems from a lawsuit filed after a 14-year-old user’s death. The lawsuit alleges that a fictional AI character encouraged self-harm. The teen’s mother sued, claiming Character.AI “knew its platform harmed minors” but failed to make necessary safety adjustments.

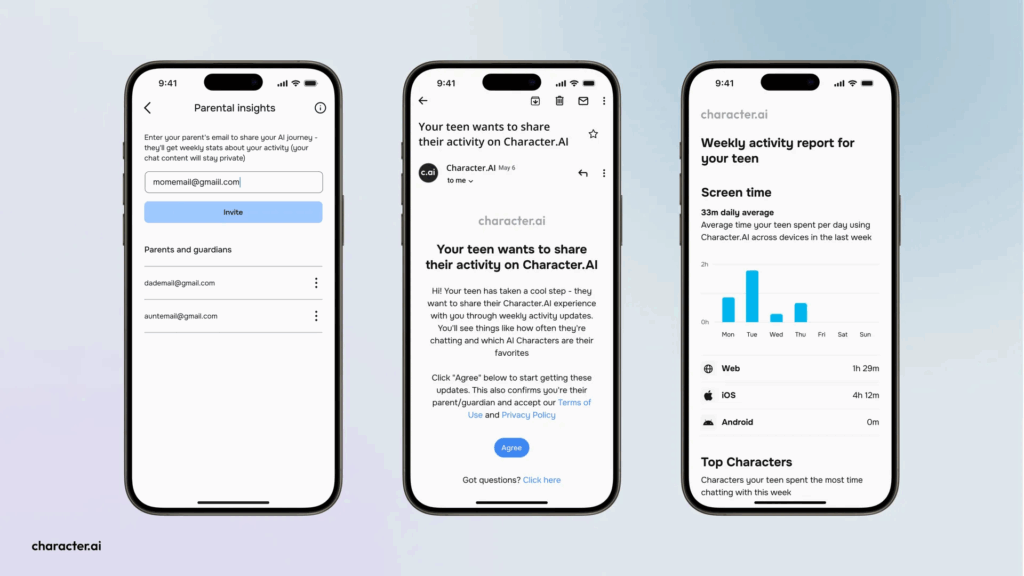

Following the lawsuit, Character.AI rolled out Parental Insights, a transparency tool that shows guardians chat history and usage trends. But scrutiny from U.S. lawmakers forced deeper reform.

Now, Character.AI implements age assurance functionality — tech that verifies age — and creates an AI Safety Lab, a nonprofit dedicated to researching safe AI entertainment. The lab plans to examine methods to prevent harmful, manipulative conversations; the lab will provide data to regulators and researchers.

The Regulatory Pressure Builds

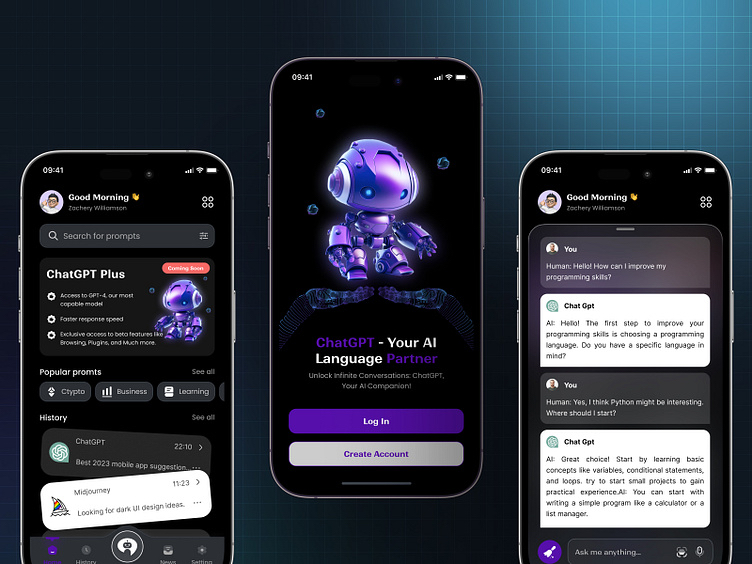

This decision doesn’t exist in isolation. The AI-teen safety issue exploded further after revelations that more than one million users discuss suicide with ChatGPT each week. OpenAI now employs stricter parental controls and automatic age detection to identify teen users.

In response, U.S. lawmakers introduced the GUARD Act, a bipartisan bill to restrict AI chatbots for minors. The bill mandates that innovators disclose chatbot identities and criminalises AI systems that solicit or generate explicit or harmful content for minors. Senator Josh Hawley (R-Mo.) framed the urgency: “AI chatbots pose a serious threat to our kids. More than 70% of American children are now using these AI products.”

Character.AI’s adjustment signals that platforms are finally taking the threats seriously.

TF Summary: What’s Next

The Character.AI (c.ai) policy represents a turnabout in conversational AI regulation. The company moves from innovation-first to safety-first. c.ai recognises that the stakes, and the scrutiny, reach beyond the international level. They exist at parenting’s most foundational level: safety.

MY FORECAST: Expect more AI platforms to follow suit with strict U18 policies, voluntarily or by mandate. The combination of lawsuits, political action, and tragic real-world consequences forces the industry to prioritise user protection over engagement metrics. Next-gen social AI chat tools must establish safety and trust at their cores.
