California Tightens Grip on AI’s Wild West

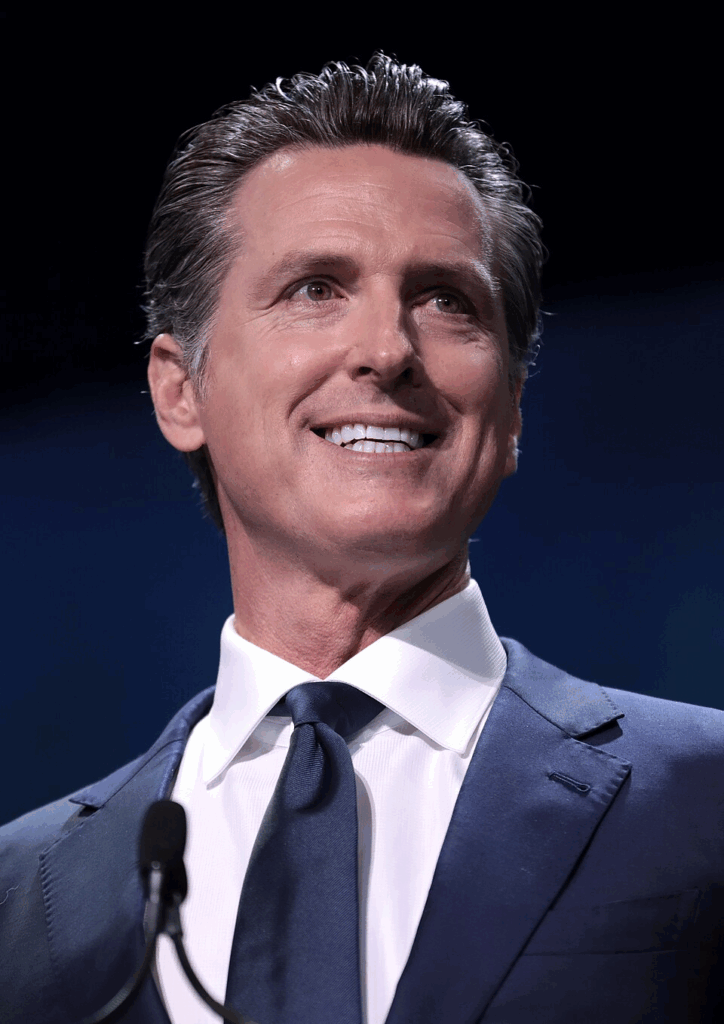

California just became the first U.S. state to enact sweeping new rules for AI governance, tackling both AI company accountability and child safety online. Governor Gavin Newsom signed two new laws, the Transparency in Frontier Artificial Intelligence Act (S.B. 53) and a companion chatbot bill (S.B. 243), that aim to make AI development and interaction safer for users of all ages.

The first law targets AI corporations. It mandates more transparency from companies like OpenAI, Anthropic, and Google DeepMind while trying not to stifle innovation. The second zeroes in on AI companions and deepfake creators, demanding stricter measures to protect children and curb harmful content.

While both laws represent progress, critics say California stopped short of full regulation, favouring disclosure and reporting over enforcement.

What’s Happening & Why This Matters

Transparency Over Testing

Governor Newsom signed the Transparency in Frontier Artificial Intelligence Act (S.B. 53) a year after vetoing a tougher predecessor, S.B. 1047, which would have required safety testing and “kill switches” for AI systems. S.B. 53 instead stresses corporate disclosure.

AI companies earning over $500 million annually must now publish their safety protocols, explain how they incorporate national and international standards, and report any “critical safety incidents” to the California Office of Emergency Services.

The law defines “catastrophic risk” as a risk that an AI model contributes to a single incident causing the death or serious injury of more than 50 people, or more than $1 billion in damages, through autonomous actions or misuse of the system. Companies that fail to comply face civil fines of up to $1 million per violation.

Newsom praised the new approach, saying, “California has proven that we can establish regulations to protect our communities while ensuring that the growing AI industry continues to thrive.”

The shift favours transparency over direct intervention, signalling a new model for AI oversight that tries to balance innovation with responsibility.

AI Companions Face Oversight

California is also cracking down on AI companion bots, chatbots that simulate empathy and companionship. The new companion chatbot law (S.B. 243), effective 1 January 2026, requires platforms like ChatGPT, Character.AI, and Grok to introduce clear mental health protocols.

The systems must detect suicidal ideation and connect users to crisis prevention resources. Platforms must share statistical data about these interventions with the Department of Public Health and make that data public.

The law also bans AI companions from marketing themselves as therapists and introduces “break reminders” for minors. It further blocks sexually explicit content from reaching underage users.

“American families are in a battle with AI,” said Senator Steve Padilla, who sponsored the law. “This legislation puts real protections into place and lays the groundwork for future safeguards as the technology evolves.”

Deepfake Crackdown

The second half of the law targets AI-generated pornography and nonconsensual deepfakes. Under the new provisions, victims — including minors — can seek up to $250,000 in damages from anyone who knowingly distributes fake explicit material.

Previously, damages ranged from $1,500 to $150,000. This sharp increase underscores California’s intent to protect victims and deter malicious use of generative AI.

TF Summary: What’s Next

California’s dual AI laws mark a pivotal moment in U.S. tech regulation. Expect other states to follow its model, particularly on transparency, user protection, and mental health safeguards. However, without strong enforcement, large AI firms will still effectively shape the rules through self-reporting.

MY FORECAST: If the state strengthens oversight and introduces independent audits, California could set the global standard for ethical AI governance. The question remains: can transparency alone prevent the next AI crisis?