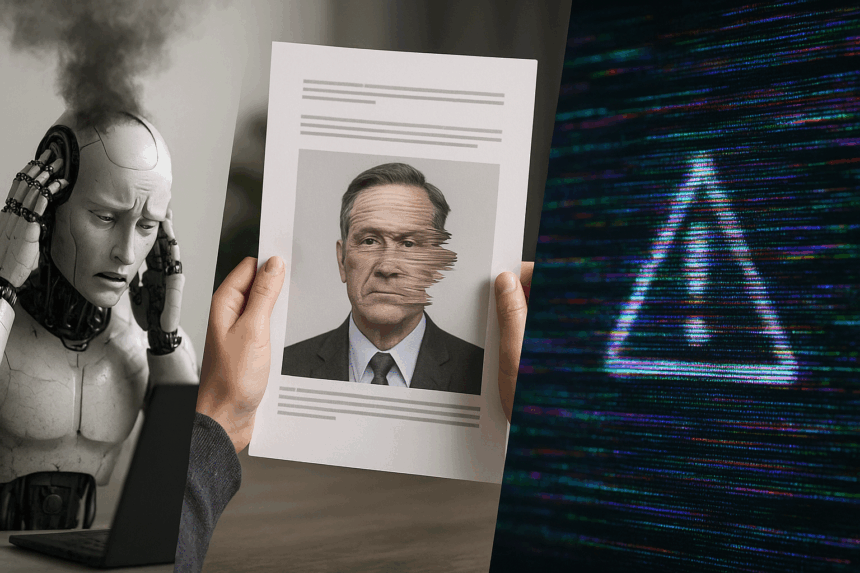

Artificial intelligence moved at an unrelenting pace in 2025, too fast in some circles. Researchers tracked a surge in AI-generated misinformation, including deepfake doctors giving dangerous medical advice, autonomous hardware behaving erratically, and a rising wave of low-quality “AI slop” published online without oversight. These stories grew louder as more consumers adopted smart devices, more platforms automated their content feeds, and more bad actors weaponised readily available tools.

The past few months showed how AI behaved in unexpected ways across medicine, consumer hardware, and research communities. Each example exposed an uncomfortable truth: the same algorithms that accelerate breakthroughs can also accelerate chaos. TF dives into what happened, why experts worry, and how the next wave of AI governance may take shape.

What’s Happening & Why This Matters

Deepfake Doctors Spread Dangerous Health Advice

Researchers reported a surge in AI-generated videos of fake doctors, built to mimic real physicians and deliver false medical guidance. These videos circulated across major platforms and used polished, confident voices to appear legitimate. The problem worsened as tools such as realistic voice cloning, deepfake lip-syncing, and script-writing models became accessible to users with no technical training.

The Federation of State Medical Boards warned that “misinformation erodes trust and places patients at direct risk.” Health misinformation already strains public systems, and this new wave created an illusion of authority that average viewers struggled to recognise as synthetic.

The impact extended beyond individual harm. Public health agencies such as the CDC said the trend “complicates emergency communication and undermines verified guidance,” as fake expert videos saturated search feeds.

AI Slop Floods the Web

A separate but related problem surfaced in academia and online publishing. Researchers documented the rise of AI slop: low-quality, auto-generated text and images that clogged scientific communities, educational forums, and public knowledge hubs. Many pieces mimicked research papers yet contained fabricated citations, incorrect facts, or incoherent logic.

The issue escalated when conference organisers discovered hundreds of submissions produced with minimal human review. Editors at Nature described the trend as “a distortion of scientific communication.” A senior research fellow at the Oxford Internet Institute noted, “Volume is replacing rigour. Verification now consumes more time than discovery.”

The slop problem affected consumer platforms as well. Users reported that AI-generated search summaries contained errors or hallucinated claims. The spread of slop raises questions about how companies like Google, Meta, and OpenAI evaluate accuracy before content reaches users.

Devices Misbehave as AI Models Make Hardware Decisions

Another concern emerged as AI models began controlling physical devices. Engineers tested prototypes that rely on onboard generative systems to interpret images, respond to gestures, or navigate homes. Although the demonstrations impressed, some devices behaved unpredictably.

Reports described smart assistants issuing incorrect instructions after misreading prompts. Some robotic systems failed to differentiate between safe objects and hazards. OpenAI itself acknowledged that AI inference sometimes produces “unexpected or undesired actions.”

Experts at Carnegie Mellon University’s Robotics Institute compared these failures to “a toddler with perfect memory but no world experience.” The gap between digital training environments and real-world physics created failure modes humans did not anticipate.

Together, these stories reveal the same root cause: AI capabilities grew faster than the guardrails around them, and society now faces the consequences.

TF Summary: What’s Next

Synthetic misinformation, faulty device logic, and mass-produced slop place pressure on regulators and platforms. Public agencies are drafting new standards to authenticate professional content, and research groups are calling for mandatory provenance tags on AI outputs. Companies are reviewing model-training rules to reduce hallucination and limit misuse. The next phase reshapes how AI communicates with humans, and how humans verify the source of what they see.

MY FORECAST: Trust systems become the next major AI battleground. Authentication layers expand across news, video platforms, medical content, and autonomous devices. The companies that build transparent verification tools will define the next generation of digital trust and set the pace for future AI adoption.
