Meta’s New AI Thinks Beyond English

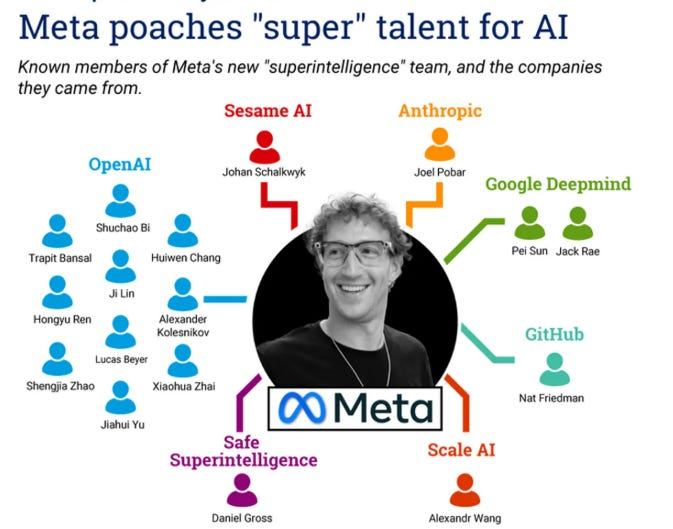

Meta is teaching its artificial intelligence to listen to the world. Its newly formed Superintelligence Labs has launched a speech-recognition model whose coverage includes 500 lesser-known, or “low-resourced,” languages that no AI system had transcribed before. It is one of the most ambitious multilingual AI projects ever attempted.

The technology, called Omnilingual Automatic Speech Recognition (ASR), is open-source and available on Hugging Face. Meta says it can recognise over 1,600 spoken languages, including those with little to no online representation, like Hwana (Nigeria), Rotokas (Papua New Guinea), and Güilá Zapotec (Mexico).

The goal is bold: reduce the digital divide by making speech technology accessible to everyone, no matter how obscure their language. But even Meta admits the system isn’t perfect — at least not yet.

“This is experimental software,” Meta says. “While we strive for accuracy, transcriptions are not perfect. You should always double-check the outputs.”

What’s Happening & Why This Matters

Meta’s Push for Global Language Equality

For years, Big Tech prioritised dominant global languages such as English, Mandarin, Spanish, and French, leaving thousands of local languages and dialects without proper digital tools. Meta’s Superintelligence Labs wants to change that.

The company’s Omnilingual ASR model can pick up a new language from only a few recorded examples. It is built on Omnilingual wav2vec 2.0, a self-supervised speech encoder that learns speech patterns from untranscribed audio, so it needs far less labelled training data than conventional speech-recognition systems.

Meta also released a supporting dataset called the Omnilingual ASR Corpus, a collection of transcribed speech in 350 underserved languages. Speakers of a language the system does not yet support can supply a few short audio clips paired with their transcriptions. The model uses these samples as examples to transcribe new speech in that language, without having to be retrained from scratch.

This approach could widen access for billions of people who speak non-dominant languages, providing new digital tools for communication, education, and commerce.

A Digital Bridge for the Unconnected

Meta’s announcement ties directly into its broader push for digital inclusion. Beyond AI models, the company is investing in infrastructure through Project Waterworth, a planned subsea cable system that Meta says will be the world’s longest. It is designed to connect regions across Africa, the Middle East, and Asia, improving bandwidth and enabling AI access in areas that currently lack high-speed connectivity.

“We see this as part of a larger mission to bring more voices online,” a Meta spokesperson said. “Language is the bridge, not the barrier.”

If successful, the system could create a universal transcription service, turning spoken words into text across thousands of cultural contexts. However, the real test lies in whether native speakers trust and use these tools — especially in regions where internet penetration remains low.

Meta vs. Google: The Global Language Race

Google currently leads global translation with Google Translate, which in 2022 began using zero-shot machine translation to add new languages: the system learns to translate into a language from monolingual text alone, without ever seeing translated examples for it.

However, Google’s approach is more human-centred, relying on professional translators and native speakers before adding new languages. Meta, on the other hand, leans heavily on AI scalability, using vast datasets to approximate human-level understanding faster.

Both companies share the same goal: giving every human a voice online. But their paths differ — Google perfects, while Meta experiments.

AI’s Ever-Developing Linguistic Brain

The Omnilingual ASR model is part of Meta’s long-term strategy to create a “superintelligent” AI that can understand all human communication, from speech to gestures to cultural nuances.

While Meta acknowledges the system produces imperfect transcriptions, its ability to adapt to new languages from a handful of examples could let its coverage grow quickly. Over time, it could even support real-time voice translation in VR and AR spaces, a capability central to the Metaverse vision CEO Mark Zuckerberg champions.

Still, some linguists warn of the risks: overreliance on AI for language preservation can introduce biases and inaccuracies, especially in languages and dialects that lack standardised writing systems.

Yet for Meta, the mission is clear: the more languages its AI learns, the closer it gets to a truly global superintelligence.

TF Summary: What’s Next

Meta’s Superintelligence Labs now stands at the frontier of AI linguistics, bridging technology and cultural preservation. While the Omnilingual ASR model isn’t flawless, it signals a massive leap toward an internet that speaks everyone’s language.

MY FORECAST: Expect Meta to refine these models for its AI ecosystem, including Messenger, WhatsApp, and Quest VR devices. Expect partnerships with universities and cultural groups as Meta works to verify and improve its models for lesser-known languages. Within five years, this project could change how humanity communicates, both online and in other realities.
