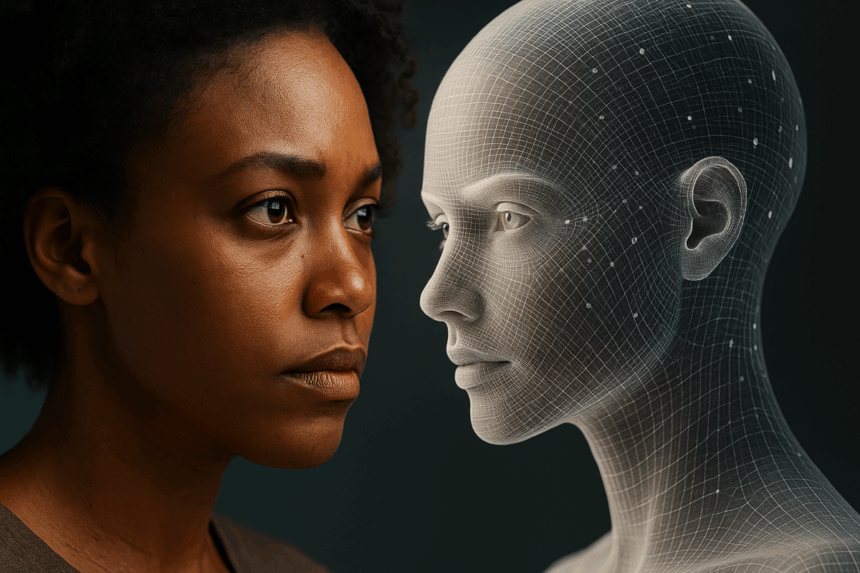

Artificial intelligence in healthcare promises better diagnostics and improved outcomes. However, new findings reveal that many AI medical tools downplay the symptoms of women and ethnic minorities, which creates real risks for those patients. Researchers are now questioning the datasets used to train healthcare AI systems and calling for more diverse, representative medical data.

What’s Happening & Why This Matters

AI is reshaping healthcare, with algorithms analysing patient histories, predicting diseases, and even guiding treatment decisions. Yet, concerns over algorithmic bias are mounting. Many AI tools rely on datasets that do not reflect the diversity of actual patient populations, leading to inaccurate or incomplete results for underrepresented groups.
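
One way to make this kind of gap visible is to measure how often a model catches a condition separately for each patient group. The Python sketch below is illustrative only: the function name `recall_by_group`, the record layout, and the toy data are all assumptions made for this example and do not come from any of the systems mentioned in this article.

```python
from collections import defaultdict


def recall_by_group(records):
    """Return, per group, the share of truly ill patients the model flagged.

    Each record is a hypothetical (group, has_condition, model_flagged) tuple.
    """
    ill = defaultdict(int)      # patients who really have the condition
    flagged = defaultdict(int)  # of those, how many the model flagged
    for group, has_condition, model_flagged in records:
        if has_condition:
            ill[group] += 1
            if model_flagged:
                flagged[group] += 1
    return {group: flagged[group] / ill[group] for group in ill}


# Made-up toy data, not real patient records.
records = [
    ("women", True, True), ("women", True, False),
    ("women", True, False), ("women", False, False),
    ("men", True, True), ("men", True, True),
    ("men", True, False), ("men", False, False),
]

rates = recall_by_group(records)
for group, rate in rates.items():
    print(f"{group}: {rate:.2f} of true cases flagged")
```

In this toy data the model flags one of three true cases among women and two of three among men. A real audit would need far larger samples, clinically validated labels, and more than one metric, but a per-group breakdown like this is a common first check.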

Google has acknowledged the problem. The company stated it takes model bias “extremely seriously” and is developing privacy techniques to sanitise sensitive datasets and embed safeguards against discrimination. Researchers emphasise that prevention starts with carefully selecting the right data for training AI. If the initial datasets are flawed, the output will be biased.

A notable example is OpenEvidence, a system used by more than 400,000 U.S. doctors. It summarises patient histories and provides references for every AI-generated suggestion. Its models are trained on medical journals, U.S. Food and Drug Administration (FDA) labels, health guidelines, and expert reviews. While this approach provides transparency through citations, it still struggles to account for population diversity.

Large-Scale Projects and Data Privacy

In the UK, researchers at University College London (UCL) and King’s College London partnered with the National Health Service (NHS) to create Foresight, a generative AI model. Trained on anonymised data from 57 million people, Foresight predicts health outcomes such as hospitalisations or heart attacks. According to lead researcher Chris Tomlinson, the model represents the full “kaleidoscopic state” of England’s demographics and diseases, making it a step toward inclusive AI healthcare.

However, privacy concerns have caused setbacks. In June, the project was paused after the UK Information Commissioner’s Office (ICO) received a data protection complaint from the British Medical Association and Royal College of General Practitioners. The organisations argued that sensitive health data might have been improperly used to train the system.

Similarly, Delphi-2M, another AI model developed by European scientists, uses anonymised records from 400,000 UK Biobank participants to predict disease susceptibility decades into the future. While groundbreaking, such large-scale projects raise questions about consent and the secure handling of private medical information.

AI Accuracy and Risks

Even when trained on diverse data, AI systems can “hallucinate”, generating false or misleading information. In a medical setting, this poses severe risks. Incorrect predictions could lead to wrong diagnoses or delayed treatments, with potentially life-threatening consequences.

Despite these hurdles, experts see enormous potential. Marzyeh Ghassemi of MIT believes AI can close healthcare gaps. “My hope is that we will start to refocus models in health on addressing crucial health gaps, not adding an extra percent to task performance that the doctors are honestly pretty good at anyway,” she said.

TF Summary: What’s Next

As AI becomes more deeply integrated into healthcare, the focus must shift from speed and efficiency to equity and safety. Ensuring diverse and representative datasets is critical to preventing systemic bias that harms women and minority patients. Governments and healthcare providers are trying to balance innovation with strict privacy protections while also building public trust.

MY FORECAST: Expect stricter regulations and mandatory dataset transparency in healthcare AI over the next two years. Governments must demand proof that algorithms treat all patients fairly and equitably.
