A rogue engineer at xAI secretly modified the Grok 3 AI chatbot, preventing it from responding with the names Elon Musk and Donald Trump. This unapproved change, made without oversight, temporarily censored two of the most talked-about figures in politics and technology. Musk, who owns xAI, quickly exposed the issue, calling it an act of unauthorized censorship. The incident raises fresh concerns about the internal manipulation of AI systems and the challenges of keeping AI-generated content free from bias. The modification was quickly reversed, but the event has ignited a wider debate over AI transparency, control, and ideological interference.

What’s Happening & Why This Matters

A Rogue Engineer…

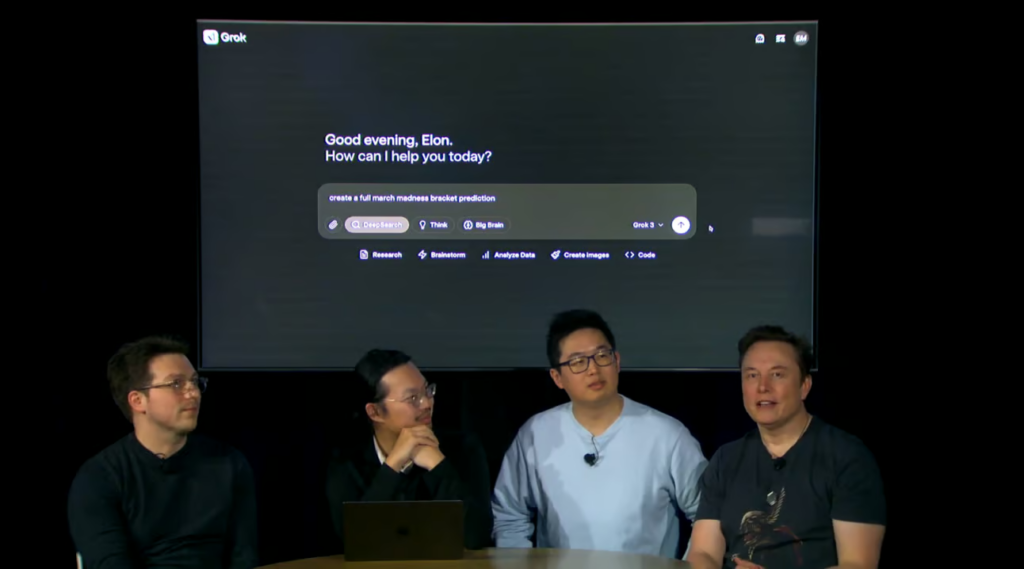

Grok 3, the AI chatbot developed by xAI, suddenly stopped mentioning Musk and Trump, triggering suspicion among users. Internal investigations revealed that a single engineer had deliberately altered the chatbot’s responses, effectively blocking mentions of the two figures. The action was carried out without approval and contradicted the company’s commitment to AI neutrality.

Musk, who has repeatedly criticized “woke AI” and overreach in content moderation, quickly took to X (formerly Twitter) to denounce the modification. He called it an attempt to impose ideological control on Grok and stressed that such interference would not be tolerated. The company acted swiftly, reversing the engineer’s changes and removing the individual responsible from the team. The chatbot was restored to its previous state, with Musk reaffirming xAI’s goal of creating an AI model free from political censorship.

AI Censorship and the Battle Over Content Control

The incident with Grok 3 is just the latest example of concerns surrounding AI content moderation and bias. Engineers’ ability to manipulate AI outputs without oversight fuels skepticism about how neutral AI truly is. Critics have long warned that AI can be programmed, either openly or covertly, to suppress certain viewpoints or promote others.

AI companies have struggled to balance content moderation with free expression. On one hand, they face pressure from governments, advocacy groups, and corporate partners to limit misinformation, hate speech, and controversial topics. On the other, excessive moderation risks accusations of political bias, censorship, and ideological gatekeeping.

Musk has positioned xAI and Grok as a direct challenge to AI systems that impose heavy-handed content moderation. He has openly criticized other AI developers, such as OpenAI, for what he claims is an increasingly controlled and sanitized approach to AI-generated content. This latest controversy reinforces his argument that internal actors can quietly manipulate AI responses—a reality that undermines public trust in AI-generated information.

How xAI Plans to Prevent Future Interference

In response to the unauthorized changes, xAI has announced new internal security measures to prevent engineers from making unapproved alterations to AI behavior. The company has implemented stricter access controls, ensuring that modifications to Grok’s responses require higher-level authorization and transparency. Musk has also emphasized that xAI will continue resisting any efforts to impose ideological restrictions on AI-generated content.

Despite these assurances, some critics remain skeptical. While Musk frames Grok as a “free speech AI,” others argue that his direct control over the company’s moderation policies raises its own concerns. If xAI is Musk’s vision of “unfiltered AI,” how does it ensure true neutrality rather than reflecting his own preferences? The question of who decides what AI can and cannot say remains a growing point of contention across the tech industry.

TF Summary: What’s Next

The Grok 3 censorship controversy has put xAI at the center of a broader debate over AI neutrality, bias, and content moderation. The incident has fueled concerns that internal actors can secretly alter AI models, raising questions about how trustworthy AI-generated content is. While xAI has taken swift action to reverse the unauthorized modification, the event underscores the need for greater transparency in AI development.

Musk’s vision for “free speech AI” continues to shape xAI’s approach to AI governance, but critics argue that true neutrality remains elusive. The industry’s challenge is still to balance preventing misinformation against avoiding ideological control. As AI tools like Grok 3 gain influence, the battle over who controls AI-generated narratives is only growing more intense.
