The AI Action Summit in Paris has brought together world leaders, tech executives, and researchers to address the challenges and opportunities that artificial intelligence presents. While discussions have centered on global governance, AI safety, and sustainability, serious divisions have emerged. The UK and the US declined to sign the summit's international AI declaration, signaling reluctance to commit to global AI regulation, while deepfake technology and corporate restrictions on AI tools have also dominated conversations.

With deepfake clips of French President Emmanuel Macron going viral, concerns over AI-generated misinformation are escalating. Meanwhile, an international law firm has restricted employee access to generative AI tools, citing security risks and unmonitored use. The summit’s outcomes could shape the next wave of AI policy, industry standards, and ethical debate.

What’s Happening & Why This Matters

UK and US Decline to Sign Global AI Agreement

A significant takeaway from the summit is the refusal of the UK and the US to sign a global AI declaration backed by France, China, India, and 57 other nations. The agreement promotes “open, inclusive, and ethical” AI development, with a focus on accessibility, transparency, and sustainability. The UK government cited national security concerns and argued that the declaration lacked clarity on global governance. US Vice President JD Vance defended a deregulatory approach, warning that excessive oversight could stifle the industry’s growth. Macron pushed back, stressing that AI must be regulated so that safety and innovation can coexist. The UK’s decision has drawn criticism from AI safety advocates, who argue that it puts the country’s credibility as a leader in AI governance at risk.

Macron’s Deepfakes Spark Debate on AI Misinformation

Macron’s use of AI-generated deepfakes to promote the summit has also sparked controversy. A viral video montage inserted AI versions of Macron into pop culture clips ranging from 1980s disco music to action TV shows. Some experts argue that normalizing deepfakes makes misinformation harder to spot, but Macron defended AI’s potential to transform industries such as healthcare and energy. Paul McKay of Forrester Research warned that deepfake technology must be handled carefully, as its misuse could erode trust in digital content. Meanwhile, the EU’s AI Act, which is being phased in and includes transparency rules for synthetic media and AI-generated content, was heavily debated at the summit.

Law Firms and AI Restrictions: A Growing Trend?

Beyond the policy disputes, AI use in the workplace is facing new restrictions. The international law firm Hill Dickinson has restricted employees’ general access to AI tools, citing security risks and compliance concerns. The firm recorded more than 32,000 hits to ChatGPT and over 3,000 hits to DeepSeek in a single week, raising alarm over potential data leaks. Employees also accessed Grammarly nearly 50,000 times in the same period, adding to concerns about sensitive data exposure. AI advocates argue that banning AI tools outright is counterproductive and that managed integration is a better path forward. The UK’s Information Commissioner’s Office (ICO) emphasized that organizations should enable secure AI use rather than push it underground. Other law firms and businesses are now monitoring AI usage closely, reflecting an industry-wide debate over AI security, compliance, and employee training.

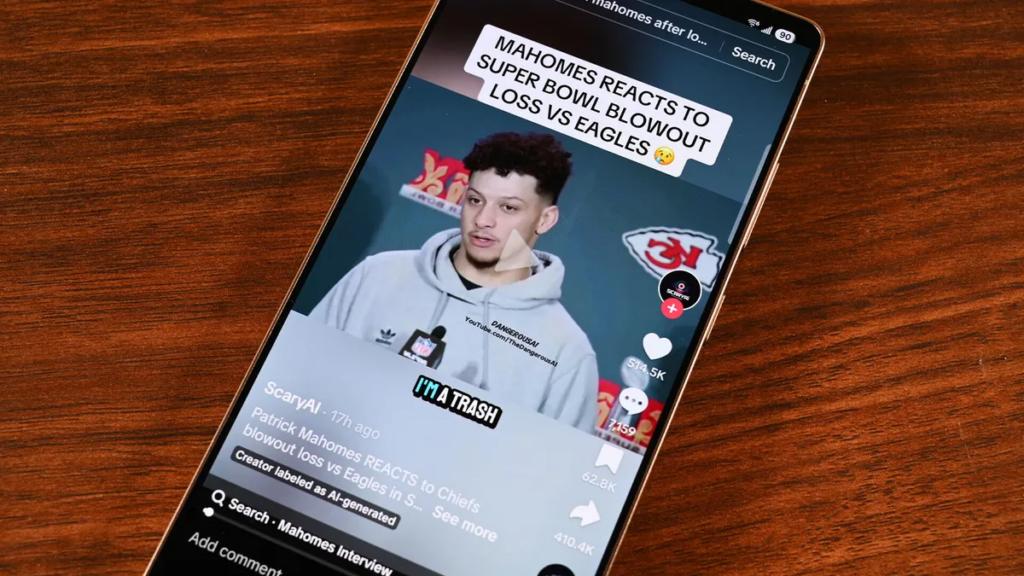

Deepfake Culture and the Risks of AI-Generated Content

While deepfakes have become common in entertainment, their potential for abuse is raising red flags. A viral deepfake of NFL quarterback Patrick Mahomes, viewed more than six million times, blurred the line between parody and deception. The ScaryAI account, known for its hyper-realistic sports deepfakes, continues to produce viral AI-generated content, further complicating efforts to curb misinformation. Experts warn that unchecked deepfake technology could be exploited for disinformation, fraud, and election interference. Several countries are pushing for stricter labeling laws for AI-generated content, while platforms such as Meta have struggled to manage AI-generated profiles. Whether AI-generated content is harmless fun or a serious threat to digital trust remains an open question.

TF Summary: What’s Next

The AI Action Summit in Paris has exposed critical divides over AI governance, regulation, and misinformation. With the UK and US declining to sign the summit’s AI declaration, concerns over AI safety, ethics, and corporate responsibility are mounting. As deepfakes and generative AI gain traction, governments and industries face growing pressure to balance innovation with regulation.
