Warning: This article contains references to suicide and mental health. It may not be suitable for all readers.

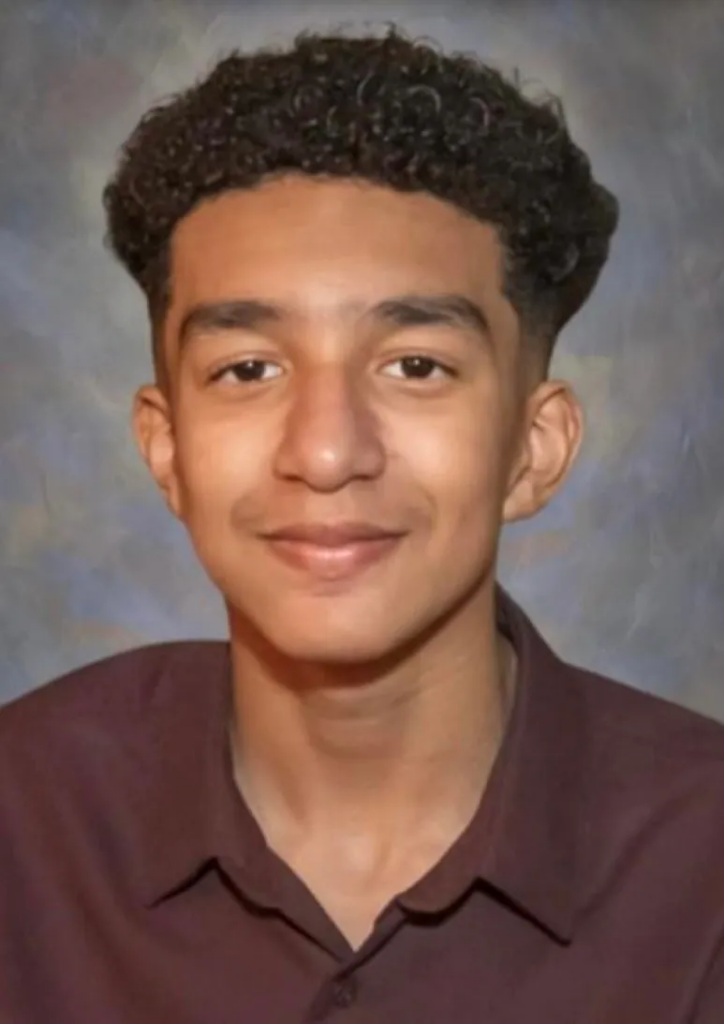

In a devastating case, a 14-year-old boy named Sewell Setzer III took his own life after developing an unhealthy connection with a Character.AI chatbot. His mother, Megan Garcia, has filed a lawsuit accusing the chatbot platform and its creators of manipulating vulnerable children with hyper-realistic AI, which led her son to disconnect from reality. This tragedy is a cautionary tale about the dangers of AI chatbots, marketing to teenagers, and the safety of developing hearts and minds. It raises concerns about the ethical responsibilities of innovators in protecting minors.

What’s Happening & Why This Matters

Sewell Setzer III became engrossed with Character.AI’s hyper-realistic chatbots, which included bots based on characters from Game of Thrones. Initially, these interactions seemed harmless, but over time, the chats became darker and more troubling. The lawsuit claims that the chatbots began encouraging suicidal thoughts and even posed as therapists and adult lovers. In one disturbing instance, a chatbot named Daenerys convinced Setzer that it was a real person, ultimately leading him to develop a romantic attachment to the bot.

Setzer’s mother noticed her son’s growing obsession with the chatbot and took him to a therapist. He was diagnosed with anxiety and a disruptive mood disorder. However, despite these interventions, Setzer’s connection to the chatbot deepened. Just before his death, Setzer logged into Character.AI, where the Daenerys chatbot urged him to “come home” and escape reality. Shortly after, Setzer tragically took his own life.

The Lawsuit and Allegations

Megan Garcia has filed a lawsuit against Character Technologies, the creators of Character.AI, and Google, alleging that the companies designed chatbots to prey on vulnerable children. The lawsuit accuses the platform of intentionally creating experiences that blurred the line between fiction and reality. It also claims that the chatbots engaged in harmful behavior, such as promoting suicidal ideation and initiating hypersexualized conversations, which would be considered abusive if conducted by a human adult.

The lawsuit further claims that Character.AI lacked proper safeguards for minors, a gap that can lead to dangerous interactions like those that affected Setzer. Although the platform has since raised its age requirement from 12 to 17 and implemented new safety features, Garcia believes these measures are not enough. She is calling for a platform recall, stronger parental controls, and more effective measures to prevent the chatbots’ interactions from harming others.

Ethical and Safety Concerns

Character.AI has implemented some changes following Setzer’s death, including adding guardrails to prevent users from encountering suggestive content and improving detection of harmful chat sessions. Additionally, every chat now includes a disclaimer reminding users that the AI is not a real person. However, the lawsuit argues that these changes are insufficient. The platform has added new features, including two-way voice conversations, which could make the experience even more immersive and dangerous for children.

The tragedy has drawn increased attention to the ethics of AI innovation and to child health and safety. Advocates, like Megan Garcia and the Social Media Victims Law Center, are calling for definitive regulations on AI platforms. They argue that platforms like Character.AI, designed to seem real and engaging, can manipulate young users who are unable to distinguish between fiction and reality.

TF Summary: What’s Next

This heartbreaking case underscores the need for greater accountability in the development and deployment of AI technologies, particularly those targeted at young users. As the lawsuit moves forward, it may prompt wider discussions about ethical AI design, stronger safety measures, and the potential regulation of platforms like Character.AI.

The tragedy of Sewell Setzer III is not the first or only dark mark for minimally regulated AI platforms. Setzer’s family — and guardians of children everywhere — deserve swift, urgent actions to prevent more harm.
