The UK government and regulatory bodies have raised alarms about the role of social media platforms in fueling violence and spreading harmful content. Concerns have intensified after a series of violent incidents, including a fatal stabbing in Southport, where social media amplified tensions and misinformation. These platforms now face increasing pressure to address their involvement in promoting unrest.

What’s Happening & Why This Matters

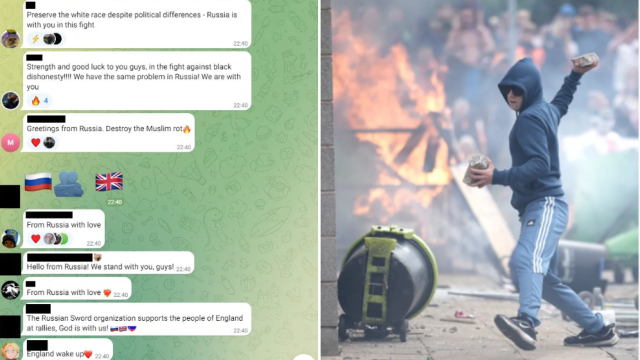

After a tragic stabbing in Southport that left three young girls dead, social media platforms faced criticism for enabling the rapid spread of false information and inflammatory content. Far-right groups exploited the situation by circulating misleading details about the attacker, which sparked real-world violence, including anti-immigrant riots.

In response, Ofcom, the UK’s communications regulator, issued stern warnings to social media and video-sharing platforms. The regulator stressed that platforms must prevent their services from being used to incite violence, hatred, and other crimes, and reminded them of their legal duty to protect users from content that could provoke such actions. The warning comes as the UK prepares to implement the Online Safety Act, which will impose stricter responsibilities on platforms to manage illegal content.

Despite these efforts, some platforms, such as X (formerly Twitter), have resisted calls to remove harmful content. Instead, they have opted to let users add context through features like Community Notes, a strategy that has drawn criticism from UK officials who demand more direct action.

Meanwhile, Telegram, a messaging app known for its privacy features, experienced a surge in use following the Southport stabbing. Far-right groups reportedly used the app to organize violent activities, including an attack on a mosque that left over 50 police officers injured. Counterterrorism organizations have noted that while many tech platforms are exploited in these ways, X and Telegram are particularly vulnerable to being used to spread disinformation and organize violence.

TF Summary: What’s Next

The UK’s implementation of the Online Safety Act will increase pressure on social media companies to remove illegal and harmful content. As the government scrutinizes these platforms more closely, especially in light of their role in recent violence, companies like X and Telegram may need to rethink their content moderation strategies. Their responses will be crucial as the debate over balancing free speech with public safety continues.