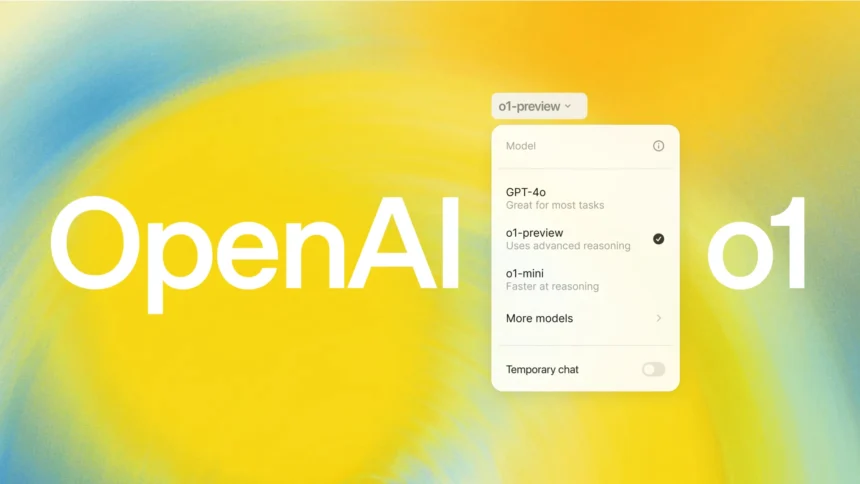

OpenAI has introduced its newest AI language models, o1-preview and o1-mini, which the company says offer stronger reasoning and problem-solving capabilities than its previous models. The new model family, developed under the codename “Strawberry,” aims to set a new standard in AI by focusing on complex tasks that benefit from iterative, step-by-step problem-solving. The models are available to ChatGPT Plus users and selected API customers, and they have drawn considerable attention in the AI community because of their potential to handle challenging tasks better than earlier systems.

What’s Happening & Why This Matters

OpenAI launched the o1-preview and o1-mini models as part of its “Strawberry” AI line, claiming that they outperform the older GPT-4o model across a range of benchmarks, including competitive programming, mathematics, and scientific reasoning. According to OpenAI, its o1 reasoning model ranks in the 89th percentile on competitive programming questions from Codeforces and scores 83 percent on a qualifying exam for the International Mathematical Olympiad (IMO), compared with GPT-4o’s 13 percent. These figures have not yet been independently verified, and benchmark results like these generally need external testing before they can be considered credible.

The new models are trained with a reinforcement learning (RL) approach that encourages the AI to “think” through a problem step by step before responding. The idea is similar to the “chain-of-thought” prompting technique used with earlier models. This method lets o1 try different strategies, recognize its own errors, and refine its answers, which makes it especially suited to tasks that require deep reasoning and planning.

However, not everyone is convinced of the model’s capabilities. Some users report that o1 does not yet surpass GPT-4o in every respect and that it can be slow because of its multi-step processing. Joanne Jang, a product manager at OpenAI, addressed these concerns on social media. She said that while o1 excels at difficult tasks, it is not a “miracle model” that outperforms its predecessors in every area, and she expressed optimism about the model’s potential and future improvements.

AI industry experts and independent researchers remain cautious. Wharton professor Ethan Mollick shared his hands-on experience, describing o1 as “fascinating” for its problem-solving abilities but noting that it does not excel in every area. He cited two contrasting examples. The model successfully built a teaching simulator that used multiple agents and generative AI, but it needed many steps and considerable time to solve a crossword puzzle correctly, sometimes hallucinating answers along the way.

Controversies and Challenges

The launch of o1 has sparked debate over the use of terms like “reasoning” to describe AI capabilities. Critics argue that words such as “thinking” and “reasoning” wrongly suggest that AI systems have human-like cognitive abilities. Clément Delangue, CEO of Hugging Face, argued that AI systems do not “think” but instead “process” and “run predictions.” This debate reflects broader concerns about how AI is presented to the public and the risk of creating misleading impressions of what it can do.

Moreover, the new models do not yet support several features available with earlier models, such as web browsing, image generation, and file uploading. OpenAI plans to add these functions in future updates while continuing to develop both the o1 and GPT model series.

TF Summary: What’s Next

OpenAI’s o1 models introduce new capabilities and training methods for AI problem-solving, but they also come with limitations and have drawn mixed reactions from early users. While the models show promise on complex tasks, their performance claims still need validation through independent testing. OpenAI plans to expand the models’ features and improve their performance in future updates. Observers should watch how these developments unfold, particularly their impact on AI’s role in solving increasingly complex problems.