The National Highway Traffic Safety Administration (NHTSA) is once again investigating Tesla’s Full Self-Driving (FSD) technology — this time over allegations that the system runs red lights and ignores lane markings, raising fresh questions about the safety of partially automated driving systems.

The probe covers FSD (Supervised) and FSD (Beta) versions deployed across approximately 2.9 million Tesla vehicles. NHTSA's Office of Defects Investigation (ODI) says it has received multiple complaints and incident reports suggesting the system can behave unpredictably in critical driving situations.

What’s Happening & Why This Matters

Tesla’s Full Self-Driving Under Federal Scrutiny

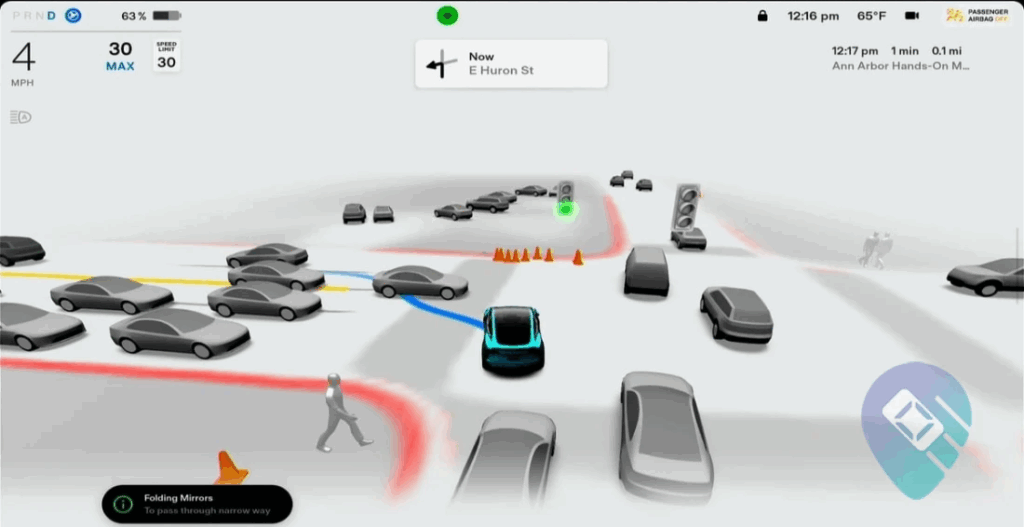

The investigation focuses on two key safety concerns: how FSD handles traffic signals and how it navigates lane boundaries. In several cases, FSD-enabled vehicles reportedly ran red lights, or stopped briefly and then proceeded into the intersection while the light was still red. Some drivers said the system failed to detect or display the correct traffic signal state; others said it did not alert them to take control.

ODI has logged 18 complaints and one media report on red-light issues. It also identified six incidents that ended in crashes, four of which resulted in injuries.

The second concern involves lane detection failures. FSD-equipped Teslas have reportedly drifted into the wrong lane or attempted to turn the wrong way onto divided roads, even where double-yellow lines and “Do Not Enter” signs were present. NHTSA received two internal reports from Tesla, 18 public complaints, and two media reports about these lane issues. ODI also recorded six driver complaints and four crash-related reports involving ignored or misinterpreted lane markings.

Technical and Regulatory Obstacles

NHTSA’s review now centers on whether Tesla’s driver monitoring and alert system gives drivers enough time to intervene when FSD misbehaves. Regulators also want to know whether recent software updates or interface changes have introduced new risks.

Currently, FSD remains a Level 2 driver-assistance system, meaning drivers need to stay alert and ready to take control at all times. Despite its “self-driving” branding, Tesla’s system requires active human supervision — something critics argue is poorly communicated to consumers.

Tesla’s robotaxi trials in Austin and San Francisco still keep human safety monitors on board, reflecting ongoing regulatory caution. The new probe follows an earlier 2024 investigation into whether FSD contributed to collisions in low-visibility conditions.

Implications

This investigation comes as oversight of autonomous and semi-autonomous vehicles intensifies across the U.S. Regulators are under pressure to address safety risks without stifling innovation. Tesla, for its part, maintains that its driver-assistance systems improve safety when used as directed, citing billions of miles driven with FSD engaged.

Still, Tesla’s FSD remains a lightning rod for criticism. Experts argue that the company’s beta testing approach on public roads blurs the line between research and consumer deployment. Critics contend that features labeled as “self-driving” mislead drivers into over-relying on automation.

Missy Cummings, a robotics and AI safety researcher at George Mason University, said,

“It’s a regulatory paradox — Tesla’s FSD is technically supervised, but it’s marketed as autonomy. That tension puts every driver, and everyone sharing the road, at risk.”

TF Summary: What’s Next

NHTSA’s latest review of Tesla’s FSD adds to a growing stack of investigations into automated driving safety. With roughly 2.9 million vehicles covered by the probe, the outcome may shape how advanced driver-assistance systems are regulated across the auto industry.

MY FORECAST: If the probe finds systemic flaws in Tesla’s FSD design or updates, regulators may require recalls, software rollbacks, or new driver-monitoring standards. Tesla’s automation roadmap — including its robotaxi ambitions — depends on how effectively it demonstrates that FSD enhances, rather than endangers, public safety.
