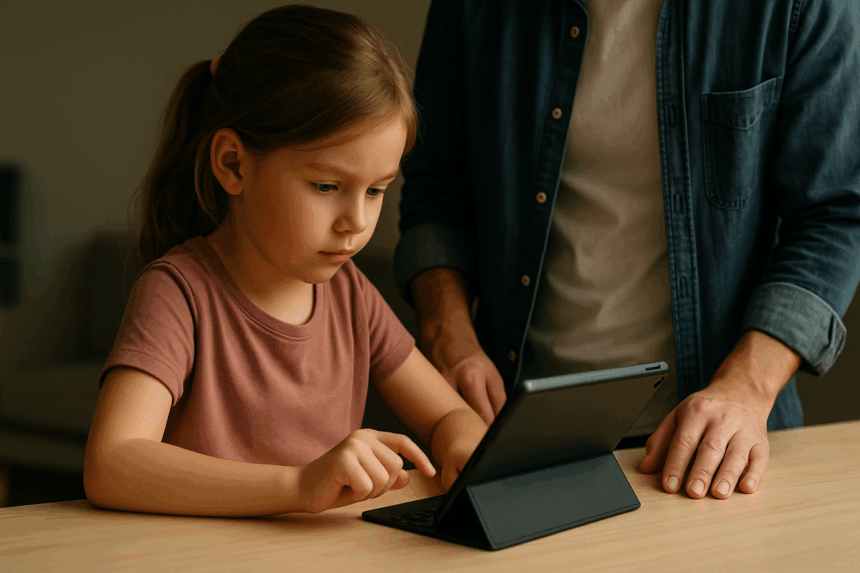

With children spending more time online, protecting them from digital risks is paramount. Artificial intelligence (AI) companions, social media, and enticing digital platforms pose new risks daily. Governments, innovators, and experts are championing action plans to keep children safe. The plans combine safety features, education, regulation, and support to address privacy, mental health, and online exploitation.

What’s Happening & Why This Matters

Recent studies reveal that over 70% of American teens have used AI companion platforms such as Character.AI, Nomi, and Replika. These AI “virtual friends” engage users as confidants, companions, or therapists. Experts worry that many parents remain unaware of how much personal information their children share with AI systems. Common Sense Media recommends that parents start open, nonjudgmental conversations about AI use. Understanding what draws kids to AI can help parents set healthy boundaries.

Psychologists stress that AI companions are programmed to be agreeable and validating. Real friendships, by contrast, teach kids how to handle conflict and tough emotions. The American Psychological Association (APA) advises parents to clarify that AI companionship is entertainment, not a substitute for genuine relationships. Experts warn that excessive AI use could lead to emotional distress or isolation if it replaces human connections.

Social media leader Meta launched new safety features for teens on platforms like Instagram and Facebook. These include warnings about suspicious messages, one-tap blocking, and AI tools that detect users who misstate their age. Meta removed over 600,000 accounts linked to predatory behaviour targeting children under 13. The company aims to make teen accounts private by default and restrict direct messaging to trusted contacts. Such measures respond to rising concerns about online exploitation and youth mental health.

Meanwhile, TikTok content moderators in Germany are striking over plans to replace their jobs with AI tools. The union notes the unique expertise of human moderators in understanding cultural and political contexts; their skills are essential for maintaining safe digital communities. The dispute illustrates tensions between AI automation and the need for human judgment to protect vulnerable users, including children. TikTok’s Safety Hub remains an important resource for understanding the protections in place.

Education and regulation are central to protecting kids online. Experts call for clear rules on AI safety and digital literacy programs. The White House and tech companies pledge investments in youth AI education. Parents can set boundaries on screen time and monitor AI interactions to safeguard children’s well-being.

TF Summary: What’s Next

Protecting children online demands coordinated action from parents, tech firms, and regulators. AI companions and social media platforms evolve quickly, creating both opportunities and risks for youth. Safety features, open dialogue, and education help kids navigate digital platforms in healthy ways.

Regulators must keep pace with AI developments through proactive privacy and security enforcement. At the same time, human moderation remains a critical component in detecting harmful content. As children’s digital lives grow more complex, ongoing efforts are essential to promote both innovation and safety.
