Google Is Investing In Its Own AI Chips

Google waded further into the AI hardware arena this year, and the energy around its Tensor Processing Unit (TPU) program created quite a stir in Silicon Valley and then on Wall Street. Nvidia sat at the center of that conversation: Nvidia supplied the GPUs, and Google built the models. That rhythm cracked a little after new reporting revealed that Google was leaning harder into its own silicon. Investors reacted fast. Analysts questioned Nvidia’s growth path. Engineers across the industry asked one question: Can Google punch at Nvidia’s level?

The reporting emerged after Google briefed partners about its TPU v6 accelerator and Axion processor efforts. Journalists surfaced new comments from Google executives about internal adoption. Nvidia responded through the press, stressing stability and demand. That exchange exposed the tension inside an AI market that keeps expanding with no ceiling in sight.

What’s Happening & Why This Matters

A Full Court Press on AI Silicon

Google and Nvidia collaborated for years. That history sat in the background. Yet the tone around their relationship shifted through 2025 as enterprise buyers explored alternatives. Some wanted lower energy use. Others wanted cheaper training cycles. Some disliked GPU scarcity. Every path triggered fresh interest in competing silicon.

Google speaks with unusual confidence about its TPU program. The company described its TPU v5p as an engine for model training at a scale that reduces energy use and lowers latency. TPU v6 followed with a new architecture that targets inference throughput and creates more stable performance across large clusters. Engineers inside Google described the hardware as “built for internal AI growth.” That phrasing carried weight since Google now runs models across Search, Maps, YouTube, and Android.

Google also introduced Axion, a custom Arm-based processor with tighter integration for memory-heavy workloads. Early testers noted faster response times in retrieval pipelines and less heat under load. That detail circulated widely because energy use affects many cloud buyers’ purchasing decisions.

Nvidia Stands Its Ground

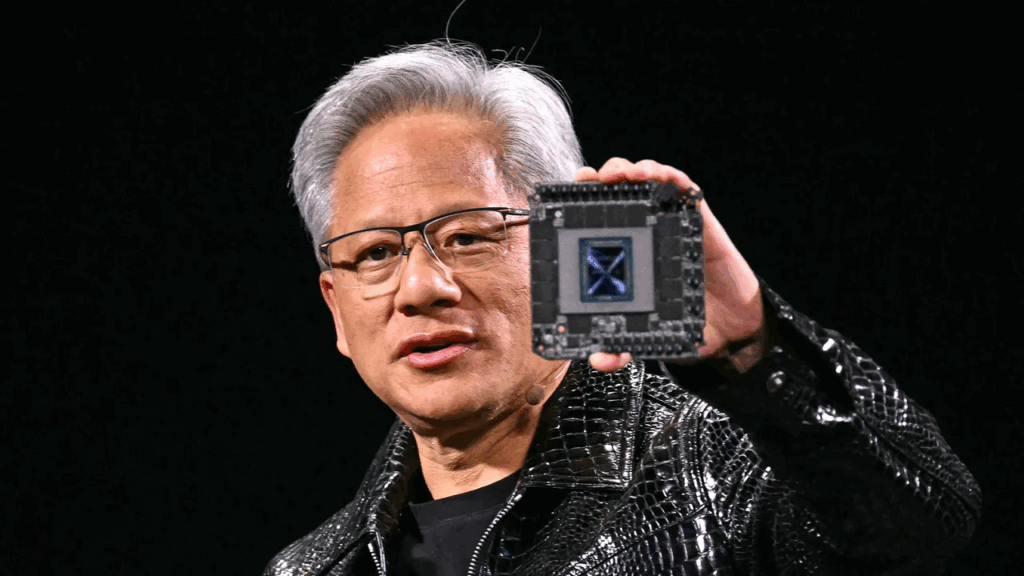

Nvidia fired back. The company told reporters that its customers “trust the full stack,” pointing to CUDA, networking, and its growing library of AI frameworks. Nvidia described Google as a “partner and a customer” rather than a competitor. The message was meant to calm a market that sold off Nvidia shares after the Google news cycle.

Nvidia leadership also stressed demand from enterprise and sovereign clients. Nvidia’s messaging reinforced the corporate line: training clusters still rely on Nvidia’s GPU ecosystem because it offers stability and scale through a familiar workflow. In other words, Nvidia voiced no concern about Google’s entry.

Investors and Market Reactions

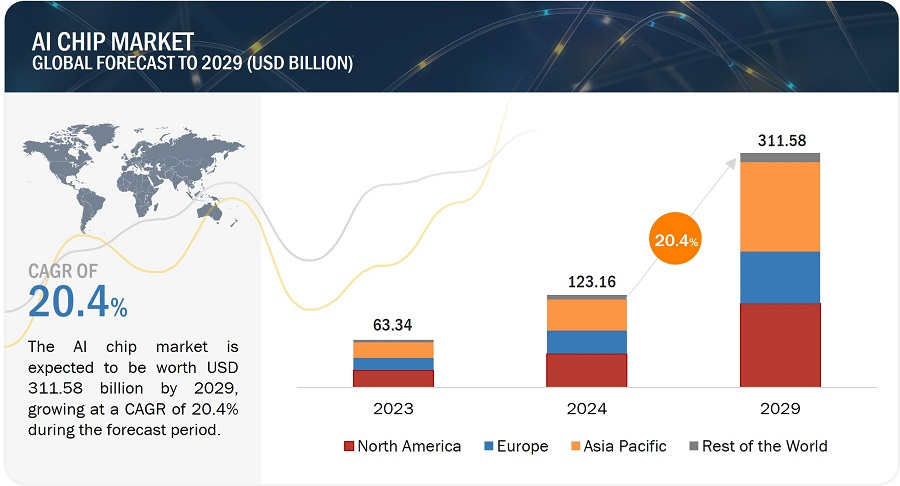

The tension between Google’s independence and Nvidia’s dominance touched financial markets. Nvidia shares dipped after the reporting surfaced, as traders priced in expectations of slower hyperscaler spending. Analysts questioned whether cloud buyers want in-house silicon that reduces reliance on Nvidia. Google’s work, Amazon’s Graviton and Trainium, and Microsoft’s Maia all feed that question.

Google’s silicon strategy also created new expectations around performance-per-dollar metrics. CIOs read every benchmark. Procurement teams consider heat output. Startups chase hardware that shortens training cycles. The market is less predictable, and analyst notes no longer treat Nvidia as an unchallenged monopoly.

This Feels Different…

Google’s decision feels distinct because AI workloads appear to be nonstop. Models increase in size. Retrieval tasks multiply. Consumers expect real-time AI in phones, browsers, and enterprise dashboards. Every part of that system demands more computing power. Google stated that demand is up so much that it must “double capacity every six months.” That statement captured the hardware constraints with unusual clarity.
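
The “double capacity every six months” figure compounds quickly. As a rough sketch, assuming the doubling rate holds steady (an assumption for illustration, not something Google stated), the capacity multiple after a given number of months is 2 raised to the number of months divided by six. The function name and parameters below are hypothetical.

```python
def capacity_multiplier(months: float, doubling_period_months: float = 6.0) -> float:
    """Return how many times capacity grows after `months`,
    assuming it doubles every `doubling_period_months` (illustrative assumption)."""
    return 2.0 ** (months / doubling_period_months)


if __name__ == "__main__":
    # Print the implied growth over a few horizons.
    for years in (1, 2, 5):
        multiple = capacity_multiplier(12 * years)
        print(f"{years} year(s): {multiple:,.0f}x capacity")
```

Under that assumption, one year implies four times today’s capacity and five years implies roughly a thousandfold increase, which helps explain why a company in that position would want its own silicon supply.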

Nvidia sits at the center of the storm, and Google presses from both inside and outside the ecosystem, as a customer of Nvidia’s GPUs and as a builder of rival chips. That dual force changed the industry’s tone.

TF Summary: What’s Next

AI compute demand is surging in every sector: search, retail, entertainment, government, and enterprise SaaS. Google amplifies that surge with its TPU and Axion programs. Nvidia anchors the opposite side of the market with its GPU empire. Buyers stand between them with more choice than ever, and that choice is reshaping the AI arms race.

MY FORECAST: Google intensifies its silicon push next year with deeper TPU integration across Search and Gemini traffic. Nvidia responds with more vertical optimization and pricing strategies that target hyperscalers directly. The competitive heat expands into new categories: networking, cooling, memory, and AI-specific storage. A two-player rivalry becomes a multi-front battle.
