The platform tightens age gates worldwide, trading friction and trust for safety-by-default.

Discord is putting a difficult question to its users. If you want to see sensitive content, you must prove your age. Not with a checkbox. Or a birthday field. With a face scan or a government ID.

The platform confirms that it will roll out global age verification from early March, defaulting all users to a “teen-appropriate” experience unless they verify as adults. The change applies worldwide and affects access to age-restricted communities, unblurred sensitive media, and certain direct messages.

This is one of the most aggressive safety measures by a major social platform in years. It also comes at a time of deep mistrust.

Discord is already facing backlash after a previous age-verification partner suffered a breach that exposed IDs for about 70,000 users. Privacy advocates warn that face scans and ID uploads carry real risk, even when companies promise deletion.

So Discord’s safety push sits at the center of a wider question.

How do platforms protect minors without turning adults into surveillance subjects?

What’s Happening & Why This Matters

Policy: “Teen-by-Default”

Discord says teen safety comes first. The company already applies age checks in the UK and Australia to comply with local online safety laws. It now expands that model globally.

Savannah Badalich, Discord’s global head of product policy, explains the intent clearly. “Nowhere is our safety work more important than when it comes to teen users,” she says. “Rolling out teen-by-default settings globally builds on Discord’s existing safety architecture.”

By default, users see restricted content, limited discoverability, and tighter communication rules. To lift those limits, users must verify their age.

That verification happens in two ways.

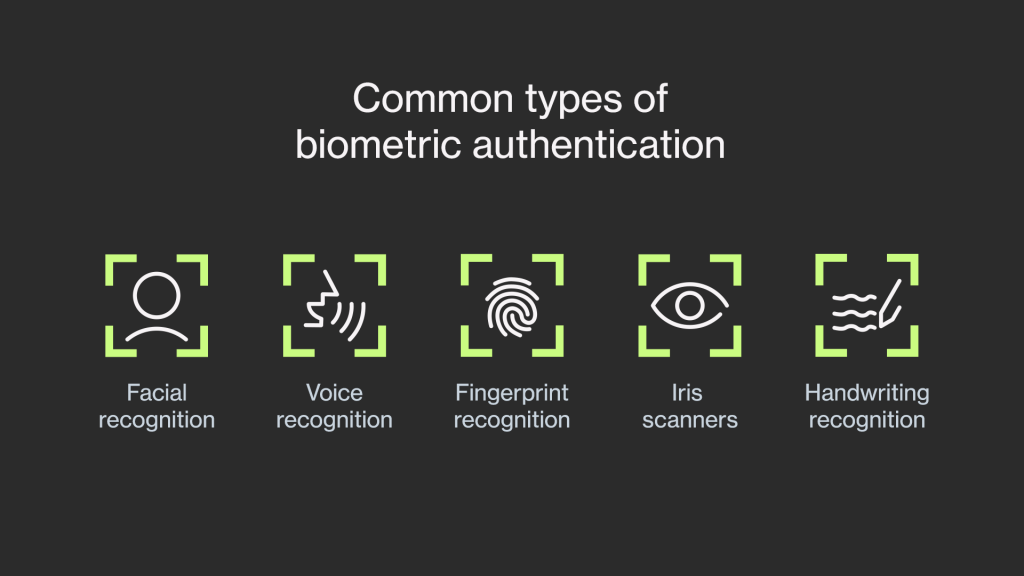

Users can upload a government-issued ID. Or they can take a short video selfie, which AI uses to estimate facial age.

Discord frames this approach as one of choice and proportionality.

The platform says most adults will not need to verify at all, thanks to a new age inference model. That model weighs signals such as account tenure, device data, and activity patterns. The messaging platform does not scan private messages or content, according to the company.

Only users who fail inference checks or attempt to access age-restricted spaces receive verification prompts.
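The gating described above can be sketched as a small decision function. This is a minimal illustration under stated assumptions, not Discord's implementation: the `Account` fields, `experience_for`, and `should_prompt` are hypothetical names, and the sketch takes one simplified reading of the policy, in which a prompt appears only when a user without an adult signal tries to open restricted content.

```python
from dataclasses import dataclass
from enum import Enum


class Experience(Enum):
    TEEN = "teen-appropriate"
    ADULT = "adult"


@dataclass
class Account:
    # Hypothetical fields for illustration; not Discord's actual data model.
    verified_adult: bool = False   # passed an ID or face-scan check
    inferred_adult: bool = False   # age inference model judged the user an adult


def experience_for(account: Account) -> Experience:
    """Teen-by-default: the adult experience needs a positive age signal."""
    if account.verified_adult or account.inferred_adult:
        return Experience.ADULT
    return Experience.TEEN


def should_prompt(account: Account, requests_restricted: bool) -> bool:
    """Prompt only when a user without an adult signal asks for restricted access."""
    is_teen_default = experience_for(account) is Experience.TEEN
    return is_teen_default and requests_restricted
```

In this reading, an account with no signals stays in the teen-appropriate experience and sees a prompt only on requesting restricted content, while an account the inference model treats as adult is never prompted.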

On-Device Face Scans and Third-Party Partners

Discord stresses that privacy is central to the design.

For face scans, Discord says age estimation runs entirely on the user’s device. Video selfies never leave the phone. The system sends only a ‘pass’ or ‘fail’ result back to Discord.
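The data-minimization claim can be illustrated with a short sketch. It assumes a hypothetical `estimate_age` callable standing in for the on-device model and an assumed threshold of 18. The function name and payload shape are inventions for illustration, not the vendors' API. The point is only that the video frames and the estimated age stay local, and the returned payload carries nothing but the outcome.

```python
from typing import Callable, Sequence

ADULT_AGE = 18  # assumed threshold for illustration


def check_age_on_device(
    frames: Sequence[bytes],
    estimate_age: Callable[[Sequence[bytes]], float],
) -> dict[str, str]:
    """Run the estimator locally and return only a pass/fail payload."""
    estimated = estimate_age(frames)  # hypothetical model call; runs on the device
    outcome = "pass" if estimated >= ADULT_AGE else "fail"
    # Neither the frames nor the estimated age are included in the payload.
    return {"result": outcome}
```

A caller would send only the returned dictionary to the server. Whether a real deployment keeps to this boundary depends on the vendor's implementation, which this sketch cannot verify.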

For ID uploads, Discord says documents are deleted once age confirmation is completed. It says neither Discord nor its partners permanently store IDs or selfies.

To handle verification, Discord partners with k-ID, a service also used by Meta and Snap. K-ID, in turn, relies on facial age estimation technology from Privately, a Swiss firm focused on on-device processing.

K-ID states that it never receives biometric data. It says it stores only the result of the age check, not images or videos. Privately echoes that claim, saying its models run locally and avoid cloud transmission to comply with GDPR data-minimization rules.

On paper, this looks like best practice. In practice, many remain unconvinced.

Trust Erodes After a Previous Breach

Discord’s timing does not help.

In October, hackers stole official IDs from roughly 70,000 Discord users after breaching a third-party vendor that handled age verification appeals in the UK and Australia. The attackers reportedly attempted extortion. Discord said the breach did not involve its own systems, but the damage stuck.

That incident fuels today’s backlash.

On Reddit and gaming forums, users describe the new policy as invasive. Some vow to leave rather than submit selfies or IDs. Others argue that age verification is easy to bypass and punishes adults without truly stopping minors.

One Reddit user sums up the mood bluntly: “Why would anyone trust Discord with their ID after they already leaked them?”

Privacy advocates add another concern.

Even when data stays on-device, age estimation still involves biometric processing. That creates risk if models misclassify users or if appeal flows require manual review. Experts warn that age-estimation tools often struggle to distinguish between 17- and 18-year-olds, the exact boundary these systems aim to enforce.

Discord: Safety Requires Friction

Discord acknowledges that the change will cost users.

Badalich tells reporters that Discord expects some people to leave. She also says the company plans to “find other ways to bring users back.”

From Discord’s perspective, friction is necessary.

The platform wants to reduce accidental exposure to explicit content. It wants to block unsolicited messages from strangers. It wants to make adult spaces deliberate, not default.

The strategy aligns Discord with an industry shift.

TikTok, Roblox, and Meta all introduced stricter teen defaults in response to pressure from lawmakers. Discord’s CEO, Jason Citron, appeared before a U.S. Senate hearing in 2024 alongside Mark Zuckerberg, Evan Spiegel, and Shou Zi Chew to address pointed questions about child safety.

The regulatory message is clear. Platforms must demonstrate they can protect minors before governments force them to do so.

Age Verification Changes Online Anonymity

The security story goes beyond Discord.

Age verification changes how the internet works.

For years, platforms have grown by lowering barriers: easy sign-ups fuel network effects. Age checks reverse that logic. They add gates, collect signals, and reduce anonymity.

Supporters argue this is overdue. Children deserve protection. Platforms profit from scale and must bear responsibility.

Critics argue that normalizing face scans and ID uploads creates new surveillance norms. Once one platform succeeds, others follow. Sensitive data becomes a prerequisite for participation.

The trade-off is stark. Safety improves. Privacy shrinks.

Impact

Discord sits at the intersection of gaming, social chat, and creator communities. Its user base skews young. That makes it a test case.

If Discord’s rollout succeeds, other platforms will follow suit. If it fails, lawmakers may intervene with harsher rules.

Either way, the experiment changes expectations. Users now ask not only whether a platform is fun, but whether it is safe. And at what cost?

That question defines the next phase of social media.

TF Summary: What’s Next

Discord rolls out global age verification using face scans, IDs, and inference tools to restrict sensitive content by default. The measure aims to protect teens but has ignited a firestorm over privacy and trust following a prior data breach. The platform accepts potential user loss in exchange for safety-by-design.

MY FORECAST: Age verification will be implemented across leading platforms by 2027, driven by regulatory and liability pressures. Systems improve, but trust lags. Platforms that prove privacy-by-design regain users. Those that mishandle data lose them.