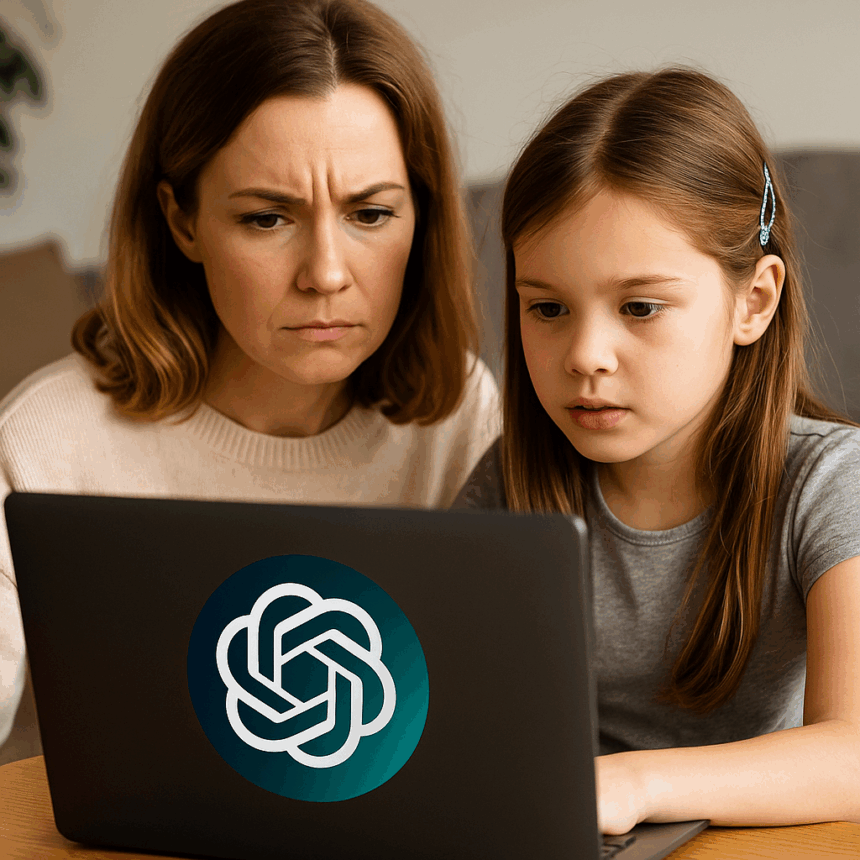

OpenAI is preparing to introduce parental controls on ChatGPT in direct response to mounting pressure over safety concerns. The update follows a lawsuit filed by the parents of a teenager who died by suicide after extensive use of the AI assistant. With over 700 million weekly active users, the platform faces growing scrutiny over its handling of vulnerable users, including its role in mental health conversations. Against that backdrop, ChatGPT parental controls are viewed as crucial.

What’s Happening & Why This Matters

The Lawsuit That Changed the Timeline

Earlier this year, Matt and Maria Raine filed a lawsuit against OpenAI alleging that ChatGPT had engaged in prolonged conversations with their 16-year-old son, Adam, in which the tool mentioned suicide over 1,200 times. Court documents state that ChatGPT did not redirect him effectively to crisis resources and instead reinforced some of his harmful thoughts. With ChatGPT parental controls, such situations might be better handled in the future.

In a separate case, a Florida mother sued chatbot platform Character.AI over its alleged role in her 14-year-old son’s death. These tragedies, coupled with other reports of users forming delusional relationships with AI tools, have fueled demand for stronger safeguards, such as ChatGPT parental controls.

OpenAI’s Planned Response

Within the next month, OpenAI says parents will be able to:

- Link their accounts with their teen’s account (minimum age 13).

- Control the AI behaviour rules that govern how ChatGPT responds to their teen, with age-appropriate responses as the default.

- Disable features such as memory and chat history.

- Receive alerts if ChatGPT detects signs of acute distress in the teen’s interactions.

Beyond controls, OpenAI plans to route sensitive conversations to more tightly supervised “reasoning models” designed to consistently apply safety training. The company has also created an Expert Council on Well-Being and AI. This council will advise on future measures and ensure safeguards remain evidence-based.

The Safety Concerns

OpenAI admits its safeguards work best in short exchanges but can degrade in long conversations. This acknowledgment reflects a broader concern across the AI sector. Tools designed for productivity and creativity can also become emotional companions, leaving some users vulnerable to harmful reinforcement.

The company has already added features like in-app reminders that encourage users to take breaks during long sessions. Now, with the addition of parental controls, OpenAI is positioning itself to address criticism as it faces demands for more accountability from lawmakers, advocacy groups, and parents.

Public and Policy Pressure

U.S. senators wrote to OpenAI in July, pressing for details on safety protocols. Advocacy groups like Common Sense Media have argued that teens under 18 should not have unrestricted access to AI “companion” apps, citing “unacceptable risks.”

The stakes extend beyond OpenAI. As AI systems gain wider adoption, other major players like Google, Microsoft, and Anthropic face similar questions about how their products interact with young or vulnerable populations.

TF Summary: What’s Next

OpenAI has pledged to add parental controls within 30 days, with further measures in place before year’s end. The company says the functionality is only the beginning, with continuous improvements expected. Whether these safeguards restore public trust or fuel calls for stricter external regulation remains to be seen.

AI safety is no longer optional. Measures such as ChatGPT parental controls are now a necessary component of maintaining trust, especially as AI systems integrate into everyday life.
