The race to harness artificial intelligence (AI) in national security is heating up. Anthropic, a leading AI startup backed by Amazon, recently launched a new suite of AI models tailored for U.S. government use. These models promise stronger handling of classified information, improved foreign language capabilities, and enhanced cybersecurity intelligence support. As AI becomes increasingly intertwined with defense operations, the stakes are rising for security, ethics, and international power dynamics.

What’s Happening & Why This Matters

Anthropic, the creator of the Claude chatbot, introduces Claude Gov, a specialized AI platform built to support sensitive U.S. government functions. This new iteration focuses on improving the AI's ability to process classified documents securely and to interpret complex intelligence data in real time. Governments worldwide, including those of the United States and Israel, already use AI in defense. Access to Anthropic's new models, however, is restricted to authorized users working within classified environments.

The company stresses that its models boost proficiency in critical foreign languages and dialects essential to U.S. national security operations. While details on specific languages remain confidential, this capability aims to improve global intelligence gathering and analysis.

Cybersecurity also features prominently. The new models offer improved interpretation of cybersecurity data, enabling intelligence analysts to detect and respond to digital threats more quickly. Anthropic envisions these tools as a force multiplier for government analysts tasked with protecting national infrastructure.

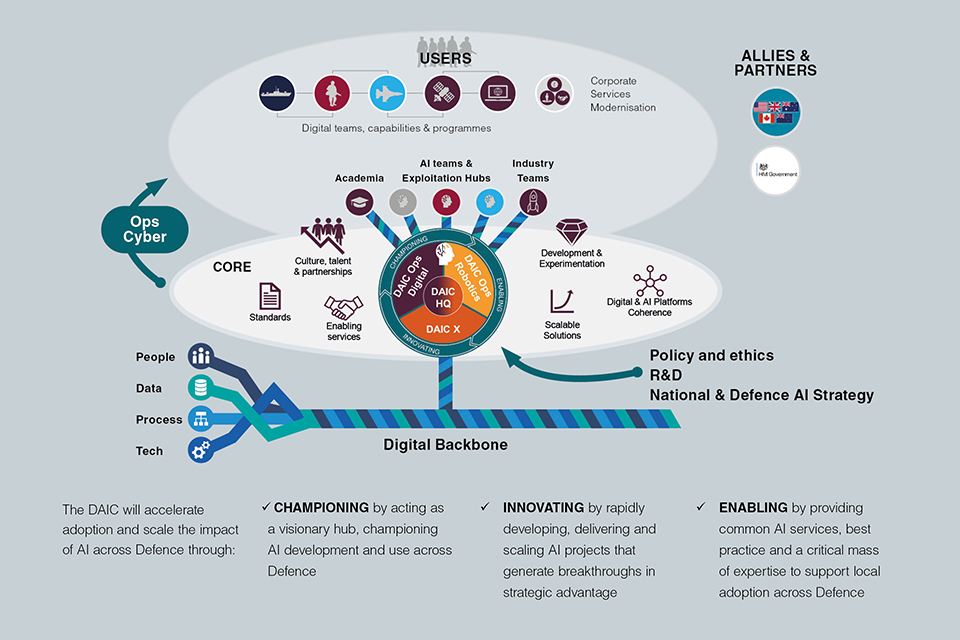

Anthropic openly pushes for closer integration between AI companies and intelligence agencies. The startup recently submitted recommendations to the U.S. Office of Science and Technology Policy (OSTP) calling for secure communication channels between AI labs and intelligence entities. It also advocates for streamlined security clearances to speed up collaboration. These proposals illustrate AI's growing influence on geopolitics and defense strategy.

However, the AI industry's deepening ties to national security agencies raise concerns among critics and whistleblowers. Edward Snowden, known for exposing government surveillance programs, publicly criticized the appointment of former U.S. Army General and NSA Director Paul Nakasone to OpenAI's board of directors, calling it a "willful, calculated betrayal." His comments reflect growing unease about AI companies' close ties to the military and intelligence sectors.

TF Summary: What’s Next

Anthropic's new AI models mark a turning point in integrating advanced AI into national security workflows. By improving the handling of classified documents and foreign language comprehension, the technology aims to enhance U.S. intelligence capabilities. The inclusion of cybersecurity analysis tools could further strengthen defenses against evolving digital threats.

Yet the close ties between AI companies and intelligence agencies spotlight crucial debates over privacy, transparency, and ethics. Going forward, technological progress must be paired with robust safeguards to ensure AI serves the public interest and respects civil liberties.
