When the architects of the digital world face human consequences in a courtroom, technology stops feeling abstract.

A courtroom in Los Angeles is the unlikely backdrop for one of the most consequential tech accountability battles of the decade. Mark Zuckerberg, CEO of Meta Platforms, testified before a jury for the first time about allegations that social media platforms harm children’s mental health. The trial focuses on whether companies intentionally design apps to maximise engagement at the expense of young users’ well-being.

Families claim platforms like Instagram trap minors in cycles of comparison, anxiety, and compulsive use. Meta denies wrongdoing and argues it continues to build safeguards. Yet the case reaches beyond one company. It probes how the modern attention economy shapes childhood itself.

The outcome matters. Hundreds of similar lawsuits hang in the balance. Policymakers watch closely. Tech companies quietly brace for new rules. Society, meanwhile, confronts a deeply uncomfortable question: what happens when the machines we built to connect us start shaping developing minds?

What’s Happening & Why This Matters

A Landmark Case Tests Social Media Accountability

At the case’s centre is a 20-year-old plaintiff who says she became addicted to social platforms as a child, leading to anxiety, depression, and body dysmorphia. Lawyers argue these systems deliberately exploit psychological vulnerabilities.

Internal documents presented in court suggest platforms track time spent and work to increase it. One memo reportedly projected that average user time would rise from about 40 minutes daily to nearly 46 minutes within a few years. Zuckerberg counters that engagement reflects product value, not manipulation.

He states that success comes from usefulness, not addiction. “I think a reasonable company should try to help the people who use its services,” he says under questioning.

Plaintiffs disagree. They argue that engagement metrics create incentives to keep users scrolling, regardless of the emotional cost. If proven, that premise threatens the business model of nearly every major social platform.

Teen Safety, Age Verification, and the Reality Gap

A key dispute centres on under-13 users. Instagram officially requires users to be at least 13 years old. Evidence suggests that younger children still have easy access.

Internal estimates from years ago suggested millions of underage users were already on the platform. Lawyers highlight how simple self-reported age fields fail when children lie about birthdays.

Zuckerberg acknowledges the difficulty. Teens often lack official ID. Detection systems improve but are imperfect. He notes that Meta removes accounts it identifies as underage, though critics argue that enforcement lags far behind the scale of usage.

One attorney presses the point bluntly: expecting a nine-year-old to navigate legal fine print feels unrealistic.

The gap between policy and practice exposes another serious issue. Digital platforms operate globally. Enforcement occurs locally and inconsistently. Children navigate a system designed primarily for adults.

Beauty Filters and the Manufactured Self

Another contentious topic involves appearance-altering filters. These tools simulate cosmetic procedures or reshape facial features. Experts warn they distort self-image, especially among teens.

The evidence presented includes internal employee warnings about pressure on young users, particularly girls. Zuckerberg defends the decision to allow filters in the name of free expression, though the company reportedly avoids promoting them.

The debate reveals a philosophical divide. Are platforms neutral tools or curated environments responsible for psychological outcomes?

The science is unsettled. Psychologists debate whether social media addiction qualifies as a clinical diagnosis. Still, many researchers document links between heavy usage and mental distress.

Instagram’s leadership frames high usage as “problematic use,” similar to watching too much television. Critics respond that interactive platforms amplify comparison, social pressure, and algorithmic reinforcement far beyond passive media.

Competing Narratives About Harm

Meta’s defence emphasises personal context. The company suggests that many plaintiffs faced difficult home environments or pre-existing challenges. Its lawyers argue that social media may not be the primary cause of mental health struggles.

Families push back fiercely. They describe behavioural changes tied to online interactions, harassment, or obsessive comparison cycles. Some parents present heartbreaking stories of self-harm and suicide.

Outside the courthouse, grieving families gather with photos of children they lost. For them, the trial represents more than legal accountability. It is a public reckoning.

One parent expresses cautious hope that court proceedings might finally drive change after years of legislative inaction.

Design vs Content: A New Legal Strategy

Tech companies have historically relied on legal protections that shield them from liability for user-generated content. The case sidesteps that defence. It targets product design instead.

The argument claims harm arises not from individual posts but from platform mechanics: algorithms, notifications, infinite scroll, and reward loops. If courts accept that theory, it could reshape the entire digital ecosystem.

Already, other companies face similar lawsuits. Some settle early. Others prepare for prolonged battles. Legal experts describe these cases as “bellwether trials,” meant to gauge how juries react before larger waves of litigation.

Meanwhile, governments worldwide are considering regulations to address youth access, algorithm transparency, and platform accountability. The trial feeds directly into that policy debate.

Technology’s Moral Growing Pains

Zuckerberg’s testimony exposes a familiar tension within Silicon Valley. Companies build systems that scale faster than society can understand them. By the time risks become clear, billions already depend on the technology.

The CEO insists Meta seeks long-term value, not short-term exploitation. Critics argue that profit incentives tell a different story. Both perspectives contain truth. Modern platforms deliver genuine connection and information while also amplifying comparison and compulsive behaviours.

Human psychology evolved for small communities. Social media creates vast digital arenas where social feedback arrives continuously and publicly. For adolescents, whose impulse control and sense of identity are still developing, the effects intensify.

The mismatch between ancient wiring and modern technology sits at the heart of the controversy.

TF Summary: What’s Next

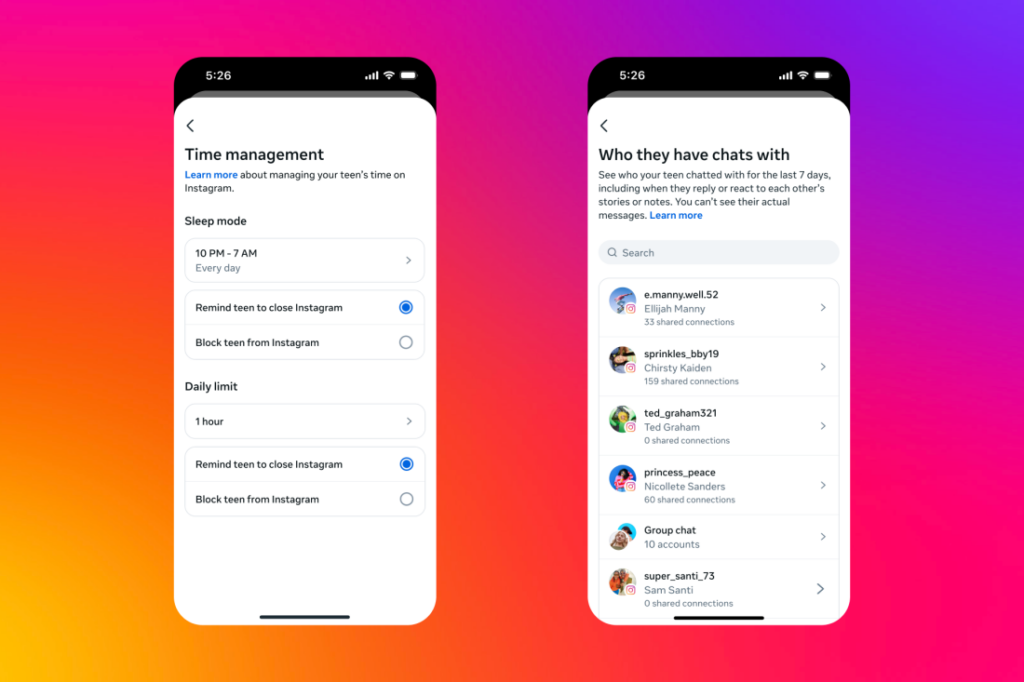

The trial signals a turning point. Courts examine not just what users post but how platforms shape behaviour. If juries side with plaintiffs, companies may need to redesign core features, strengthen age verification, and prioritise well-being metrics alongside engagement metrics.

Even if Meta prevails, public scrutiny alone will shift expectations. Parents demand safeguards. Regulators gain momentum. Competitors quietly study how to adapt before legal pressure reaches them.

Digital technology once promised connection without consequence. That illusion fades. Society now confronts the costs of building systems optimised for attention rather than flourishing.

MY FORECAST: Over the next decade, social media will transform from an unregulated frontier into a heavily regulated utility for younger users. Platforms survive, but childhood online becomes far more structured, monitored, and age-segmented. The era of frictionless teen access ends.
