Nvidia backs the AI kingmaker. OpenAI ships new science tooling. Researchers worry about paper spam.

Nvidia runs the toll booth for the modern AI boom. Every serious model training run burns silicon. Nvidia sells the pickaxes.

So when Nvidia’s CEO says the company plans its “largest ever” investment in OpenAI, it lands like a starter pistol for the next funding cycle. Jensen Huang frames it as a straightforward bet on momentum. He calls it “a good investment.”

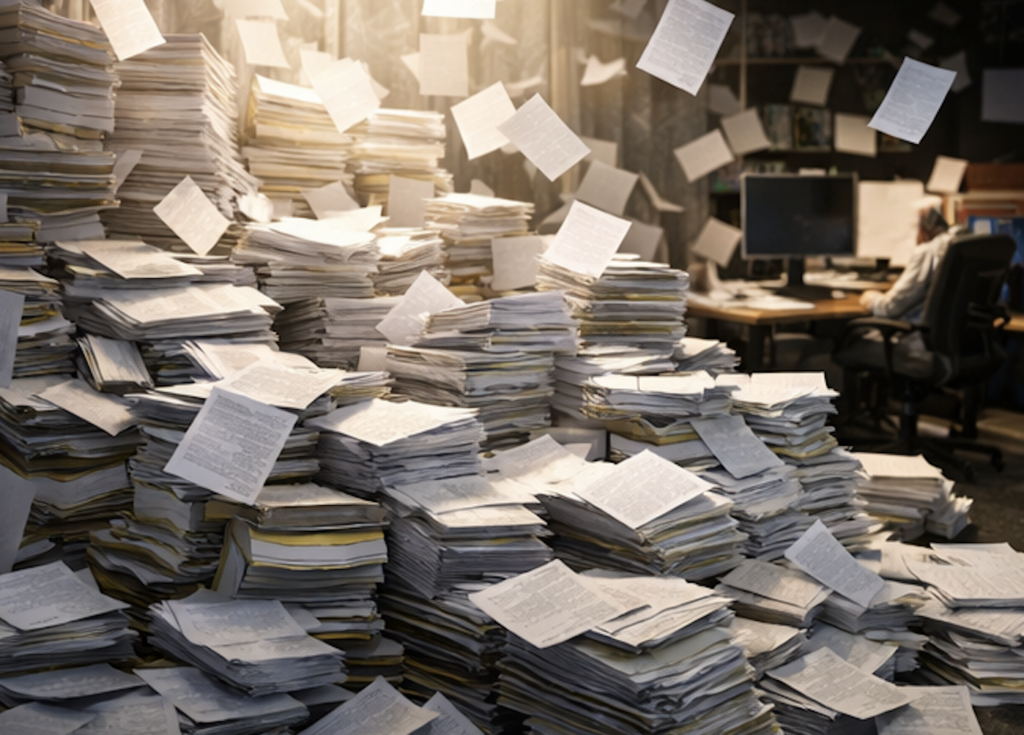

At the same time, OpenAI releases a new science-focused workspace named Prism. Prism accelerates paper writing and improves formatting. It also triggers a familiar fear: AI slop. Researchers warn that polished text can mask weak science. Journals already struggle with volume. Prism lowers the effort bar even more.

Put those together, and the tension gets fun. Nvidia invests in OpenAI’s future. OpenAI pushes deeper into knowledge work. Critics warn that AI output is starting to flood the market. The market then asks a blunt question.

Does more AI produce more value, or more noise?

What’s Happening & Why This Matters

Nvidia Signals Its “Largest Ever” OpenAI Cheque

Jensen Huang tells reporters that Nvidia will take part in OpenAI’s next financing round. He calls the deal “a good investment.”

He also pushes back on reports of internal doubts. A report says the deal sits “on ice.” The same report claims Huang criticises a lack of discipline in OpenAI’s business approach. Huang rejects that framing. He calls the idea “nonsense.”

The size matters, even without a number. Reporting on the deal notes a rumoured $100 billion figure tied to a prior partnership agreement. Huang says the investment looks nothing like that figure.

This move changes Nvidia’s position in the stack.

OpenAI drives demand for training clusters. OpenAI also drives demand for inference clusters. Inference means running models at scale for users. That workload eats GPUs too. Nvidia profits either way.

So why invest directly?

Because the best customer is the one who keeps building. Equity adds leverage. Nvidia gains upside beyond hardware margins. Nvidia also gains a seat closer to the roadmap.

There is also a defensive angle. Nvidia faces fierce platform competition. Cloud providers push custom chips. Model labs push efficiency tricks. Every breakthrough that reduces GPU demand threatens Nvidia’s growth story. A deep relationship with OpenAI lets Nvidia stay near the “front row” of model needs.

Reporting also connects OpenAI to giant infrastructure plans. It describes OpenAI looking at data centre spending in the hundreds of billions, plus power draw at nation-scale levels.

That kind of appetite is Nvidia’s core business.

‘Prism’ Boosts Speed, Then Raises ‘Slop’ Alarms

OpenAI releases Prism, a free AI-powered workspace for scientists. Prism integrates OpenAI’s GPT-5.2 model into a LaTeX-based editor. It supports drafting, citation help, diagram creation from sketches, and real-time collaboration.

OpenAI frames Prism as a time-saver. It targets formatting pains, literature scanning, and the busywork that drains researchers.

Kevin Weil, OpenAI’s VP for Science, ties Prism to a bigger thesis. He says, “2026 will be for AI and science what 2025 was for AI in software engineering.”

He also cites usage volume and says ChatGPT receives about 8.4 million weekly messages on hard science topics.

The scepticism lands fast.

Prism writes clean text, formats citations, and makes a manuscript look “done.” That polish can help real science. It can also help junk.

Weil acknowledges a core issue in public. He warns that the tool does not relieve the researcher’s duty to verify references. He says, “None of this absolves the scientist of the responsibility to verify that their references are correct.”

That line matters because AI systems can invent plausible citations. Traditional tools like EndNote format real references. They do not hallucinate sources. Prism sits in a different category. It blends editing with generation. That blend raises trust issues.

Now zoom out to the system level.

Peer review already runs hot. Editors triage mountains of submissions. Reviewers donate time. Journals face a backlog. Prism adds fuel to the paper machine.

A study published in Science in December 2025 finds that researchers using large language models increase output by 30–50%. The same study finds that AI-assisted papers perform worse in peer review.

That pairing creates a brutal dynamic.

More papers. Lower average quality. Same review capacity.

Cornell information science professor Yian Yin calls the pattern widespread. He says, “There’s a big shift in our current ecosystem that warrants a very serious look.”

Yale sociocultural anthropologist Lisa Messeri puts it even more sharply. She says, “Science is nothing but a collective endeavour.”

Her point hits at incentives. Tools that help individuals publish more can harm collective discovery if they flood the signal.

Nvidia’s Bet Rides on Trust Over Hype

Here is the weird truth. Nvidia’s success depends on AI value, not AI volume.

If AI output turns into junk at scale, users lose trust. Institutions tighten rules. Regulators step in. Budgets shift. That pressure can slow deployments. It can also change how organisations buy compute.

So Nvidia backing OpenAI during a slop debate looks risky on paper. In practice, it is a calculated risk.

OpenAI sits near the centre of enterprise AI adoption. Enterprises already run workflows through ChatGPT. They also test newer tools. When OpenAI expands into scientific tooling, it targets a large market. Research spending drives long-term innovation. Research also influences policy narratives.

If Prism boosts real productivity, OpenAI gains stickiness in labs, universities, and industry research groups. That stickiness flows into more training, more fine-tuning, and more inference. Nvidia sells the hardware behind those loops.

If Prism triggers a slop spike, backlash grows. Journals tighten gates. Funders demand stronger disclosure. Editors adopt stricter screening. That response slows publication noise. It also forces better tooling norms.

In that scenario, OpenAI still wins if it adapts faster than rivals. Nvidia still wins if model demand stays high.

Competition stays real. Reporting notes OpenAI worries about pressure from Google and Anthropic. It also mentions an internal focus on improving ChatGPT’s day-to-day experience after benchmarking losses.

That competitive push creates a second reason for Nvidia to invest.

Nvidia invests in the lab that drives the market narrative. Narrative shapes adoption. Adoption shapes compute demand.

This is why “slop” matters so much. Slop is not just cringe content. Slop is a tax on attention and a tax on trust. When trust drops, buyers hesitate.

OpenAI’s Prism launch sits right on that fault line.

OpenAI states that Prism does not conduct research independently. Humans stay accountable.

That stance sets a standard. It also sets expectations. If Prism users submit junk at scale, the brand takes the hit. Nvidia’s investment then rides on OpenAI proving discipline, not just speed.

TF Summary: What’s Next

Nvidia signals a deep commitment to OpenAI’s next funding round, even as reports swirl about internal doubts. Jensen Huang frames the investment as good business and rejects claims of dissatisfaction. At the same time, OpenAI launches Prism, a scientist-friendly writing workspace that boosts productivity while raising fresh concerns about AI slop in journals.

MY FORECAST: Nvidia doubles down on “compute demand certainty.” OpenAI addresses slop anxiety with stricter provenance controls, stronger citation verification workflows, and clearer disclosure tools. Journals respond with tougher screening. The market rewards the teams that treat trust as a product feature, not a press release.
