A creative backlash challenges how artificial intelligence learns, who profits, and where the legal lines get drawn

The creative world is drawing a hard line in the sand. Artists, writers, musicians, and actors now speak with unusual unity against artificial intelligence companies. Their claim stays blunt and emotional. Training AI models on copyrighted work without permission equals theft. This argument no longer lives at the fringe. It now shapes public debate, courtrooms, and boardrooms across the tech sector.

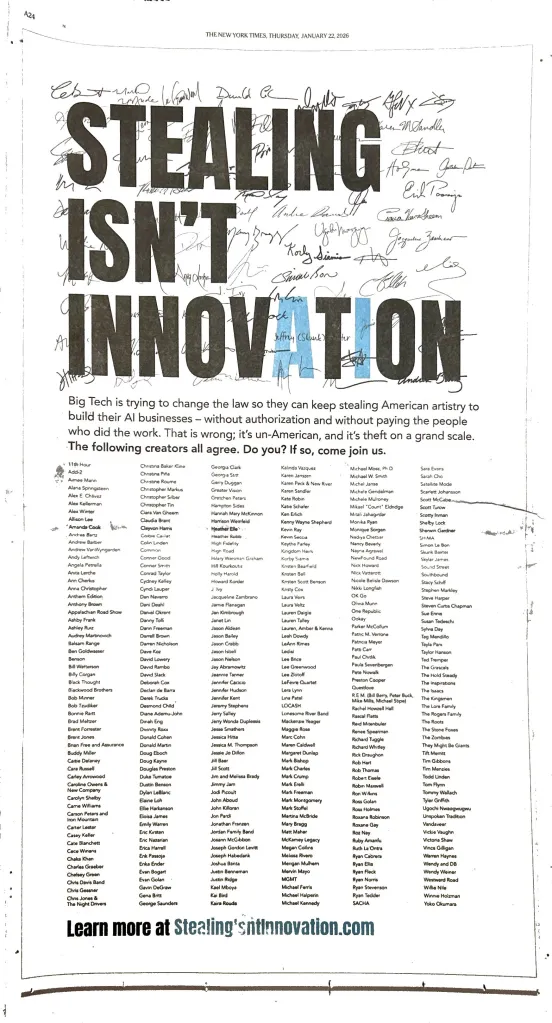

In recent months, hundreds of high-profile creators have signed an open letter accusing major AI developers of exploiting their work. The group argues that AI firms scrape books, films, songs, photographs, and scripts on a massive scale. They say this happens without consent, compensation, or credit. As AI systems grow more capable, artists fear a future where machines learn from human creativity while replacing the very people who made that creativity possible.

The debate defines one of the decade’s most critical technological conflicts. It blends copyright law, ethics, economics, and cultural survival into a single fight. And it forces governments to confront how innovation should work when machines learn from human labor.

What’s Happening & Why This Matters

A Collective Stand From the Creative World

More than 800 artists signed an open letter organized by the Human Artistry Campaign as part of its Stealing Isn’t Innovation movement. The signatories include globally recognized actors, musicians, authors, and filmmakers. Their message is direct. AI companies must stop using copyrighted work without permission. They must negotiate licenses instead. They must respect creative labor as property, not raw material.

The letter warns that the creative economy fuels jobs, exports, and cultural influence. It argues that unchecked AI training threatens that foundation. One passage states that some of the largest technology firms build AI platforms “without authorization or regard for copyright law.” That language signals both frustration and legal intent.

The scope of resistance surprises many observers. Creators across mediums align behind a single demand. They want ethical data sourcing and payment. They want agency over how their work trains machines.

This moment matters because it marks a shift. Artists no longer react individually after damage occurs. They are organizing and establishing the issue publicly. They are urging lawmakers and courts to respond before AI becomes too entrenched to regulate.

How AI Training Actually Works

Modern AI systems learn by ingesting massive datasets. These datasets include text, images, music, video, and voice recordings. Engineers feed this material into neural networks. The systems then identify patterns. Over time, they generate new content that mimics style, tone, and structure.

Much of this data comes from open internet scraping. Books, news articles, illustrations, film scripts, and music tracks are often included in training sets without explicit permission. AI companies defend this practice using the doctrine of fair use. They argue that training transforms the material rather than merely reproducing it.

Artists reject that logic. They argue that training copies the work at scale. They say the output competes with the original creators. In their view, transformation does not erase appropriation. Scale magnifies harm rather than minimizing it.

This technical process now sits at the center of legal and ethical scrutiny. How machines learn becomes just as important as what they produce.

Lawsuits Multiply Across the Globe

Legal pressure intensifies alongside public protest. Roughly 60 lawsuits are moving through U.S. courts involving authors, visual artists, music publishers, and news organizations. Similar cases emerge in Europe and the United Kingdom. Outcomes vary. Some judges allow claims to proceed. Others dismiss early arguments while leaving room for future appeals.

These cases test the limits of existing copyright law. Most statutes predate machine learning by decades. Courts now face questions that lawmakers never anticipated. Does ingesting copyrighted material count as copying? Fair use. Does similarity in output prove infringement?

No single ruling resolves everything yet. Still, the sheer volume of cases signals systemic tension. AI companies prepare for long legal battles. Artists prepare for precedent-setting decisions.

The Voice Controversy That Changed the Tone

Public awareness spikes after a high-profile dispute involving Scarlett Johansson and OpenAI. Johansson objects to an AI voice that sounds strikingly similar to her performance in the film Her. Her legal team sends formal letters asserting that the company lacks the right to deploy a voice resembling her own.

OpenAI pauses the voice shortly after. The company denies intentional imitation. Still, the incident resonates deeply across the creative community. It illustrates how AI training blurs identity, performance, and ownership. Voice, once considered deeply personal, now becomes reproducible at scale.

This moment changes the conversation. AI training stops feeling abstract. It becomes intimate. It touches faces, voices, and careers that people recognize.

Tech Defenses And Fractures

AI developers respond with mixed strategies. Some double down on fair use arguments. They emphasize innovation and global competition. Others quietly explore licensing deals, revenue sharing, and opt-out systems.

Executives argue that restrictive rules risk slowing progress. They warn that limiting training data favors large incumbents with private datasets. Startups struggle to compete under heavy licensing regimes.

At the same time, internal divisions surface. Engineers express concern about legal uncertainty. Product teams fear reputational damage. Investors demand clarity. AI companies now face pressure not just from artists but also from markets.

The result feels unstable. Innovation continues. But confidence erodes.

Governments Enter the Arena

Policymakers can no longer ignore the issue. Lawmakers in the United States, the European Union, and the United Kingdom hold hearings and consultations. Regulators explore transparency rules for training data. Some propose compulsory licensing schemes. Others examine opt-in registries for creators.

Europe moves fastest. Its AI Act includes provisions on transparency in training and copyright safeguards. The U.S. takes a more fragmented approach. Courts carry much of the burden. Agencies hesitate to move too aggressively.

This regulatory patchwork increases complexity. AI firms must navigate different rules across regions. Creators worry about loopholes. The global nature of AI training complicates enforcement.

Still, momentum builds. Governments now treat AI training as a labor and rights issue, not just a technical one.

Culture, Power, And The Meaning Of Creation

At its core, this conflict reaches beyond law. It asks philosophical questions about creativity itself. Who owns style or controls influence? Who benefits when machines learn from millions of human lives?

Artists argue that creativity reflects lived experience. Innovators say that learning from data mirrors how humans learn. The disagreement centers on consent and compensation.

This tension shapes public trust in AI. Systems perceived as exploitative face resistance. Systems built on partnership may earn legitimacy.

The outcome influences how society values creative work in an automated age.

TF Summary: What’s Next

The fight over AI training and copyright accelerates. Artists organize earlier and louder. Courts move slowly but steadily. Tech companies face pressure to adapt or litigate. Licensing models, opt-out systems, and transparency standards now move from theory to necessity.

MY FORECAST: The industry shifts toward negotiated access rather than silent scraping. Early movers gain trust and stability. Late movers face lawsuits and regulations. AI progress continues, but it grows more expensive, more accountable, and more human-aware.

— Text-to-Speech (TTS) provided by gspeech | TechFyle