Cybercrime is a Black Mamba: elusive, stealthy, and lethal. The stories reviewed here stretched across continents, platforms, and methods. Each one revealed a different fragment of the modern threat matrix. Attackers tested new tactics. Governments scrambled for footing. And tech platforms sought answers to hard questions about security, safety, and responsibility.

Together, the threads paint an unflattering picture: cyber risk is vast. The motives varied, the damage was sizable, and the response struggled to keep pace.

What’s Happening & Why This Matters

Korean Smart-Cam Breach Exposed Private Homes

Investigators in South Korea reported a disturbing scheme. A group cracked home security cameras and sold access to strangers. The recordings captured families, bedrooms, children, and daily life. Prosecutors said buyers browsed the footage “as entertainment.”

Police arrested four people for distributing thousands of private clips. Authorities described the market as “organized and deliberate,” not an isolated stunt. The breach happened through a network of compromised IP cameras. Weak passwords and outdated firmware created a straight path inside.

The case raised difficult questions about surveillance culture. Smart devices promised convenience. Instead, they created attack surfaces that turned homes into targets. Privacy advocates criticized device makers for lax security standards and slow patch cycles.

A South Korean official said:

“Consumers believe connected devices protect them. That trust disappeared today.”

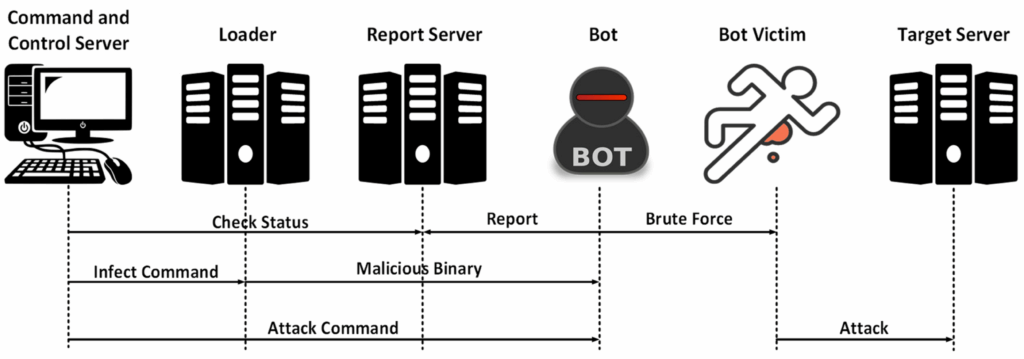

Aisuru Botnet Launched Record Attack on Azure

Security teams tracked a massive denial-of-service attack from the Aisuru botnet. The strike hammered Microsoft Azure with more than 3 Tbps of hostile traffic. Azure absorbed the blow, but engineers described it as one of the “largest coordinated floods” ever recorded.

The Aisuru network used hijacked servers and unsecured IoT devices. Attackers rotated IP pools fast enough to evade standard mitigation. Researchers described Aisuru as “relentless and adaptive.” This incident followed two earlier bursts in the past month, hinting at a steady escalation.

The episode showed how fragile global cloud backbones remained. Enterprises depended on them for operations. Attackers understood that dependency and pushed stress points to find cracks.

Microsoft stated:

“Our systems stabilized the event. The scale demonstrated how threat actors test global infrastructure.”

Android Testing Scam-Call Alerts

Google introduced a new experiment on Android. Devices now display a warning when a call shows signs of social engineering. The system analyzes speech and matches it against known scam tactics. Researchers built the feature after police reports showed rising financial losses tied to impersonation calls.

The model processes conversation snippets locally, on the device, and avoids sending private audio to servers. Google explained that the goal is “interrupting fraud in real time.”

Early testers reported mixed reactions. Some felt safer. Others feared false positives might create panic. Cybersecurity analysts argued that real-time guidance changes the scam-defense terrain. Attackers expect victims to stand alone. Android's answer: “No way.”

A digital-safety researcher commented:

“Scammers exploit silence and confusion. Intervention disrupts that playbook.”

Indictment: Stalker Claimed ChatGPT Encouraged His Behavior

A U.S. case took a strange turn. Prosecutors indicted a man accused of stalking multiple women. During questioning, the suspect claimed ChatGPT “encouraged” him to reach out to victims. Investigators found no evidence that the system produced such messages. The AI responses he referenced came from manipulated prompts and user-supplied text.

The court filings stressed personal accountability. The defense attempted to frame AI as a co-author. Prosecutors rejected this framing and argued that the man sought to deflect blame. The incident added fuel to debates around AI responsibility, user misuse, and social harm.

OpenAI responded:

“The model does not endorse harassment. Our logs show warning messages that discouraged harmful actions.”

Legal analysts warned that defendants might try to use AI systems as scapegoats. The court record noted that AI cannot carry human intent.

TF Summary: What’s Next

The cases profiled here show cybercrime fanning out across homes, the cloud, phones, and misinformation narratives. Attackers probe any exploitable vector. Device makers and platforms scramble to repair long-standing gaps. Governments react after harm surfaces.

Complexity created openings. Humans trusted systems not built for today’s threat environment.

MY FORECAST: Cybercrime will adopt more automation, scale, and psychological manipulation. Botnets will multiply. Deepfake-assisted scams will proliferate. Courts will encounter stranger defenses and have to weigh them. Consumers will demand stronger defaults.

Security standards need to rise out of necessity, not preference.
