Europe’s tight grip on AI governance ignites an essential debate. Does this intense regulation protect users and enhance innovation, or does it choke the very progress it aims to nurture? The European Commission and member states actively regulate powerful tech firms to safeguard citizens. But their approach raises a pointed question: Is Europe building a haven for AI, or erecting barriers that other regions won’t face?

What’s Happening & Why This Matters

Europe’s Regulatory Ambition: Protect or Paralyze?

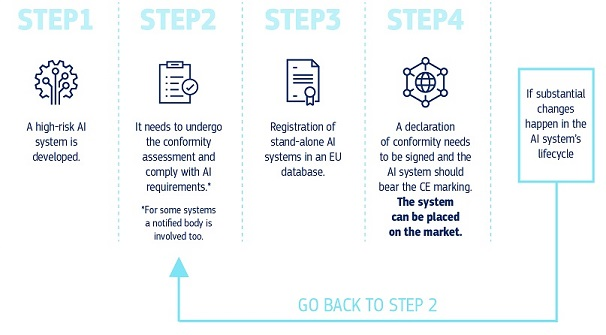

The EU’s AI Act represents one of the strictest AI regulatory frameworks worldwide. It categorizes AI applications by risk and demands transparency, accountability, and human oversight for high-risk uses. These rules extend far beyond the tech industry, affecting healthcare, finance, and public services. On paper, it serves as a blueprint for ethical AI. In practice, it risks burying startups and researchers under red tape and high compliance costs.

European regulators have a blunt message: tech titans (e.g., Meta, Google, Apple, Microsoft) wield immense power and must be held accountable. Denmark’s Digital Minister, Caroline Stage Olsen, doesn’t mince words: “The scale of these companies is staggering. They must be partners in protecting citizens, not unchecked forces.” This stance challenges Silicon Valley’s traditional freedom to innovate without heavy oversight.

Yet, the sheer weight of regulation can backfire. Small and medium enterprises may lack the resources to navigate complex rules. This raises a question few regulators answer: Does the EU want to lead AI innovation or control it so tightly that breakthroughs happen elsewhere?

User Protection: A Double-Edged Sword Wrapped in Privacy Concerns

The EU’s approach to online safety reaches into every corner of the digital ecosystem. Laws like the Digital Services Act (DSA) and the Audiovisual Media Services Directive (AVMSD) enforce content moderation, age verification, and user data protection. These measures aim to shield minors from harmful content and curb misinformation.

The problem lies in execution. Age verification, tied to the European Digital Identity Wallet, requires users to disclose personal information to access services. This raises privacy alarms. Critics argue these controls risk excluding vulnerable populations and creating gatekeepers in the digital realm. What good is protection if it erects walls that shut out the very people it intends to shield?

Meta’s proposal to alert parents when children download apps illustrates the tech industry’s new role as guardian. While well-intentioned, it also signals a growing surveillance apparatus within consumer products. Are users truly safer, or just more closely watched?

Innovation on the Line: Ethical Safeguards or Innovation Roadblocks?

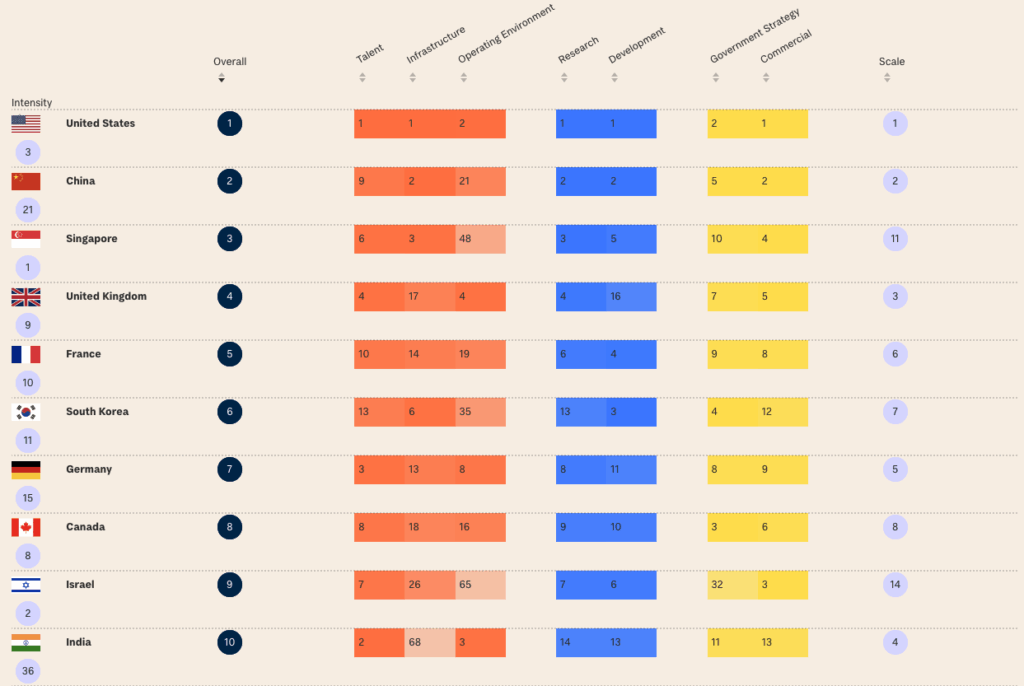

Innovation requires risk-taking and experimentation. Europe’s stringent AI laws may unintentionally punish pioneers. High compliance costs, slower product rollouts, and legal uncertainty make Europe a tough market. Startups often run on thin budgets and cannot afford lengthy legal reviews. This could push fresh ideas to the U.S., China, or other regions with lighter regulation.

There is, however, a strong case that regulation builds public trust. Ethical AI design, transparency, and accountability can make users more comfortable adopting new technologies. A mistrustful user base slows adoption, which stifles innovation regardless of the regulatory climate. The question is: Can Europe define a space where safety and creativity coexist?

Academic Integrity and AI: The Gray Area of Innovation

The academic world faces fresh ethical dilemmas with AI’s rise. Recent reports indicate that some researchers are embedding hidden AI prompts in their papers, instructions designed to coax AI tools used by peer reviewers into writing positive reviews. This practice blurs the line between legitimate AI assistance and manipulation, threatening the foundation of scientific integrity.

The EU and universities are pushing for clear disclosure guidelines on AI’s role in research. Yet the tension remains between encouraging AI-assisted innovation and maintaining rigorous ethical standards. This debate points to a larger issue: How do regulators enforce rules without stifling creative use of AI tools in academia?

Estonia: AI and Education

Estonia’s AI Leap program offers a hopeful model. The government trains teachers to use AI responsibly in classrooms, partnering with OpenAI and Anthropic to develop regionally appropriate chatbots. President Alar Karis notes, “Our students are already experimenting with AI creatively. The goal is to give them tools and ethics to guide that energy.”

Estonia’s approach demonstrates that AI governance need not be a brake on innovation. Early education in AI ethics fosters a digitally literate generation that is prepared to harness AI as a force for good. Governance isn’t just rules; it’s also culture and education.

The Politics: Power, Influence, and the Global AI Race

The EU’s firm governance reflects its ambition to rein in AI’s already extensive influence. The Commission’s enforcement and member states’ cooperation send a clear signal: Europe demands control over AI’s impact. But this political will risks creating friction with industry players and other nations.

Regulators must balance innovation, leadership, and ethical responsibility. Other countries may adopt a laissez-faire attitude to AI, embracing rapid growth with less oversight. Europe’s choice could either position it as a moral leader or isolate it from the most dynamic AI development hubs.

TF Summary: What’s Next

Europe stands at a crossroads. Its AI governance efforts promise user safety and ethical innovation, but the path forward remains challenging. Europe must fine-tune its rules to encourage startups while protecting citizens. Programs like Estonia’s show the value of blending education with regulation.

The global AI race is intensifying, and Europe’s decisions now will define its technological and ethical leadership. The key lies in crafting governance that guides AI responsibly without throttling innovation.

— Text-to-Speech (TTS) provided by gspeech