In an age of rapidly advancing technology, tools designed for convenience and connectivity are being repurposed to cause harm. Two recent attacks in the U.S. have shed light on how ordinary tech, from smart glasses to generative AI, has been used by individuals to plan and prepare violent acts, raising significant concerns about the potential for misuse. Let’s take a closer look at how these tools were involved and why this matters.

What’s Happening & Why This Matters

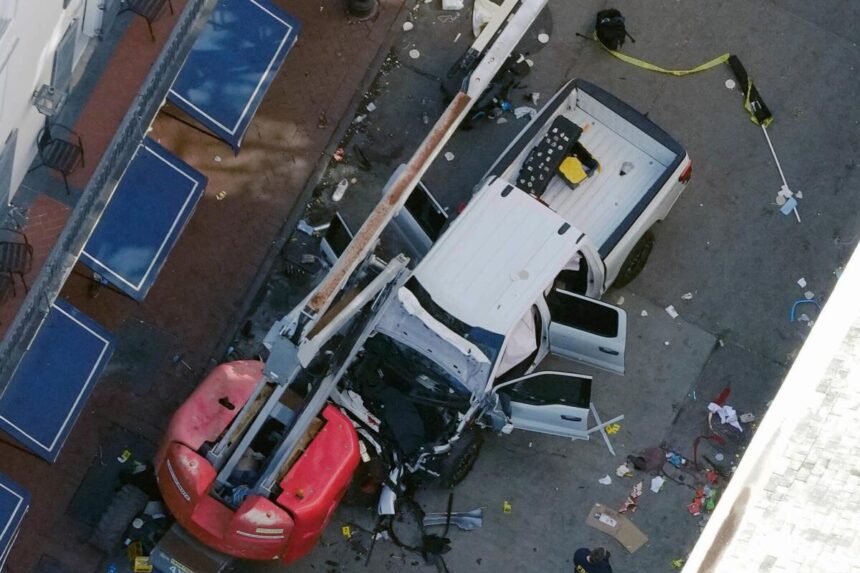

Smart Glasses Used in New Orleans Attack

In the New Year’s Day attack in New Orleans, the assailant, Shamsud-Din Jabbar, used Meta’s smart glasses to scout and record footage of Bourbon Street beforehand. The FBI revealed that Jabbar had used the glasses to film the area months before the attack, capturing key locations while cycling through the city. During the attack, he drove a rented pickup truck into a crowd, killing 14 people. He had also planted explosive devices on Bourbon Street, which he intended to detonate remotely.

Meta’s smart glasses, a product of its collaboration with Ray-Ban, cost around $300. They allow users to take photos, record videos, and even interact with Meta’s AI tool. While Jabbar did not live stream or record during the actual attack, his use of the glasses to film the area beforehand highlights the potential risks posed by technology designed for convenience. This is a reminder that seemingly innocent devices can be weaponized with devastating consequences.

The FBI continues investigating and offers support for victims and their families through its Victim Services Response Team.

ChatGPT Aids Attack in Las Vegas

In another disturbing incident, Matthew Livelsberger, who blew up a Tesla Cybertruck outside the Trump International Hotel in Las Vegas, used ChatGPT, a generative AI tool, to plan his attack. Livelsberger used ChatGPT to look up information on explosives and ammunition, showing how such a tool can be misused to facilitate violent acts. Although Livelsberger did not intend to kill anyone, his actions, which caused minor injuries to seven people, show the potential dangers of AI when used irresponsibly.

Sheriff Kevin McMahill of the Las Vegas Metropolitan Police Department called this a “game-changer” for law enforcement. The use of generative AI in such a manner is alarming, especially given that ChatGPT and similar tools are designed to be accessible and user-friendly. While OpenAI, the creator of ChatGPT, has stated that its tools are designed to refuse harmful instructions, this incident underscores the growing need for vigilance over how AI tools are used.

Political grievances and societal issues drove Livelsberger’s actions, but the core takeaway is technology’s role in facilitating the attack. This is the first known instance of ChatGPT being used to assist in such a plot on U.S. soil.

TF Summary: What’s Next

These two incidents are real-world examples of how technology designed for everyday use can be turned to malicious ends. As the FBI and law enforcement agencies widen their investigations, it’s clear that the need for stronger regulation and monitoring of smart devices and AI tools is more pressing than ever. While these technologies offer convenience and innovation, they also carry risks that must be addressed to prevent future misuse. The path forward requires balancing the benefits of innovation with the need for safety and responsibility in an increasingly connected world.
